Neuromorphic Computing: The Secret Weapon of Artificial Neural Networks?

Imagine a world where computers can not only perform complex calculations but also learn, adapt, and recognize patterns just like the human brain. This isn’t science fiction anymore. Neuromorphic computing, a revolutionary approach inspired by the brain’s structure and function, is rapidly evolving and has the potential to redefine the future of technology.

Unlike traditional computers that rely on simple on/off states (0s and 1s), neuromorphic computing mimics the brain’s intricate network of neurons and their electrical signals. This bio-inspired approach promises to unlock entirely new ways for computers to process information, paving the way for a future where machines can learn and adapt like never before.

How does the Human Brain Work?

The human brain is a marvel of evolution, cramming an estimated 86 billion neurons into a 3-pound package. These aren’t just simple switches – they’re the fundamental units of information processing, forming a complex network that underpins everything from the simplest sensory perception to the most intricate thoughts and emotions. Let’s delve deeper into this fascinating organ to understand how it works and how neuromorphic computing aims to mimic its brilliance.

The Busy Bee of the Brain: The Neuron

Imagine a microscopic tree: a cell body with short, branching extensions called dendrites and one long extension called an axon. This is the basic structure of a neuron, the brain’s workhorse. Information enters the neuron through the dendrites, which receive signals from other neurons. These signals can be excitatory (pushing the neuron to fire) or inhibitory (dampening its activity).

The Spark of Communication: Synapses

The information exchange between neurons doesn’t happen through direct contact. Instead, a tiny gap called a synapse bridges the space between them. When the combined incoming signals push a neuron past a certain threshold, it fires an electrical impulse down its axon. This impulse, however, doesn’t jump directly to the next neuron. Instead, it triggers the release of neurotransmitters, chemical messengers that travel across the synapse.

The Strength of the Connection: It’s All About Plasticity

Here’s where things get truly fascinating. The neurotransmitters don’t just activate the receiving neuron – they can also influence the strength of the connection between the two neurons. Repeated firing and successful communication can strengthen a synapse, making it easier for the sending neuron to trigger a signal in the receiving neuron. Conversely, lack of activity can weaken the connection. This dynamic process, known as plasticity, allows the brain to constantly learn and adapt, forming new connections and strengthening existing ones based on experiences.
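To make the idea of plasticity a little more concrete, here is a minimal Python sketch of a connection that strengthens when two neurons fire together and fades when they don’t. It is only an illustration: the function name `hebbian_update`, the 0-to-1 weight range, and the `learning_rate` and `decay` values are assumptions made for this example, not measurements from biology.

```python
def hebbian_update(weight, pre_active, post_active, learning_rate=0.1, decay=0.02):
    """Return the synaptic weight after one time step.

    The weight grows toward 1.0 when both neurons are active together
    and slowly decays toward 0.0 otherwise. All constants are illustrative.
    """
    if pre_active and post_active:
        weight += learning_rate * (1.0 - weight)  # strengthen, saturating at 1.0
    else:
        weight -= decay * weight                  # unused connections weaken
    return weight


initial = 0.2
weight = initial
for _ in range(10):   # repeated joint firing strengthens the synapse
    weight = hebbian_update(weight, pre_active=True, post_active=True)
strengthened = weight

for _ in range(10):   # a quiet period weakens it again
    weight = hebbian_update(weight, pre_active=False, post_active=False)
weakened = weight

print(f"start: {initial:.3f}, after co-activation: {strengthened:.3f}, "
      f"after inactivity: {weakened:.3f}")
```

Real synapses are far more complicated, but the sketch captures the two tendencies described above: use strengthens a connection, and disuse lets it fade.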

The Neural Networks

The power of the brain doesn’t lie in individual neurons but in their interconnectedness. Billions of neurons are connected by trillions of synapses, forming intricate networks that process information in parallel. These networks are responsible for everything we do, from seeing a red apple to feeling happiness or solving a complex math problem. The specific way neurons are connected and the strength of these connections determine how information flows through the brain, shaping our thoughts, memories, and actions.

Neuromorphic Mimicry: From Biology to Electronics

Neuromorphic computing takes a page from this biological marvel. Neuromorphic engineers, combining expertise from biology, computer science, physics, mathematics, and electrical engineering, design computer chips that mimic the structure and function of the brain:

Artificial Neurons: Beyond On/Off Switches

Traditional computers rely on transistors, tiny switches that can be either on (1) or off (0). This binary system works well for basic calculations but struggles with tasks that require complex information processing, like recognizing patterns or adapting to new situations.

Neuromorphic computing takes a different approach. Rather than using transistors only as binary switches, neuromorphic chips arrange them into circuits that behave as artificial neurons. These are inspired by the biological neurons in our brains and can process information in a more nuanced way. Here’s how:

- Weighted Inputs: Unlike a simple on/off switch, artificial neurons can receive multiple inputs, each with a varying strength or “weight.” This weight reflects the importance of each input, similar to how connections between biological neurons can be strengthened or weakened based on experience.

Reference: “A Learning Rule for Fixed Point Networks” by Terry Sejnowski et al. (1988): explores how learning algorithms can adjust these weights in artificial neural networks.

- Non-Linear Activation Functions: Biological neurons don’t respond in simple proportion to their inputs; they stay quiet until the combined input is strong enough and then fire. Artificial neurons capture this with non-linear activation functions, which determine whether the weighted sum of inputs is strong enough to trigger an output signal.

Reference: “Deep Learning” by Ian Goodfellow, Yoshua Bengio, and Aaron Courville (2016): This comprehensive textbook provides a detailed explanation of activation functions used in artificial neural networks.
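The two ingredients above, weighted inputs and a non-linear activation, fit in a few lines of Python. This is a minimal sketch rather than how any particular chip works: the sigmoid is just one common choice of activation function, and the input values, weights, and bias are made up for illustration.

```python
import math


def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def artificial_neuron(inputs, weights, bias=0.0):
    """Compute the weighted sum of the inputs and pass it through a sigmoid."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(weighted_sum)


inputs = [1.0, 0.5, 1.0]
weights = [0.8, -0.4, 0.3]   # the negative weight acts like an inhibitory input
output = artificial_neuron(inputs, weights, bias=-0.5)
print(f"neuron output: {output:.3f}")
```

Changing a weight changes how much that input matters, which is exactly the knob a learning algorithm turns.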

Spiking Neural Networks (SNNs): The Power of Timing

Another key difference between traditional computers and neuromorphic systems lies in how information is transmitted. Traditional systems move data on every clock cycle whether or not anything has changed, while SNNs mimic the brain’s use of timed electrical pulses (spikes) and communicate only when a neuron fires.

- Energy Efficiency: SNNs can potentially be more energy-efficient than traditional systems because they only transmit information when necessary, utilizing periods of silence between spikes.

Reference: “Low-Power Spiking Neural Networks on Processor Architectures” by Catherine Meador (2011): This paper explores the potential for energy-efficient neuromorphic computing using SNNs.

- Sparse Coding and Pattern Recognition: The timing and frequency of spikes may hold valuable information, similar to how the brain uses the timing of action potentials in biological neurons. This opens doors to exploring SNNs for tasks like pattern recognition and temporal processing.

Reference: “Sparse Coding and Information Processing in Spiking Neural Networks” by Wolfgang Maass (2010): This paper delves into the potential of SNNs for sparse coding, a technique for efficient information representation.
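A common textbook model for a spiking neuron is the leaky integrate-and-fire (LIF) neuron, which this article does not name but which illustrates the idea well. The Python sketch below is a simplified, discrete-time version; the `threshold`, `leak`, and input values are assumptions picked so the behavior is easy to see.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Simulate a leaky integrate-and-fire neuron over discrete time steps.

    Returns a list with one entry per step: 1 where the neuron spiked, else 0.
    """
    potential = reset
    spikes = []
    for current in input_current:
        potential = leak * potential + current  # leak a little, then integrate input
        if potential >= threshold:
            spikes.append(1)
            potential = reset                   # fire and reset
        else:
            spikes.append(0)
    return spikes


weak_spikes = simulate_lif([0.05] * 20)    # input too weak to ever reach threshold
strong_spikes = simulate_lif([0.4] * 20)   # stronger input produces regular spikes
print("weak input  :", weak_spikes)
print("strong input:", strong_spikes)
```

With the weak input the neuron stays silent, so nothing needs to be transmitted. With the stronger input it fires at regular intervals, and both the number of spikes and their timing carry information about the input.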

Interdisciplinary Collaboration

The field of neuromorphic computing thrives on the expertise of researchers from various fields:

- Biology: Understanding the structure and function of neurons and synapses is the foundation for designing artificial neurons and communication pathways in neuromorphic chips.

- Computer Science: Scientists develop algorithms and software for training and optimizing artificial neural networks on neuromorphic hardware for specific tasks (e.g., image recognition, natural language processing).

- Physics and Mathematics: These fields play a crucial role in designing and simulating the behavior of neuromorphic circuits at a microscopic level, considering factors like material properties and electrical interactions.

- Electrical Engineering: Engineers translate the biological and computational models into real-world hardware. They design and fabricate neuromorphic chips that are energy-efficient, scalable, and capable of performing complex computations inspired by the brain.

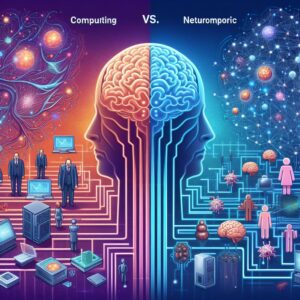

Von Neumann vs. Neuromorphic Computing

For decades, computers have relied on the von Neumann architecture, a well-established design that has powered technological advancements from the personal computer to space exploration. However, a new challenge is emerging – neuromorphic computing. Let’s delve into the characteristics of each and see how they compare:

The Von Neumann Architecture:

- Structure: This architecture separates the processing unit (CPU) from the memory unit. Information is fetched from memory, processed by the CPU, and then stored back in memory. This sequential approach ensures clarity and efficiency for many tasks.

- Data Processing: Data is represented as binary digits (0s and 1s) and, in the classic model, processed serially, with the processor working through instructions one after another.

- Strengths: The von Neumann architecture is highly structured, predictable, and efficient for well-defined tasks. It has been the foundation for most computers built to date due to its simplicity and scalability.

- Limitations: This architecture struggles with tasks that require high levels of parallelism, like pattern recognition or complex decision-making. Additionally, the constant data movement between memory and CPU can be energy-consuming.
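The fetch, process, and store cycle described above can be sketched as a toy machine in Python. Everything here is hypothetical: the four-instruction set (`LOAD`, `ADD`, `STORE`, `HALT`) and the memory layout are invented for this example and do not correspond to any real processor.

```python
def run(memory):
    """Run a toy accumulator machine whose program and data share one memory.

    Returns the number of transfers between the processor and memory.
    """
    pc = 0          # program counter: address of the next instruction
    acc = 0         # the processor's single register
    transfers = 0   # every fetch, load, or store is a trip to memory
    while True:
        op, addr = memory[pc]   # fetch the instruction from memory
        transfers += 1
        pc += 1
        if op == "LOAD":
            acc = memory[addr]
            transfers += 1
        elif op == "ADD":
            acc += memory[addr]
            transfers += 1
        elif op == "STORE":
            memory[addr] = acc
            transfers += 1
        elif op == "HALT":
            return transfers
        else:
            raise ValueError(f"unknown instruction: {op}")


memory = [
    ("LOAD", 4),    # address 0: load the first operand
    ("ADD", 5),     # address 1: add the second operand
    ("STORE", 6),   # address 2: write the result back to memory
    ("HALT", 0),    # address 3: stop
    2,              # address 4: first operand
    3,              # address 5: second operand
    0,              # address 6: result goes here
]
transfers = run(memory)
print(f"result: {memory[6]}, memory transfers: {transfers}")
```

Even this tiny addition needs seven trips between the processor and memory, which hints at why constant data movement becomes a bottleneck at scale.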

Neuromorphic Computing: Inspired by the Brain

- Structure: Neuromorphic computers mimic the structure and function of the human brain. They use artificial neurons (inspired by biological neurons) and connections (synapses) to process information.

- Data Processing: Information is encoded in the strength of connections between artificial neurons and transmitted through timed pulses (spikes) similar to the brain. This allows for more parallel processing.

- Strengths: Neuromorphic computing holds promise for excelling at tasks that are naturally difficult for traditional computers, such as image and pattern recognition, natural language processing, and real-time adaptation. It also has the potential to be more energy-efficient for specific tasks due to its parallel processing capabilities.

- Limitations: Neuromorphic computing is still in its early stages. Neuromorphic chips are often more complex and expensive to develop than traditional CPUs. Additionally, programming and training these systems can be more challenging due to their bio-inspired architecture.
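One way to get a feel for the energy argument is to count how much work an event-driven system skips. The Python sketch below compares a dense update, which touches every connection on every step, with an update that only processes neurons that actually spiked. It counts operations as a rough stand-in for energy, which is a simplification, and the 5% spike rate, network size, and `fan_out` are assumptions chosen for illustration.

```python
import random


def count_synaptic_operations(spike_trains, fan_out):
    """Compare the work of a dense update and an event-driven update.

    spike_trains: list of time steps, each a list of 0/1 values per input neuron.
    fan_out: number of downstream neurons each input neuron connects to.
    """
    dense_ops = 0
    event_ops = 0
    for step in spike_trains:
        dense_ops += len(step) * fan_out   # every connection is evaluated
        event_ops += sum(step) * fan_out   # only connections from neurons that spiked
    return dense_ops, event_ops


rng = random.Random(0)   # fixed seed so the example is repeatable
spike_trains = [
    [1 if rng.random() < 0.05 else 0 for _ in range(100)]   # 100 input neurons
    for _ in range(50)                                       # 50 time steps
]
dense_ops, event_ops = count_synaptic_operations(spike_trains, fan_out=10)
print(f"dense: {dense_ops} operations, event-driven: {event_ops} operations")
```

The sparser the spikes, the bigger the gap between the two counts. Real hardware savings depend on many other factors, so treat this as intuition rather than a benchmark.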

A Tale of Two Worlds: A Comparison Chart

Here’s a table summarizing the key differences between von Neumann and neuromorphic computing:

| Feature | Von Neumann Architecture | Neuromorphic Computing |
| --- | --- | --- |
| Structure | CPU separate from memory | Mimics brain structure (neurons & synapses) |
| Data Processing | Serial, binary digits (0s & 1s) | Parallel, spiking neural networks |
| Strengths | Structured, efficient for defined tasks | Potentially excels in pattern recognition, adaptation, lower energy consumption (for specific tasks) |
| Limitations | Less efficient for parallel tasks, high energy consumption (data movement) | Early stage, complex & expensive chips, challenging programming |

The Future of Computing: A Collaboration?

The choice between von Neumann and neuromorphic computing isn’t necessarily an either/or situation. The two approaches might find their niche in a collaborative future:

- Hybrid Systems: Combining traditional computers with neuromorphic chips could leverage the strengths of both, maximizing efficiency depending on the task at hand.

- Specialized Applications: Neuromorphic computing might excel in specific areas like real-time image processing or robotics, while traditional computers handle more general tasks.

A Brief History of Brainy Machines and Neuromorphic Computing:

Neuromorphic computing isn’t a new idea, but advancements in technology have fueled its recent surge. Here’s a detailed look at the historical milestones that paved the way for this exciting field, along with the key research conducted at each stage:

Early Inspirations (1930s-1950s):

1936: The Foundation of Computation: Alan Turing establishes the theoretical foundation for modern computers with his paper “On Computable Numbers,” which defines precisely what it means for a computation to be carried out by an algorithm. This paves the way for exploring alternative computational models inspired by biology.

1943: Warren McCulloch and Walter Pitts publish their groundbreaking paper, “A Logical Calculus of the Ideas Immanent in Nervous Activity,” laying the foundation for artificial neural networks (ANNs) as a model for information processing inspired by the brain.

1948: The Seeds of Neural Inspiration: Turing’s paper “Intelligent Machinery” delves into the possibility of building machines that mimic the cognitive processes of the brain. He proposes a model based on artificial neurons, laying the groundwork for future developments.

1949: Synaptic Plasticity and Learning: Canadian psychologist Donald Hebb makes a pivotal contribution by theorizing a connection between changes in synaptic strength and learning. This “Hebbian learning rule” remains a cornerstone of artificial neural networks.

The Rise and Fall of ANNs (1950s-1980s):

1950: The Turing Test: Turing proposes the Turing Test, a benchmark for measuring a machine’s ability to exhibit intelligent behavior equivalent to, or indistinguishable from, that of a human.

1958: The Perceptron: An Early Attempt: Frank Rosenblatt, working with U.S. Navy funding, introduces the perceptron, a neural network designed for image recognition. While limited by the understanding of the brain at the time, the perceptron is considered an early precursor to neuromorphic computing.

1960s: Early enthusiasm for ANNs leads to significant research efforts. In 1969, Marvin Minsky and Seymour Papert publish “Perceptrons,” highlighting the limitations of early ANN models and contributing to a decline in neural network research often associated with the “AI Winter.”

1980s: Researchers including David Rumelhart, Geoffrey Hinton, and Ronald Williams popularize backpropagation as a learning algorithm for multi-layer networks, reviving interest in ANNs.

The Dawn of Neuromorphic Computing (1980s-2000s):

1980s: The Birth of Neuromorphic Computing: Caltech professor Carver Mead emerges as a pioneer in the field. Inspired by the structure and function of the brain, he proposes the concept of neuromorphic computing and creates the first analog silicon retina and cochlea, showcasing the potential for mimicking biological information processing in hardware.

1989: Mead publishes his book “Analog VLSI Implementation of Neural Systems,” further solidifying the foundations of neuromorphic computing hardware.

1990s: Research focuses on memristors, electronic components that mimic the behavior of synapses, potentially enabling more realistic modeling of neural connections.

The Age of Acceleration (2000s-Present):

2000s: Advances in fabrication technology allow for the development of more complex neuromorphic chips.

2010s: Research intensifies on Spiking Neural Networks (SNNs), exploring the potential of using timed pulses for information processing similar to the brain.

2013: The Human Brain Project (HBP): An ambitious undertaking, the HBP aims to create a detailed computer model of the human brain. This project incorporates cutting-edge neuromorphic computing resources and aims to improve our understanding of the brain for advancements in medicine and technology.

2014: TrueNorth Neuromorphic Chip: Developed by IBM, the TrueNorth chip represents a significant step forward. This low-power neuromorphic chip boasts a million programmable spiking neurons and demonstrates the potential for efficient visual object recognition.

2018: Loihi Neuromorphic Chip: Intel’s Loihi chip supports roughly 130 million learnable synapses and is designed for applications like gesture recognition and robot control.

2020s-Present: Focus on scalability, energy efficiency, and developing new algorithms specifically tailored for neuromorphic architectures.

Neuromorphic Computing: A Stepping Stone on the Path to Artificial General Intelligence (AGI)?

Neuromorphic computing, with its brain-inspired architecture, is revolutionizing artificial intelligence (AI). But can it truly lead to Artificial General Intelligence (AGI) – a machine capable of human-level intelligence and understanding? This is a complex question at the heart of a rapidly evolving field.

The Quest for AGI: Can Neuromorphic Computing Bridge the Gap?

AGI represents a machine that can not only learn and adapt, but also reason, plan, and exhibit common sense. Here’s how neuromorphic computing might contribute, along with the remaining challenges:

Potential Benefits:

- Parallel Processing and Pattern Recognition: Neuromorphic chips excel at handling massive amounts of data simultaneously, mimicking the brain’s strength in pattern recognition. This could be crucial for AGI tasks like image understanding and natural language processing.

- Energy Efficiency for Long-Term Learning: Neuromorphic systems have the potential to be more energy-efficient for specific tasks compared to traditional computers. This could be advantageous for AGI systems that require continuous learning and adaptation.

- Emergent Properties: Some believe the complex interactions within large-scale neuromorphic networks might lead to unforeseen capabilities, potentially paving the way for new forms of intelligence.

Remaining Challenges:

- Explainability and Transparency: Neuromorphic systems can be complex “black boxes,” making it difficult to understand how they arrive at their decisions. This is a critical hurdle for achieving trust and ethical considerations in AGI.

- Scalability and Cost: Building large-scale neuromorphic systems that rival the human brain’s complexity is an ongoing challenge. Additionally, the cost of developing and implementing such systems remains high.

- The “Missing Ingredients”: While neuromorphic computing offers advantages, some argue that it may not address fundamental questions of consciousness, intentionality, and true understanding – key aspects of human-level intelligence.

The AGI Criteria: Has a Machine Arrived?

Several criteria are often used to gauge the presence of AGI:

- Reasoning and Judgment under Uncertainty: Can a machine analyze complex situations, weigh evidence, and make sound decisions even with incomplete information?

- Planning: Can it set goals, develop strategies, and adapt its plans as circumstances change?

- Learning: Can it continuously learn from experience and apply that knowledge to new situations?

- Natural Language Understanding: Can it comprehend the nuances of human language, including context and intent?

- Knowledge and Common Sense: Does it possess a vast knowledge base and the ability to apply common sense in reasoning and decision-making?

- Integration of Skills: Can it combine all these capabilities to achieve a specific goal in a complex environment?

So, has any machine achieved AGI? Currently, the answer is no. While advancements in AI are impressive, no system can truly replicate the full spectrum of human intelligence as defined by the AGI criteria.

The Ethical and Legal Labyrinth of Sentient Machines

The debate surrounding a sentient machine raises profound questions:

- Rights and Responsibilities: Imagine a world where machines become truly aware. Should they be granted rights, just like us? And who would be on the hook if a conscious AI made a mistake?

- Bias and Discrimination: AI systems inherit biases from their training data. How can we ensure fairness and prevent discrimination in an AGI system?

- Control and Safety: Who would control an AGI? How can we ensure its actions benefit humanity and avoid potential risks?

Instead of solely focusing on emulating the human brain, an exciting perspective suggests exploring alternative forms of intelligence inspired by neurological principles. Neuromorphic computing could be a stepping stone, not just for replicating human thought processes, but for unlocking entirely new forms of intelligent computation.

Applications of Neuromorphic Computing

Neuromorphic computing’s potential extends far beyond mimicking the brain for image recognition. Here’s a glimpse into some unique and exciting applications that could reshape various industries:

Brain-Computer Interfaces (BCIs) with Enhanced Empathy:

- Current Scenario: Existing BCIs allow basic communication and control for individuals with disabilities.

- Neuromorphic Approach: By incorporating neuromorphic chips that can better understand brain signals, BCIs could become more nuanced, potentially allowing for a deeper exchange of thoughts and emotions between humans and machines. Imagine a future where paralyzed individuals can not only control robotic limbs but also express their feelings and intentions more naturally.

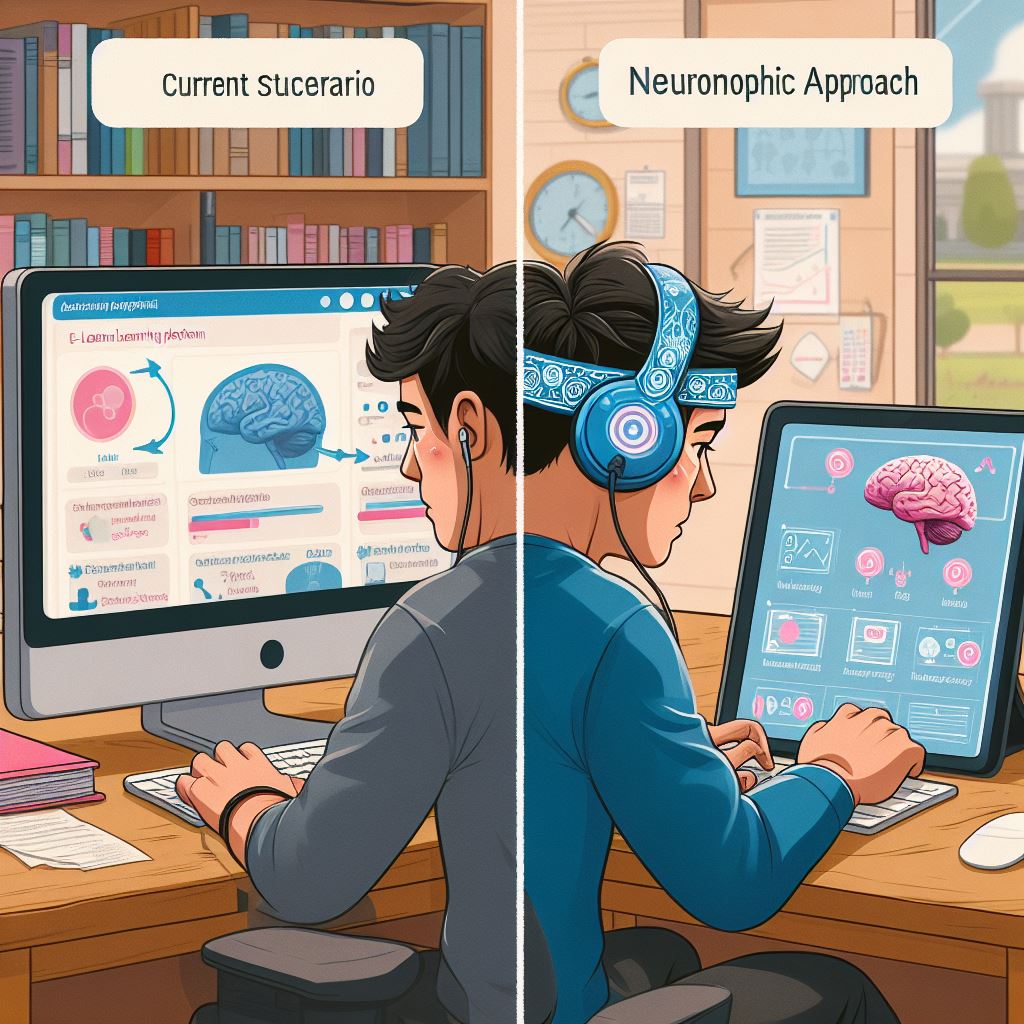

Personalized Learning with Adaptive Tutors:

- Current Scenario: E-learning platforms offer some level of personalization, but they often cannot adapt to individual learning styles in real time.

- Neuromorphic Approach: Neuromorphic chips could power intelligent tutoring systems that can analyze a student’s brain activity in real time, adjusting teaching styles and content delivery based on their cognitive strengths and weaknesses. This could revolutionize education by creating a truly personalized learning experience for each student.

Next-Gen Robotics with Enhanced Situational Awareness:

- Current Scenario: Robots excel at repetitive tasks in controlled environments, but struggle with dynamic situations requiring real-time adaptation.

- Neuromorphic Approach: Neuromorphic chips could equip robots with a more human-like ability to perceive and understand their surroundings. Imagine robots that can navigate complex environments, recognize and respond to unexpected situations, and even collaborate with humans seamlessly.

Drug Discovery with Biomimetic Simulations:

- Current Scenario: Drug discovery relies heavily on traditional lab experiments and animal testing, which can be slow and expensive.

- Neuromorphic Approach: Neuromorphic chips could be used to create highly detailed simulations of biological systems like the human brain or immune system. This could allow researchers to virtually test new drugs and therapies, accelerating the discovery process and reducing reliance on animal testing.

Artificial Creativity with Emergent Properties:

- Current Scenario: While AI can generate creative text formats, true artistic expression remains elusive.

- Neuromorphic Approach: The complex interactions within large-scale neuromorphic networks could lead to unforeseen capabilities. Imagine AI systems that can not only mimic existing art forms but also generate entirely new creative expressions, pushing the boundaries of human imagination.

A World of Possibilities: Beyond the Obvious

These are just a few examples of the unique potential applications of neuromorphic computing. As the field evolves, we can expect even more groundbreaking applications to emerge in various sectors, from healthcare and finance to entertainment and scientific discovery. The key takeaway? Don’t limit your vision of neuromorphic computing to simply replicating the brain for pattern recognition tasks. The true power lies in harnessing its principles to unlock entirely new possibilities in artificial intelligence.

The Future of Brainy Computing:

Neuromorphic computing holds immense promise for revolutionizing AI, but significant hurdles remain. To overcome these challenges and unlock the true potential of “brainy computing,” a collaborative approach is paramount. Here’s why interdisciplinary teamwork is crucial for the future of this exciting field:

The Symphony of Expertise: Why Collaboration Matters

Neuromorphic computing lies at the intersection of multiple disciplines:

Neuroscience: Understanding the intricate workings of the brain – its structure, function, and learning mechanisms – provides the blueprint for designing artificial neurons and communication pathways in neuromorphic chips.

- Neuroscientists play a vital role in deciphering the brain’s computational principles and translating those insights into actionable design specifications.

Computer Science: Developing efficient algorithms and software is essential for training and optimizing artificial neural networks on neuromorphic hardware.

- Computer scientists design novel learning algorithms specifically tailored to the unique architecture of neuromorphic chips, enabling them to learn and adapt effectively.

Engineering: Bridging the gap between biological and computational models requires translating those models into real-world hardware.

- Engineers create and fabricate neuromorphic chips that are not only energy-efficient and scalable but also capable of implementing complex neural network architectures inspired by the brain.

Emerging Research Areas: A Glimpse into the Future

By fostering collaboration between these disciplines, exciting research areas within neuromorphic computing are coming to light:

Neuromorphic Hardware Advancements:

- Material Science: Exploring novel materials with properties mimicking biological synapses could lead to more efficient and realistic neuromorphic devices.

- Brain-inspired Architectures: Developing new chip architectures that go beyond simply mimicking the brain’s structure, potentially leading to entirely new forms of neuromorphic computation.

New Learning Algorithms:

- Spiking Neural Network (SNN) Training: Designing algorithms that effectively train SNNs to utilize timed pulses for information processing, mimicking the brain’s natural communication method.

- Unsupervised Learning: Developing algorithms for unsupervised learning on neuromorphic hardware, allowing these systems to learn and adapt without the need for large amounts of labeled training data.
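One widely studied rule that touches both ideas above is spike-timing-dependent plasticity (STDP): it adjusts a connection based purely on the relative timing of spikes, with no labels required. The article doesn’t name STDP, so treat this Python sketch as one illustrative option rather than the method used on any specific chip. The constants `a_plus`, `a_minus`, and `tau` are assumed values, and spike times are in milliseconds.

```python
import math


def stdp_delta(dt, a_plus=0.05, a_minus=0.055, tau=20.0):
    """Weight change for one pair of spikes, where dt = t_post - t_pre (ms)."""
    if dt > 0:    # sender fired just before receiver: strengthen
        return a_plus * math.exp(-dt / tau)
    if dt < 0:    # sender fired after receiver: weaken
        return -a_minus * math.exp(dt / tau)
    return 0.0


def apply_stdp(weight, pre_spikes, post_spikes, w_min=0.0, w_max=1.0):
    """Apply the pairwise rule to every pre/post spike pair, then clamp the weight."""
    for t_pre in pre_spikes:
        for t_post in post_spikes:
            weight += stdp_delta(t_post - t_pre)
    return min(w_max, max(w_min, weight))


# Sender consistently fires 5 ms before the receiver: the connection grows.
causal = apply_stdp(0.5, pre_spikes=[10.0, 50.0], post_spikes=[15.0, 55.0])
# Sender consistently fires 5 ms after the receiver: the connection shrinks.
anti_causal = apply_stdp(0.5, pre_spikes=[15.0, 55.0], post_spikes=[10.0, 50.0])
print(f"causal: {causal:.3f}, anti-causal: {anti_causal:.3f}")
```

Because the update depends only on locally observed spike times, rules of this kind are a natural fit for hardware where each synapse has to adjust itself without a central controller.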

A Collaborative Future: Beyond Mimicry

The future of neuromorphic computing isn’t just about replicating the brain; it’s about harnessing its principles to create entirely new forms of intelligent computation. Collaboration between neuroscientists, computer scientists, and engineers will be the driving force behind this endeavor:

- Neuroscientists will continue to unravel the mysteries of the brain, providing deeper inspiration for novel computational models.

- Computer scientists will push the boundaries of learning algorithms, enabling neuromorphic systems to tackle increasingly complex tasks.

- Engineers will translate these advancements into powerful and efficient neuromorphic hardware, bridging the gap between theory and practice.

By working together, this interdisciplinary team holds the key to unlocking the true potential of neuromorphic computing, paving the way for a future where brain-inspired intelligence transforms our world.

Conclusion: Bridging the Gap with Brainy Computing

Neuromorphic computing stands on the cusp of a revolution, offering a glimpse into a future where machines can not only process information, but also learn, adapt, and potentially even exhibit a form of intelligence more akin to the human brain. While challenges remain, the potential rewards are immense.

Beyond Mimicry, a New Era of Intelligence

This isn’t simply about building machines that mimic the brain; it’s about harnessing the power of neural principles to explore entirely new forms of intelligent computation. Imagine machines that excel at tasks where traditional computers struggle – real-time pattern recognition, intuitive decision-making, and even creative problem-solving. Neuromorphic computing has the potential to bridge the gap between the rigid logic of machines and the flexible, adaptable intelligence of the human brain.

Fueling the Future: Your Role in the Journey

The future of neuromorphic computing hinges on a collaborative effort. If you’re fascinated by artificial intelligence, neuroscience, or simply the potential of technology to push boundaries, here’s your call to action:

- Explore Further: Delve deeper into this exciting field. Learn about the latest research, explore educational resources, and engage in discussions with others who share your curiosity.

- Consider the Ethics: As this technology evolves, it’s crucial to consider the ethical implications. How can we ensure AI is developed and used responsibly? Join the conversation and advocate for responsible AI development.

- Be a Part of the Solution: Whether you’re a student, researcher, or simply an interested individual, there are ways to contribute. Advocate for funding in neuromorphic research, support educational initiatives, or even consider pursuing a career in this rapidly growing field.

Neuromorphic computing is not just about technological advancements; it’s about shaping a future where machines augment our intelligence and capabilities, working alongside us to address humanity’s greatest challenges. The journey has just begun, and your participation can help shape its course.
