Introduction to Text Embeddings

In the world of Natural Language Processing (NLP), text embeddings have revolutionized how machines understand and process human language. These embeddings are dense, numerical representations of words, phrases, or even entire documents that capture semantic meaning and relationships. Unlike traditional techniques such as TF-IDF, which represent text by weighted word counts, text embeddings encode the context and similarity between words.

For example, the words “cat” and “feline” tend to lie close together in the vector space because their meanings are similar, while “cat” and “dog” are related but sit slightly farther apart. This level of nuance is crucial for applications such as AI chatbots, sentiment analysis, and semantic search.
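To make this idea of closeness concrete, here is a toy sketch that ranks words by cosine similarity. The 3-dimensional vectors are invented for illustration (real models produce hundreds of dimensions), and the extra word “car” is a hypothetical contrast case, not output from any real model.

```python
import math

# Hypothetical 3-dimensional vectors chosen by hand for illustration only;
# real models such as BAAI/bge-small-en-v1.5 produce hundreds of dimensions.
toy_vectors = {
    "cat": [0.90, 0.80, 0.10],
    "feline": [0.88, 0.82, 0.12],
    "dog": [0.75, 0.60, 0.30],
    "car": [0.10, 0.05, 0.95],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def neighbours(word, vectors):
    """Rank every other word by cosine similarity to `word`, closest first."""
    query = vectors[word]
    scores = {w: cosine_similarity(query, v) for w, v in vectors.items() if w != word}
    return sorted(scores, key=scores.get, reverse=True)


print(neighbours("cat", toy_vectors))  # → ['feline', 'dog', 'car']
```

With these made-up vectors, “feline” ranks closest to “cat”, “dog” comes next, and the unrelated “car” is far behind, mirroring the ordering described above.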

Enter FastEmbed, a lightweight embedding library that is changing how text vectors are created and used. Designed for efficiency, FastEmbed offers developers and researchers a faster, less resource-hungry alternative to bulkier frameworks like Transformers. Because it slots easily into existing NLP pipelines, it is a strong choice for efficient text embeddings across many applications.

What is FastEmbed?

At its core, FastEmbed is an NLP embedding library built to create lightweight embeddings quickly and accurately. By default it uses the BAAI/bge-small-en-v1.5 model, which produces high-quality text embeddings while keeping computational requirements low.

How Does FastEmbed Stand Out?

Traditional frameworks like Sentence Transformers are powerful but resource-heavy, and often overkill for applications that need quick, scalable solutions. FastEmbed offers a streamlined approach that emphasizes efficiency without giving up much in quality.

Imagine running a search engine for an e-commerce platform. With Sentence Transformers, every query may require significant processing power, increasing operational costs. With FastEmbed, similar results can often be achieved with lower latency, making it a more practical choice.

Key Features of FastEmbed

- Compact and Lightweight: Its small architecture is ideal for edge computing and smaller-scale tasks.

- Exceptional Speed: FastEmbed processes data quickly by using resources efficiently, which makes for a smoother user experience.

- Wide Usability: It fits easily into existing NLP pipelines, so developers can apply it to search systems, chatbots, and recommendation engines.

Comparison: FastEmbed vs Transformers

While Transformers shine in complex scenarios, they can bring high infrastructure costs and slowdowns. FastEmbed, on the other hand, balances performance and cost-efficiency. For example, it supports efficient text vectorization in real-time systems, which makes it attractive for industries like retail and healthcare.

By understanding FastEmbed’s definition and key features, businesses can adopt this tool to make their NLP pipelines and other AI-driven processes more efficient.

Why Use FastEmbed?

In the rapidly evolving field of Natural Language Processing (NLP), FastEmbed stands out for its combination of speed, efficiency, and versatility. Here’s why this lightweight embedding library is gaining traction across industries:

Key Advantages of FastEmbed

- Speed and Resource Efficiency

FastEmbed prioritizes computational efficiency while keeping accuracy high. Compared with resource-intensive models such as Sentence Transformers, it can generate high-quality text embeddings in less time, making it well suited to applications that require real-time data processing.

For instance, in recommendation systems, where latency affects user experience, FastEmbed reduces processing delays while maintaining robust semantic understanding.

- Lightweight Architecture

One of FastEmbed’s benefits is its design tailored for low-resource environments. It doesn’t require extensive computational infrastructure, making it accessible to developers working on edge devices or in resource-constrained settings.

A practical use case is deploying FastEmbed in mobile applications, where traditional models might be too heavy to run effectively.

- Seamless Integration

FastEmbed is designed to integrate easily into existing NLP pipelines. Developers can quickly adopt it for tasks like semantic search, chatbots, and document categorization, and its compatibility with common machine learning libraries keeps deployment overhead low.

Notable Use Cases

Search Optimization

One key FastEmbed use case is powering search systems that understand context and user intent. By embedding text queries and documents into a shared vector space, it can return relevant results even for ambiguous queries.

For example, in e-commerce, FastEmbed can match product descriptions with user queries more effectively, enhancing the customer experience.
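A minimal sketch of shared-vector-space search: the query and the documents are embedded with the same model, and documents are ranked by cosine similarity to the query. It assumes FastEmbed's `TextEmbedding` class with the BAAI/bge-small-en-v1.5 model; `embed_fn` is a hypothetical hook so any embedding function (or a test stub) can be plugged in.

```python
import math
from functools import lru_cache
from typing import Callable, List, Sequence, Tuple

Vector = Sequence[float]


@lru_cache(maxsize=1)
def _load_model(model_name: str = "BAAI/bge-small-en-v1.5"):
    # Imported lazily so the rest of the module works without fastembed installed.
    from fastembed import TextEmbedding

    return TextEmbedding(model_name=model_name)


def fastembed_embed(texts: List[str]) -> List[Vector]:
    """Embed a batch of texts; FastEmbed yields one NumPy array per text."""
    return [list(vector) for vector in _load_model().embed(texts)]


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0  # treat zero vectors as unrelated


def semantic_search(
    query: str,
    documents: List[str],
    embed_fn: Callable[[List[str]], List[Vector]] = fastembed_embed,
    k: int = 3,
) -> List[Tuple[float, str]]:
    """Return the k documents most similar to the query, best first."""
    vectors = embed_fn([query] + documents)
    query_vec, doc_vecs = vectors[0], vectors[1:]
    scored = [(cosine(query_vec, vec), doc) for vec, doc in zip(doc_vecs, documents)]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]
```

This version embeds the query and documents in one batch for simplicity; in production you would embed the catalog once, store the vectors, and embed only the incoming query.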

Recommendation Systems

Modern recommendation engines rely on understanding user preferences through natural language. FastEmbed simplifies this by encoding user reviews, search queries, and item descriptions into embeddings that reveal patterns and correlations.

In industries like streaming services or online retail, FastEmbed helps businesses improve personalized recommendations while keeping computational costs low.

Semantic Analysis

From sentiment analysis to summarization, FastEmbed’s embeddings help extract meaningful insights from textual data, allowing organizations to analyze customer feedback or market trends efficiently.

For example, healthcare companies can use FastEmbed to analyze patient feedback, identifying areas for improvement or satisfaction trends across services.

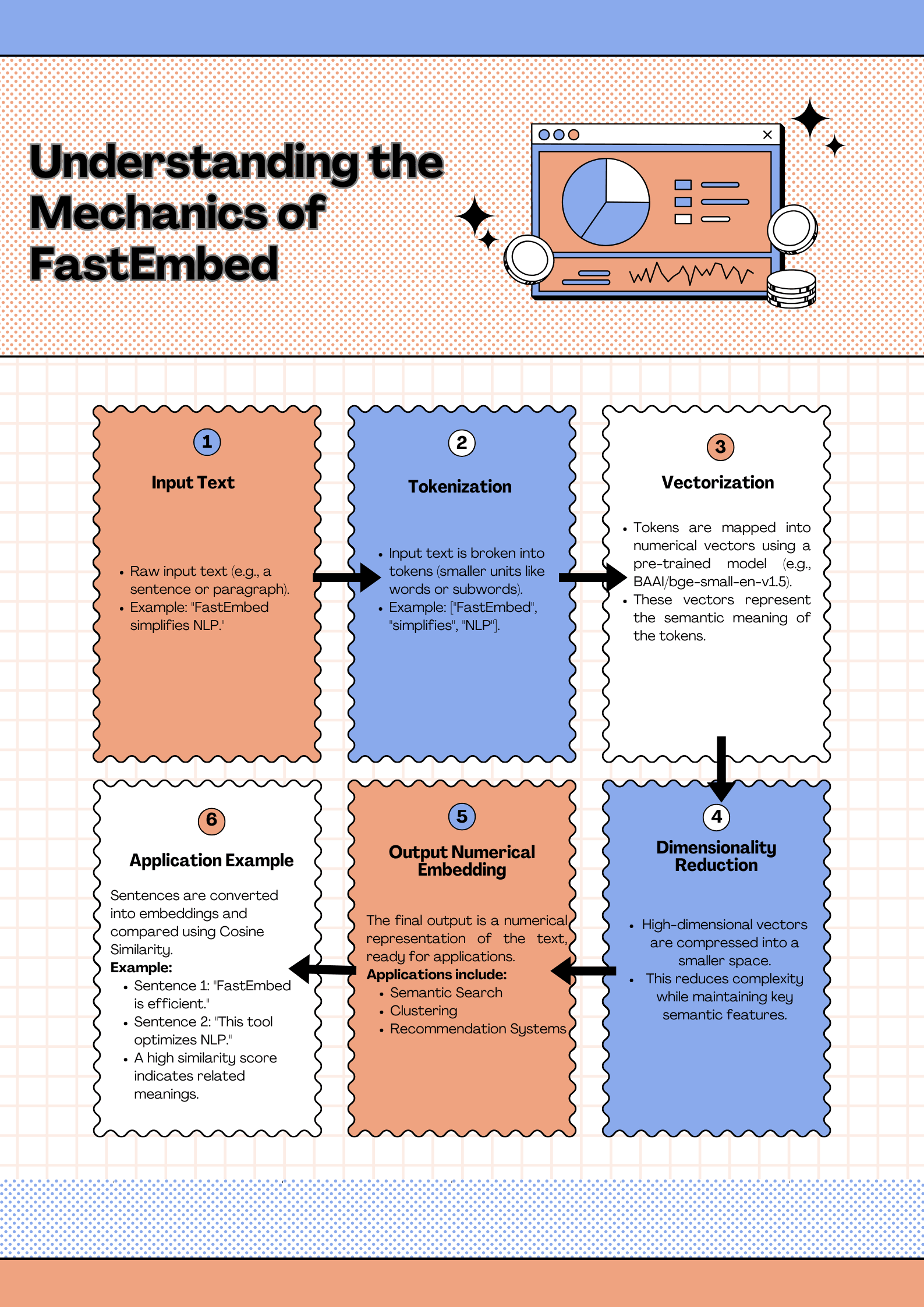

How Does FastEmbed Work?

Understanding the mechanics of FastEmbed shows why it is such an efficient and versatile tool for text vectorization. By pairing compact pretrained models with a lightweight architecture, FastEmbed streamlines the embedding process while keeping performance high.

Overview of the Embedding Process

By default, FastEmbed uses the BAAI/bge-small-en-v1.5 model, a pretrained model optimized for generating high-quality text embeddings. The process starts by tokenizing the input text into smaller units (words or subwords). These tokens then pass through the model’s layers, which convert them into dense numerical vectors representing their semantic meaning.

FastEmbed focuses on efficiency by running its models with ONNX Runtime instead of a full deep-learning framework such as PyTorch, which keeps dependencies small and CPU inference fast. This makes it faster than resource-heavy setups like Sentence Transformers in many scenarios while maintaining comparable accuracy.

Step-by-Step Process

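- Tokenization: The input text is broken into smaller, manageable tokens. For example, in “FastEmbed simplifies NLP,” a subword tokenizer may split a rare word like “FastEmbed” into several pieces, while “simplifies” and “NLP” may stay whole or be split further, depending on the model’s vocabulary.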

- Vectorization: The model processes these tokens and maps them into a shared vector space, where words with similar meanings have closely aligned embeddings.

- Pooling and Normalization: The per-token vectors are pooled into a single fixed-size vector (384 dimensions for BAAI/bge-small-en-v1.5), which is typically normalized to unit length, keeping the representation compact while preserving key semantic information.

- Output: The final embedding is a numerical representation of the text, ready for applications like semantic search, clustering, or recommendation systems.
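FastEmbed handles these steps internally, so the sketch below only illustrates the general technique rather than FastEmbed's own code. It shows two common ways to turn per-token vectors into one sentence vector: taking the first ([CLS]) token's vector, which is how BGE models are usually pooled, or averaging all token vectors (mean pooling), followed by L2 normalization. The token vectors are made-up numbers.

```python
import math
from typing import List

# Hypothetical per-token vectors for a 3-token input (4 dimensions each).
# A real model such as BAAI/bge-small-en-v1.5 outputs 384 dimensions per token.
token_vectors: List[List[float]] = [
    [0.2, 0.4, 0.0, 0.4],  # position 0: the [CLS]-style summary token
    [0.6, 0.0, 0.2, 0.2],
    [0.1, 0.5, 0.3, 0.1],
]


def cls_pool(vectors: List[List[float]]) -> List[float]:
    """Use the first token's vector as the sentence vector."""
    return list(vectors[0])


def mean_pool(vectors: List[List[float]]) -> List[float]:
    """Average each dimension across all tokens."""
    count = len(vectors)
    return [sum(column) / count for column in zip(*vectors)]


def l2_normalize(vector: List[float]) -> List[float]:
    """Scale to unit length so a plain dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector] if norm else list(vector)


sentence_vector = l2_normalize(cls_pool(token_vectors))
mean_vector = l2_normalize(mean_pool(token_vectors))
```

Normalizing is what makes the final vector convenient downstream: once every vector has unit length, comparing two texts reduces to a dot product.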

Example of Generating Embeddings

Consider a simple use case: generating embeddings for two sentences to compare their semantic similarity:

- Input:

- “FastEmbed is efficient.”

- “This tool optimizes NLP.”

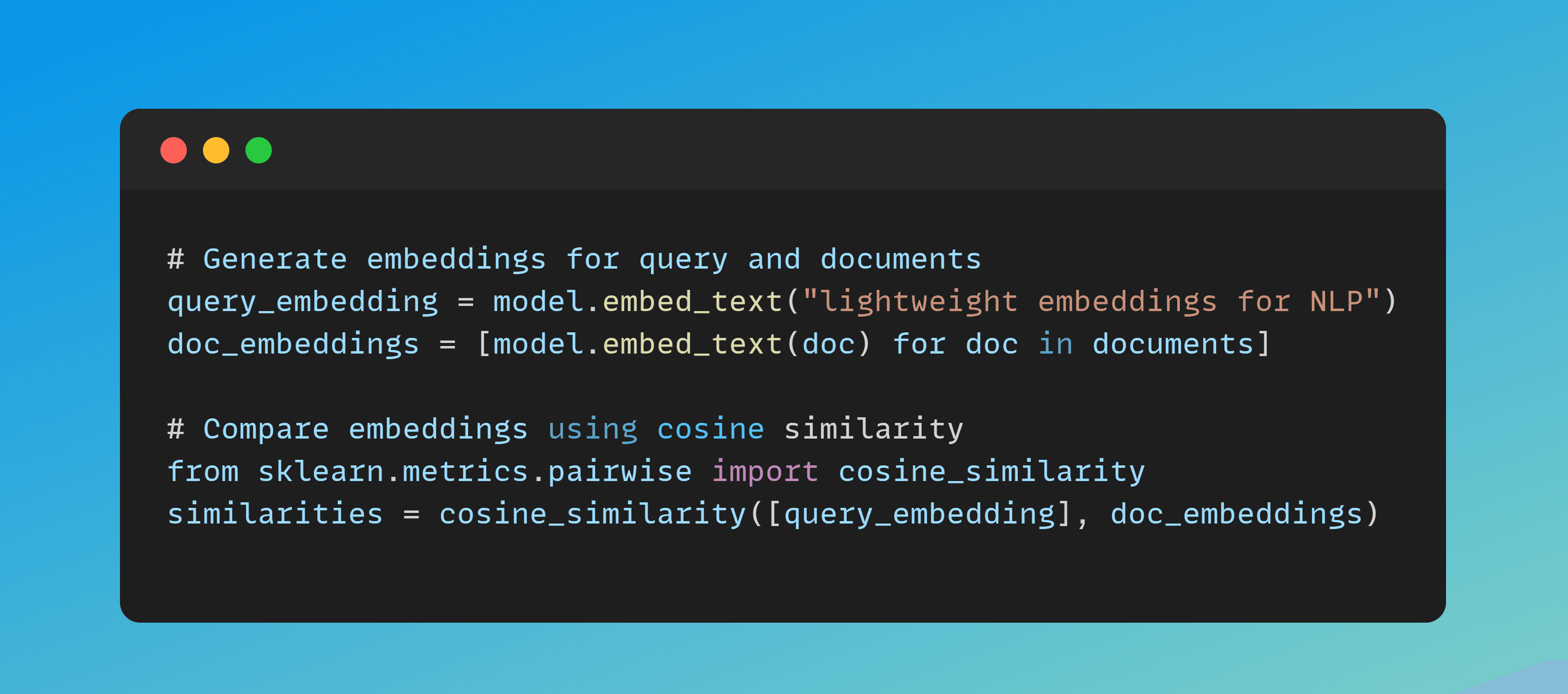

Using FastEmbed, both sentences are converted into embeddings. Calculating the cosine similarity between the two vectors then measures how closely related their meanings are. A high similarity score indicates that the sentences convey similar ideas.
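A minimal sketch of that comparison, assuming FastEmbed's `TextEmbedding` class and the BAAI/bge-small-en-v1.5 model. The `embed_fn` parameter is a hypothetical hook added so the similarity logic can be exercised without downloading the model.

```python
import math
from functools import lru_cache
from typing import Callable, List, Sequence

Vector = Sequence[float]


@lru_cache(maxsize=1)
def _load_model(model_name: str = "BAAI/bge-small-en-v1.5"):
    # Lazy import: the model is only loaded on first real use.
    from fastembed import TextEmbedding

    return TextEmbedding(model_name=model_name)


def fastembed_embed(texts: List[str]) -> List[Vector]:
    """Embed texts with FastEmbed; embed() yields one NumPy array per text."""
    return [list(vector) for vector in _load_model().embed(texts)]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """dot(a, b) / (|a| * |b|); mathematically in the range [-1, 1]."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def sentence_similarity(
    first: str,
    second: str,
    embed_fn: Callable[[List[str]], List[Vector]] = fastembed_embed,
) -> float:
    """Embed both sentences in one batch and compare them."""
    vec_a, vec_b = embed_fn([first, second])
    return cosine_similarity(vec_a, vec_b)
```

Calling `sentence_similarity("FastEmbed is efficient.", "This tool optimizes NLP.")` returns a single score. Absolute values differ from model to model, so the score is most informative when compared with the scores of clearly unrelated sentence pairs.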

Visualization of Embeddings

To better understand embeddings, you can visualize them with tools like t-SNE or UMAP, which project high-dimensional embeddings into a 2D or 3D space. For example:

- Cluster 1: Words like “cat,” “dog,” and “animal” might form a tight cluster, indicating similar semantic meanings.

- Cluster 2: Words like “fast,” “efficient,” and “optimized” might form another, highlighting technical or performance-related concepts.

Such visualizations help developers and researchers see how well FastEmbed captures relationships between different pieces of text.

Key Features of FastEmbed’s Architecture

- Pre-trained for Versatility: With BAAI/bge-small-en-v1.5 as its default backbone, FastEmbed delivers robust embeddings suitable for a wide range of NLP tasks.

- Lightweight and Adaptable: The pretrained models handle diverse datasets without requiring significant customization.

- Focus on Semantic Integrity: FastEmbed aims to produce embeddings that are both compact and contextually rich, making them well suited to real-world applications.

Applications of FastEmbed

FastEmbed’s efficiency in generating text embeddings makes it valuable across industries, especially where speed and resource efficiency are critical. Below are real-world applications that illustrate its potential.

Search Engines and Information Retrieval

Search engines rely on semantic understanding to go beyond basic keyword matching. FastEmbed enhances these systems by enabling semantic search, so that results align better with user intent.

Example:

When a user searches for “eco-friendly car options,” traditional engines may focus on pages that literally mention “eco-friendly” and “car.” FastEmbed’s embeddings capture context instead, so pages about electric or hybrid vehicles can rank highly even when they don’t use the exact phrase.

In academic and enterprise contexts, this technology can sift through vast document databases, identifying those most relevant to a nuanced query, such as research articles on “renewable energy in arid climates.”

AI Chatbots and Virtual Assistants

FastEmbed can power intelligent virtual assistants by giving them a contextual understanding of user queries. This helps keep responses precise and aligned with the conversation’s context.

Customer Support:

A chatbot that uses FastEmbed embeddings can distinguish between queries like “issue with my laptop” and “need advice on buying a laptop,” delivering tailored responses to each.

Healthcare:

In telehealth platforms, FastEmbed can help interpret patient descriptions such as “persistent fatigue” or “recurring migraines,” guiding patients toward relevant resources or a consultation.

Recommendation Systems

Personalization is key to modern recommendation systems, and FastEmbed helps by embedding user preferences and product descriptions in a shared semantic space.

E-commerce:

FastEmbed can analyze a user’s search history for items like “affordable workout gear” and recommend budget-friendly fitness products.

Streaming Platforms:

Based on a user’s viewing habits, the system can suggest content with similar themes or genres, such as recommending documentaries on sustainability after a user watches a film on climate change.
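One simple way to realize this, sketched under the assumption that FastEmbed's `TextEmbedding` with BAAI/bge-small-en-v1.5 does the embedding: average the embeddings of a user's past searches or viewed items into a profile vector, then recommend unseen catalog items whose embeddings are closest to that profile. The averaging strategy and the `recommend` helper are illustrative choices, not FastEmbed features.

```python
import math
from functools import lru_cache
from typing import Callable, List, Sequence

Vector = Sequence[float]


@lru_cache(maxsize=1)
def _load_model(model_name: str = "BAAI/bge-small-en-v1.5"):
    from fastembed import TextEmbedding  # lazy import; needs `pip install fastembed`

    return TextEmbedding(model_name=model_name)


def fastembed_embed(texts: List[str]) -> List[Vector]:
    return [list(vector) for vector in _load_model().embed(texts)]


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def recommend(
    history: List[str],
    catalog: List[str],
    embed_fn: Callable[[List[str]], List[Vector]] = fastembed_embed,
    k: int = 3,
) -> List[str]:
    """Rank unseen catalog items by similarity to the mean history embedding."""
    if not history:
        raise ValueError("history must contain at least one item")
    vectors = embed_fn(history + catalog)
    history_vecs, item_vecs = vectors[: len(history)], vectors[len(history):]
    # The user profile is the average of everything they searched for or watched.
    profile = [sum(column) / len(history_vecs) for column in zip(*history_vecs)]
    seen = set(history)
    scored = [
        (cosine(profile, vec), item)
        for vec, item in zip(item_vecs, catalog)
        if item not in seen  # don't recommend what the user already has
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [item for _, item in scored[:k]]
```

Averaging is the simplest profile; weighting recent activity more heavily is a common refinement.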

Hybrid Search Systems

In hybrid search systems, which combine semantic search with traditional lexical (keyword) search, FastEmbed bridges the gap by supplying the embeddings that let both approaches work together.

Example:

Consider a legal firm searching for precedents related to “contractual breach.” FastEmbed identifies relevant cases even when they are phrased differently, such as “violation of contractual terms,” which can improve recall while keyword matching keeps precision high.
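How the lexical and semantic result lists are merged isn't specified here. One widely used option is reciprocal rank fusion (RRF), sketched below: each document earns 1 / (k + rank) from every list it appears in, so documents ranked well by either method rise to the top. The case IDs are hypothetical, and k = 60 is a commonly used default rather than a FastEmbed setting.

```python
from collections import defaultdict
from typing import Dict, List, Sequence


def reciprocal_rank_fusion(rankings: Sequence[Sequence[str]], k: int = 60) -> List[str]:
    """Merge several ranked lists of document IDs into one list, best first."""
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Sort by descending score; break ties alphabetically for a stable order.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Hypothetical results for the query "contractual breach".
lexical_hits = ["breach_2019", "breach_2021"]  # exact keyword matches
semantic_hits = ["violation_of_terms_2020", "breach_2019"]  # ranked by embedding similarity

fused = reciprocal_rank_fusion([lexical_hits, semantic_hits])
# → ['breach_2019', 'violation_of_terms_2020', 'breach_2021']
```

Because RRF uses only ranks, it avoids having to put keyword scores and cosine similarities on a common scale, and the differently phrased “violation of terms” case still surfaces near the top.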

Industry-Specific Use Cases

FastEmbed offers unique solutions tailored to specific sectors:

- Healthcare: Assisting in clinical research by identifying similar case studies or symptoms across large medical datasets.

- E-commerce: Streamlining product discovery through semantic tagging and retrieval.

- Education: Enhancing learning platforms by matching queries to the most relevant course materials.

Because the same embedding pipeline can be applied to domain-specific terminology and use cases, teams can build customized applications for niche industries.

Comparison: FastEmbed vs Other Embedding Models

When evaluated against frameworks like Transformers or Sentence Transformers, FastEmbed stands out for its lightweight architecture, ease of use, and efficiency. Let’s look more closely at how it compares across critical dimensions.

Performance and Resource Efficiency

- FastEmbed: Prioritizes speed and low resource consumption, making it ideal for environments with limited computational power.

- Transformers: Typically need substantial computational resources, often including GPUs, for optimal performance, making them less accessible for smaller projects.

For example, indexing a large e-commerce database with FastEmbed can take less time and energy than with Sentence Transformers, particularly on CPU-only hardware.

Accuracy and Semantic Understanding

While Transformers excel at achieving state-of-the-art accuracy, FastEmbed strikes a balance between performance and efficiency, delivering high-quality results without the computational overhead.

- FastEmbed: Ideal for use cases like semantic search or recommendation systems, where slight trade-offs in accuracy are acceptable for faster processing.

- Transformers: Better suited for research-heavy or critical tasks that demand the highest possible accuracy, such as medical diagnostics.

Scalability

FastEmbed scales well across large datasets and diverse domains.

- Example: In a global news aggregation system, it can categorize millions of articles into meaningful clusters for regional audiences faster than its counterparts.

By contrast, scaling Transformers to the same volume usually requires a proportionally larger, often GPU-based, compute budget.
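Clustering is a step applied after embedding rather than something FastEmbed does itself. As a minimal illustration, the plain k-means below groups vectors by squared Euclidean distance; on L2-normalized embeddings this ordering matches cosine similarity, because ||a - b||^2 = 2 - 2 cos(a, b). For millions of articles, an optimized implementation such as scikit-learn's `MiniBatchKMeans` would be the more realistic choice.

```python
import random
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def squared_distance(a: Vector, b: Vector) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(
    vectors: List[Vector], k: int, iterations: int = 20, seed: int = 0
) -> Tuple[List[int], List[List[float]]]:
    """Plain k-means: returns a cluster index per vector and the final centroids."""
    if not 0 < k <= len(vectors):
        raise ValueError("k must be between 1 and the number of vectors")
    rng = random.Random(seed)
    centroids = [list(v) for v in rng.sample(vectors, k)]  # start from k random points
    assignments: List[int] = []
    for _ in range(iterations):
        # Assignment step: each vector joins its nearest centroid.
        new_assignments = [
            min(range(k), key=lambda c: squared_distance(v, centroids[c])) for v in vectors
        ]
        if new_assignments == assignments:
            break  # converged: no vector changed cluster
        assignments = new_assignments
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [v for v, a in zip(vectors, assignments) if a == c]
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return assignments, centroids
```

In a news-aggregation setting, the input would be the article embeddings, and each resulting cluster index groups articles on a similar topic.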

Customizability

FastEmbed offers an excellent mix of pre-trained capabilities and adaptability.

- Sentence Transformers: Deliver pre-trained models that work out-of-the-box but often require fine-tuning for domain-specific applications.

- FastEmbed: Allows developers to integrate and customize embeddings with minimal overhead, ensuring rapid deployment.

Real-World Performance Metrics

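- FastEmbed: Reported to generate embeddings up to 2–3 times faster than Transformers in some setups, making it a strong choice for real-time applications like AI chatbots and live recommendation engines. The actual gain depends on hardware, batch size, and model; the per-document timings in the comparison table below show a much smaller margin.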

- Sentence Transformers: Offer slightly better embedding quality but at the cost of latency and resource consumption.

Cost-Benefit Analysis

For businesses seeking high-quality embeddings without a significant hardware investment, FastEmbed offers a strong cost-to-performance ratio. A startup implementing a semantic search engine, for instance, can deploy FastEmbed on CPUs without expensive GPUs, saving costs while maintaining good quality.

Comparative Analysis

Here’s a detailed comparison of FastEmbed and HuggingFace Sentence Transformers across various performance and resource metrics. The comparison highlights their efficiency and suitability for specific use cases:

| Feature | FastEmbed | HuggingFace Sentence Transformers |
|---|---|---|
| Model Size | Smaller, lightweight models (supports quantization for CPUs and edge devices) | Larger models, often require GPUs for optimal performance |
| Dependencies | Minimal dependencies; can operate without CUDA or PyTorch | Requires dependencies like PyTorch and CUDA for GPU acceleration |
| Ease of Setup | Easy and fast setup with reduced installation size | Installation size increases with additional libraries |
| Embedding Time (per doc) | Average: 0.0438s, Max: 0.0565s, Min: 0.0429s | Average: 0.0471s, Max: 0.0658s, Min: 0.0431s |
| Resource Efficiency | Designed for CPU and low-memory environments; supports AWS Lambda deployments | Optimized for high-performance GPUs with larger RAM requirements |
| Versatility | Suited for real-time applications in constrained environments | Best for high-complexity tasks and bulk processing on powerful setups |
| Accuracy | Competitive embeddings using quantized models | High accuracy with a broader range of pretrained models |
| Scaling Options | Supports efficient scaling in edge AI and serverless platforms | Better for centralized, high-throughput systems |
| Use Cases | Lightweight text embedding generation, edge deployments, resource-limited applications | Advanced NLP tasks, large-scale data processing, and deep model customization |
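The table doesn't state how the per-document timings were measured. To produce comparable numbers on your own hardware, a sketch like the one below times one document at a time and reports the average, maximum, and minimum. It assumes FastEmbed's `TextEmbedding.embed` and Sentence Transformers' `SentenceTransformer.encode`, both loaded lazily with the same BAAI/bge-small-en-v1.5 model; results will vary with hardware, model, and text length, and a warm-up call keeps model loading out of the timings.

```python
import statistics
import time
from functools import lru_cache
from typing import Callable, Dict, List

MODEL_NAME = "BAAI/bge-small-en-v1.5"


@lru_cache(maxsize=1)
def _fastembed_model():
    from fastembed import TextEmbedding  # pip install fastembed

    return TextEmbedding(model_name=MODEL_NAME)


@lru_cache(maxsize=1)
def _sentence_transformer_model():
    from sentence_transformers import SentenceTransformer  # pip install sentence-transformers

    return SentenceTransformer(MODEL_NAME)


def fastembed_embed_one(doc: str):
    # embed() returns a generator; take the single vector it yields.
    return next(iter(_fastembed_model().embed([doc])))


def sentence_transformers_embed_one(doc: str):
    return _sentence_transformer_model().encode(doc)


def time_per_document(documents: List[str], embed_one: Callable[[str], object]) -> Dict[str, float]:
    """Embed documents one by one and summarize wall-clock seconds per document."""
    if not documents:
        raise ValueError("need at least one document")
    embed_one(documents[0])  # warm-up: loads the model so it isn't counted
    timings = []
    for doc in documents:
        start = time.perf_counter()
        embed_one(doc)
        timings.append(time.perf_counter() - start)
    return {"average": statistics.mean(timings), "max": max(timings), "min": min(timings)}
```

Running `time_per_document(docs, fastembed_embed_one)` and `time_per_document(docs, sentence_transformers_embed_one)` on the same machine and document set gives directly comparable summaries.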

Key Takeaways:

- FastEmbed excels in scenarios requiring agility, low computational overhead, and real-time text processing in constrained environments. It’s particularly useful for edge AI and serverless deployments.

- HuggingFace Sentence Transformers are ideal for complex NLP tasks where precision and scalability are crucial, supported by their expansive ecosystem and pretrained model diversity.

Getting Started with FastEmbed

FastEmbed’s lightweight design makes implementation quick and straightforward for users across skill levels, from beginners to experienced developers. Here’s a step-by-step guide to setting up and using FastEmbed for your next NLP project:

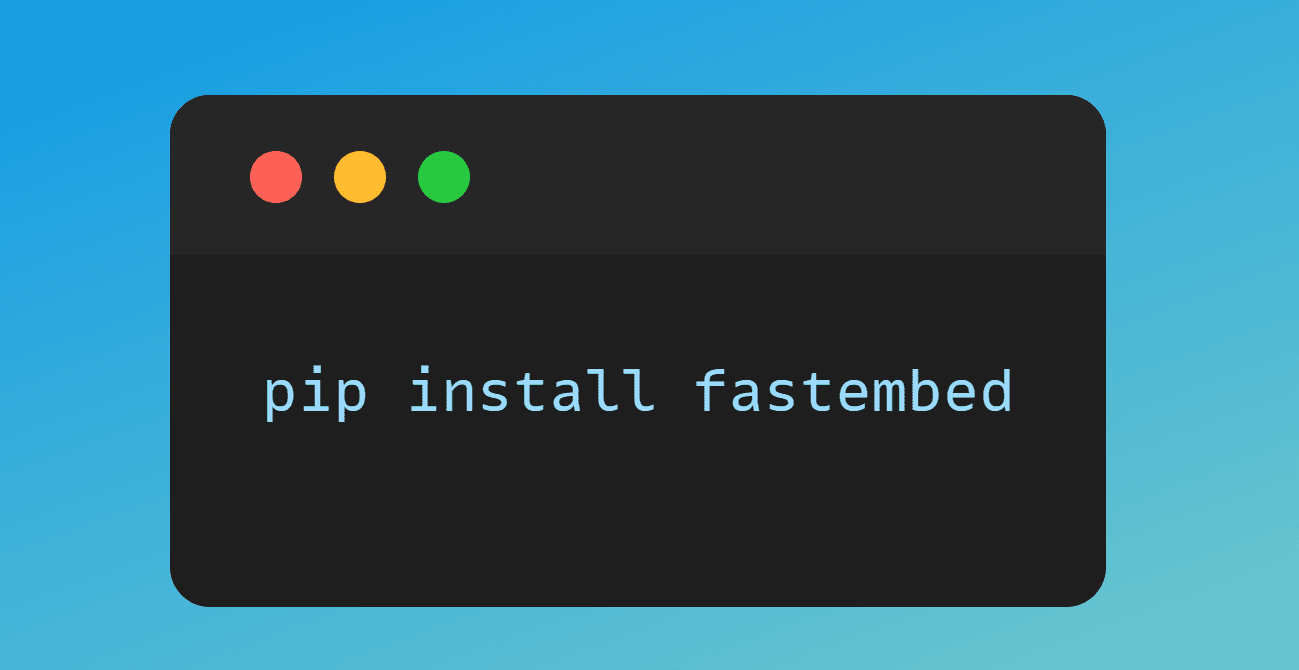

Installation and Setup

FastEmbed supports Python-based workflows, making it compatible with widely used development environments. Follow these steps to get started:

Install Dependencies:

Install FastEmbed and its minimal requirements from PyPI with pip: `pip install fastembed`.

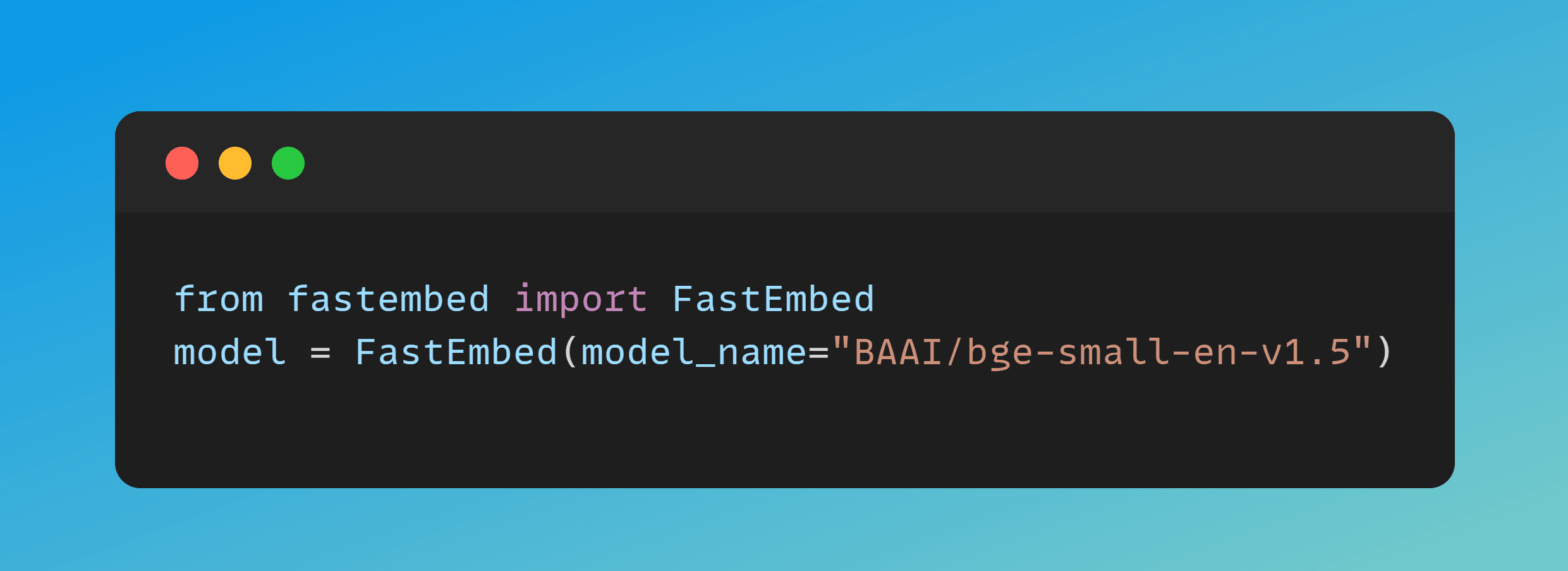

Loading Pretrained Models:

FastEmbed utilizes efficient models like BAAI/bge-small-en-v1.5. Loading a model is simple:

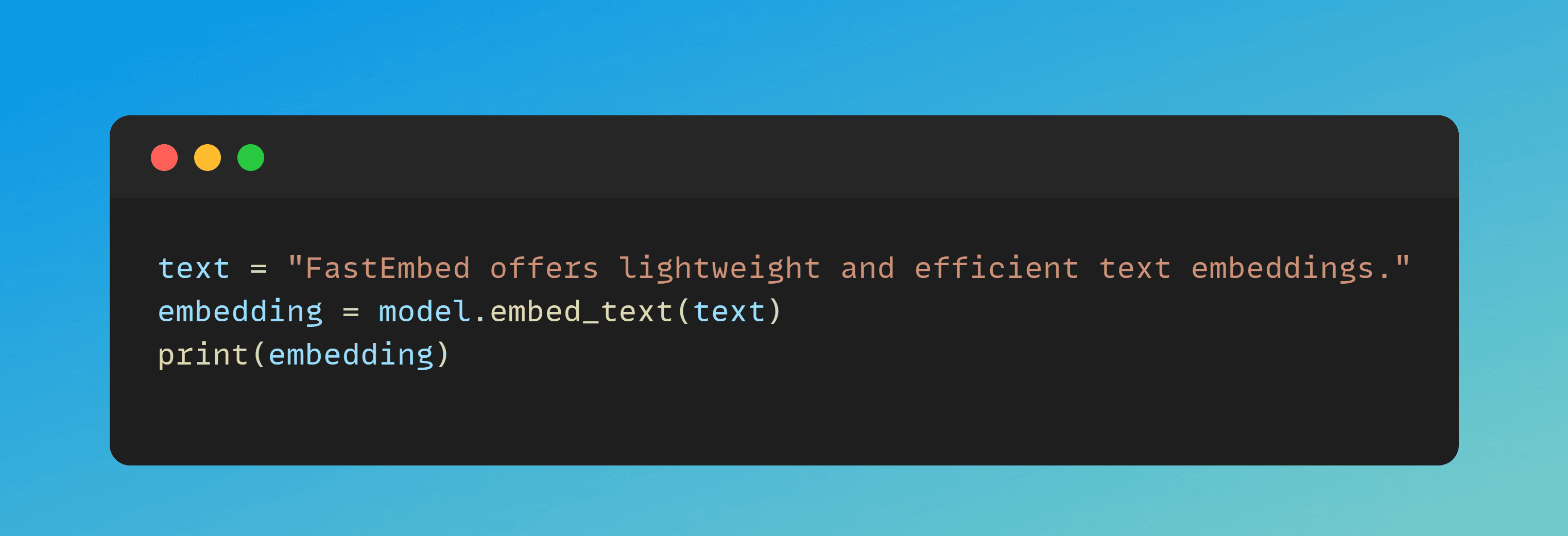

Generating Embeddings:

Generate embeddings with minimal code:
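A minimal sketch of that step, assuming the `TextEmbedding` class from the `fastembed` package. Its `embed` method returns a generator of NumPy arrays, so the helper materializes them into plain lists; the optional `model` argument is a hypothetical convenience for reusing an already-loaded model.

```python
from typing import Iterable, List, Optional


def embed_documents(
    documents: Iterable[str],
    model: Optional[object] = None,
    batch_size: int = 256,
) -> List[List[float]]:
    """Embed documents with FastEmbed and return one list of floats per document."""
    if model is None:
        # Constructing the model downloads its weights if they aren't cached yet.
        from fastembed import TextEmbedding

        model = TextEmbedding(model_name="BAAI/bge-small-en-v1.5")
    # embed() is lazy: it yields one vector per document, batch by batch.
    return [[float(x) for x in vector] for vector in model.embed(documents, batch_size=batch_size)]
```

For example, `embed_documents(["FastEmbed is efficient.", "This tool optimizes NLP."])` should return two vectors of 384 dimensions each for bge-small-en-v1.5. When embedding repeatedly, load the model once and pass it in, since construction is the slow part.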

Hybrid Search Use Case

FastEmbed shines in applications like hybrid search systems that combine semantic and keyword-based search techniques.

Example:

Suppose you are building a document search tool. You can use FastEmbed to generate embeddings for semantic matching:
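A minimal sketch of such a tool, assuming FastEmbed's `TextEmbedding` with the BAAI/bge-small-en-v1.5 model for the semantic side. The keyword side is a simple, hypothetical term-overlap score, and the two are blended with a weight `alpha`; both are illustrative choices rather than FastEmbed features. Documents are embedded once when the index is built, so each search only embeds the query.

```python
import math
import re
from functools import lru_cache
from typing import Callable, List, Sequence, Set, Tuple

Vector = Sequence[float]


@lru_cache(maxsize=1)
def _load_model(model_name: str = "BAAI/bge-small-en-v1.5"):
    from fastembed import TextEmbedding  # lazy import; pip install fastembed

    return TextEmbedding(model_name=model_name)


def fastembed_embed(texts: List[str]) -> List[Vector]:
    return [list(vector) for vector in _load_model().embed(texts)]


def _tokens(text: str) -> Set[str]:
    """Lowercased word set used for the keyword score."""
    return set(re.findall(r"\w+", text.lower()))


def _cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class HybridDocumentIndex:
    """Blends semantic (embedding) similarity with simple keyword overlap."""

    def __init__(
        self,
        documents: List[str],
        embed_fn: Callable[[List[str]], List[Vector]] = fastembed_embed,
        alpha: float = 0.7,
    ):
        self.documents = list(documents)
        self.embed_fn = embed_fn
        self.alpha = alpha  # weight of the semantic score; 1 - alpha goes to keywords
        self.doc_vectors = embed_fn(self.documents)  # embed the corpus once
        self.doc_tokens = [_tokens(doc) for doc in self.documents]

    def search(self, query: str, k: int = 3) -> List[Tuple[float, str]]:
        query_vec = self.embed_fn([query])[0]
        query_tokens = _tokens(query)
        results = []
        for doc, vec, tokens in zip(self.documents, self.doc_vectors, self.doc_tokens):
            semantic = _cosine(query_vec, vec)
            # Fraction of query words that appear literally in the document.
            keyword = len(query_tokens & tokens) / len(query_tokens) if query_tokens else 0.0
            results.append((self.alpha * semantic + (1 - self.alpha) * keyword, doc))
        results.sort(key=lambda pair: pair[0], reverse=True)
        return results[:k]
```

Raising `alpha` favors semantic matches and lowering it favors exact keyword hits; it is worth tuning against a handful of real queries.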

This setup enables efficient, real-time search functionalities for various applications.

Resources for Further Learning

- Explore the FastEmbed documentation for advanced features.

- Access tutorials demonstrating integration with platforms like AWS Lambda.

Challenges and Future Potential

While FastEmbed’s capabilities are impressive, there are areas for improvement and vast untapped potential for future growth.

Current Challenges

- Ecosystem Size: FastEmbed’s ecosystem is still growing compared to established frameworks like Sentence Transformers. This can limit access to pretrained models and third-party integrations.

- Specialized Tasks: While FastEmbed is efficient for general text embeddings, tasks requiring deep customization or domain-specific embeddings may require more advanced tuning.

- Community Support: A smaller user base means fewer tutorials, community examples, and troubleshooting resources.

- Evaluation Across Domains: FastEmbed performs exceptionally well in resource-constrained environments, but benchmarks for niche domains (e.g., legal, medical) are less explored.

Future Directions

- Expanded Model Library: Development of domain-specific FastEmbed models (e.g., biomedical or legal text embeddings) would expand its usability.

- Integration with Large Ecosystems: Partnerships with platforms like HuggingFace or TensorFlow could streamline adoption by existing users.

- Edge AI Innovations: Further optimization for mobile and IoT devices would position FastEmbed as a leader in edge NLP applications.

- Community Contributions: Encouraging open-source contributions can enrich the ecosystem and address gaps in documentation or model availability.

FastEmbed’s trajectory suggests it could play a pivotal role in future NLP innovations, emphasizing lightweight, scalable solutions.

FAQ

What is FastEmbed?

FastEmbed is a lightweight and efficient library for generating text embeddings. It’s designed to be faster, easier to use, and more resource-friendly compared to many traditional embedding models.

How does FastEmbed work?

It uses optimized transformer models to convert words, phrases, or documents into numerical vectors, capturing semantic meaning for search, clustering, or classification tasks.

What are the benefits of using FastEmbed over traditional embeddings?

FastEmbed offers speed, low memory usage, and scalability, making it suitable for real-time applications like search engines, chatbots, and recommendation systems.

Can FastEmbed handle large datasets?

Yes, FastEmbed is optimized for scalability. It can process large text corpora efficiently without requiring heavy computational resources.

What are the main applications of FastEmbed?

Common applications include semantic search, document clustering, sentiment analysis, question answering, and powering recommendation engines.

Is FastEmbed suitable for multilingual text processing?

The default BAAI/bge-small-en-v1.5 model is English-only, but FastEmbed also offers multilingual models, enabling cross-lingual applications such as global search.

How does FastEmbed improve semantic search?

It generates embeddings that capture contextual meaning, allowing search systems to retrieve results based on intent and meaning—not just keyword matches.

Can FastEmbed be integrated with AI models and pipelines?

Yes, it’s highly flexible and can be integrated with machine learning pipelines, NLP tasks, and large language model workflows.

Is FastEmbed open-source and easy to use?

Yes, FastEmbed is open-source, lightweight, and comes with simple APIs, making it easy for developers and researchers to implement quickly.

Conclusion

FastEmbed is a valuable addition to the field of text embeddings, offering strong efficiency and simplicity. Its lightweight models and focus on resource optimization make it a compelling choice for developers and businesses alike.

Key Takeaways

- Efficiency Redefined: FastEmbed brings high performance to low-resource environments, enabling real-time applications.

- Versatility: From search engines to chatbots, its applications span multiple industries.

- Future Promise: As its ecosystem grows, FastEmbed is well positioned to reshape text embeddings with its lightweight approach.

Are you ready to harness the power of FastEmbed in your NLP projects? Start today by exploring its robust features and simple setup. Learn more and experiment with real-world use cases to experience its transformative potential.
