Introduction: Comparison of BERT vs GPT

In the fast-paced world of artificial intelligence (AI), language models like BERT and GPT have revolutionized how machines process and understand human language. These two groundbreaking models, developed by Google and OpenAI respectively, represent significant advancements in natural language processing (NLP), yet they are designed with fundamentally different architectures and purposes. Understanding the nuances between BERT vs GPT is essential for anyone working in AI, as these models cater to distinct applications—from search engine optimization to text generation.

This blog dives deep into the difference between BERT and GPT, exploring BERT vs GPT architecture, applications, and training methodologies. Along the way, we’ll uncover these models’ real-world impact, discuss OpenAI embeddings vs BERT, and provide insights into emerging trends. By the end of this guide, you’ll know which model aligns best with your specific requirements.

Understanding BERT: The Contextual King

What is BERT?

BERT (Bidirectional Encoder Representations from Transformers), introduced by Google in 2018, marked a paradigm shift in natural language understanding (NLU). Unlike earlier models, BERT processes text bi-directionally, analyzing the relationships between words both before and after a target word in a sentence. This bidirectional approach allows BERT to grasp deeper contextual meaning, making it highly effective for tasks that require nuanced comprehension.

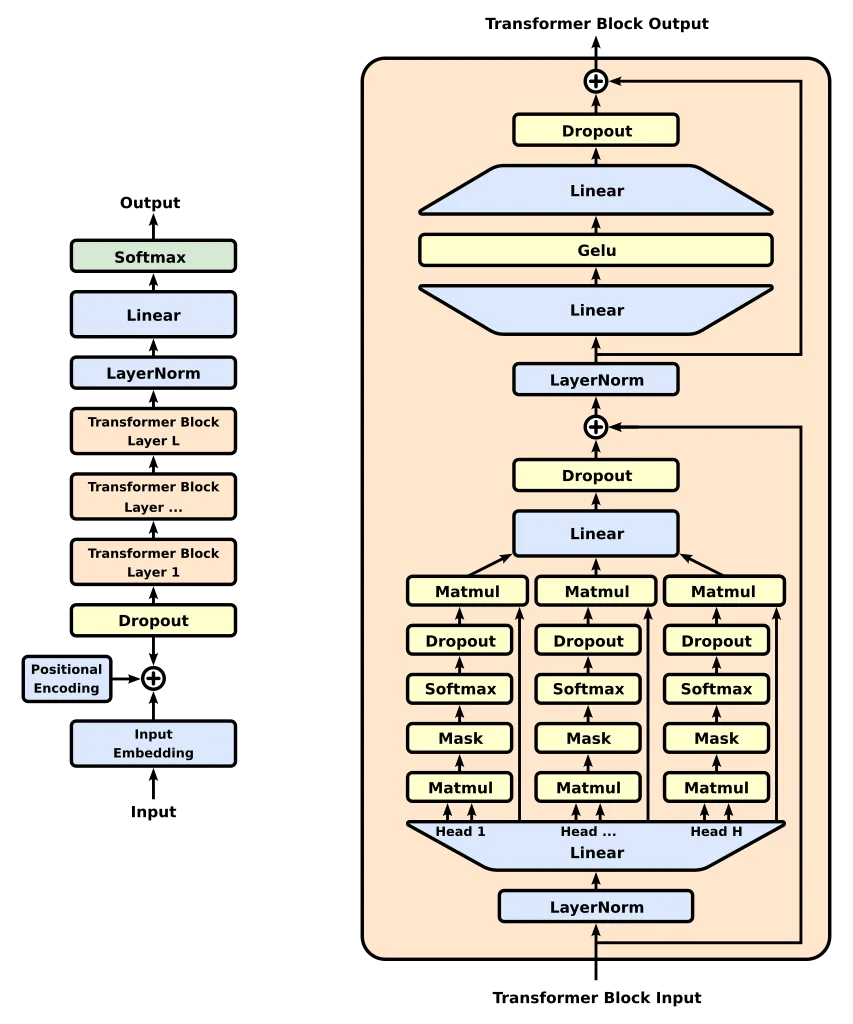

How BERT’s Encoder Works

BERT is built on the transformer encoder architecture, which enables it to process an entire input sequence simultaneously. Within each encoder layer, self-attention assigns a weight to every pair of tokens, so each token’s representation draws most heavily on the tokens that are most relevant to it, whether they appear before or after it. This architecture is the backbone of BERT’s ability to handle tasks like:

- Question answering (e.g., Google Search snippets).

- Text classification (e.g., spam detection or sentiment analysis).

- Named entity recognition (e.g., extracting names, dates, and places from text).
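To make the attention idea concrete, here is a minimal sketch of unmasked (encoder-style) scaled dot-product attention in plain Python. The two-dimensional embeddings are made up for illustration, and the sketch uses the embeddings directly as queries and keys, whereas a real encoder first applies learned projections.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention_weights(vectors):
    """Scaled dot-product attention weights with no mask (encoder style).

    Simplification: each embedding serves as both query and key.
    """
    dim = len(vectors[0])
    weights = []
    for query in vectors:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights.append(softmax(scores))
    return weights

# Hypothetical 2-dimensional embeddings for a three-token input.
tokens = ["the", "sky", "blue"]
embeddings = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]

attn = self_attention_weights(embeddings)
for token, row in zip(tokens, attn):
    print(token, [round(w, 3) for w in row])
```

Every row contains a non-zero weight for every position, including positions to the right of the current token. That is what "bidirectional" means in practice.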

Training Objectives of BERT

BERT leverages two unique training objectives:

- Masked Language Modeling (MLM): A portion of the input tokens (about 15% in the original BERT setup) is randomly masked, and the model is trained to predict the missing words. This forces BERT to use the context on both sides of each gap rather than relying only on the words to its left.

- Next Sentence Prediction (NSP): BERT is trained to predict whether the second sentence in a pair actually follows the first in the source text. This helps with sentence-pair tasks such as question answering and natural language inference.

These training methods distinguish BERT from models like GPT, which rely on unidirectional processing.
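The masking step can be sketched in a few lines. The 15% selection rate and the 80/10/10 split (mask, random token, unchanged) follow the original BERT recipe. The function name, the word-level tokens and the tiny vocabulary are assumptions made for illustration.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, vocab, mask_prob=0.15, seed=0):
    """Return (corrupted_tokens, labels) for masked language modeling.

    labels[i] is the original token where the model must predict it,
    and None at every other position.
    """
    rng = random.Random(seed)
    corrupted, labels = [], []
    for token in tokens:
        if rng.random() < mask_prob:
            labels.append(token)
            roll = rng.random()
            if roll < 0.8:
                corrupted.append(MASK)               # 80%: replace with [MASK]
            elif roll < 0.9:
                corrupted.append(rng.choice(vocab))  # 10%: random vocabulary token
            else:
                corrupted.append(token)              # 10%: leave unchanged
        else:
            labels.append(None)
            corrupted.append(token)
    return corrupted, labels

sentence = "the quick brown fox jumps over the lazy dog".split()
vocabulary = sorted(set(sentence))  # toy vocabulary, for illustration only
corrupted, labels = mask_tokens(sentence, vocabulary, mask_prob=0.15, seed=1)
print(corrupted)
print(labels)
```

The loss is computed only at positions where `labels` is not `None`, so the model is scored on reconstructing the selected tokens from everything around them.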

Applications of BERT

BERT excels in language understanding tasks where context is critical. Key applications include:

- Search Engines: Google uses BERT to interpret user queries more accurately, enhancing the relevance of search results.

- Sentiment Analysis: Determining user sentiment in social media or product reviews.

- Text Summarization: Selecting the most important sentences from lengthy articles to form a concise (extractive) summary.

By prioritizing context over sequence, BERT has become the go-to model for industries requiring precise language comprehension.

GPT Unveiled: The Autoregressive Powerhouse

What is GPT?

GPT (Generative Pre-trained Transformer), developed by OpenAI, is a cutting-edge model designed for natural language generation (NLG) tasks. Unlike BERT, which is focused on understanding the context in text, GPT shines in generating human-like text by predicting the next word in a sequence. Its autoregressive architecture processes data sequentially, making it a powerful tool for creative writing, conversational AI, and much more.

How GPT’s Decoder Works

GPT employs the transformer decoder architecture, which operates in a unidirectional (left-to-right) manner. This structure predicts the next word based on previous words in the sequence. The decoder pays close attention to sequential dependencies, enabling GPT to craft coherent and contextually accurate sentences.

Key features of the GPT model include:

- Attention Mechanisms: It uses self-attention layers to assign importance to specific words in the sequence.

- Positional Encoding: Tracks the order of words, crucial for sentence structure.

These elements make GPT particularly adept at generative tasks, including story writing, summarization, and chatbot interactions.
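The left-to-right restriction comes from a causal mask applied inside self-attention. The sketch below assumes a square matrix of raw attention scores (all zeros here, purely for illustration) and shows how masking changes the resulting weights.

```python
import math

def causal_attention_weights(scores):
    """Apply a causal mask to a square score matrix, then softmax each row.

    Position i may attend only to positions 0..i; later positions get weight 0.
    """
    size = len(scores)
    weights = []
    for i in range(size):
        visible = scores[i][: i + 1]           # drop everything to the right
        peak = max(visible)
        exps = [math.exp(s - peak) for s in visible]
        total = sum(exps)
        weights.append([e / total for e in exps] + [0.0] * (size - i - 1))
    return weights

# Uniform raw scores for a four-token sequence (made-up values).
raw_scores = [[0.0] * 4 for _ in range(4)]
masked = causal_attention_weights(raw_scores)
for row in masked:
    print([round(w, 2) for w in row])
```

The first token can see only itself, the second sees two positions, and so on. No token ever receives information from a later one, which is what lets the model generate text one token at a time.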

Training Objectives of GPT

GPT uses a simpler training strategy compared to BERT. Its primary objective is causal language modeling, which involves:

- Unidirectional Training: GPT predicts the next token based only on prior tokens, limiting its ability to consider future context.

- Pre-training on Large Datasets: The model is pre-trained on extensive internet text, making it familiar with a wide range of topics and writing styles.

This methodology allows GPT to excel in scenarios requiring text prediction and completion but makes it less effective for certain comprehension tasks where bidirectional context is vital.
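As a rough illustration of causal language modeling, the sketch below turns one sentence into (context, next token) training pairs and computes the cross-entropy loss for a single prediction. The probability table stands in for a model’s output and is invented for this example.

```python
import math

def next_token_pairs(tokens):
    """Pair every prefix of the sequence with the token that follows it."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]

def cross_entropy(predicted_probs, target):
    """Negative log-probability assigned to the true next token."""
    return -math.log(predicted_probs[target])

tokens = ["AI", "will", "shape", "the", "future"]
pairs = next_token_pairs(tokens)
for context, target in pairs:
    print(context, "->", target)

# Hypothetical model output for the context ["AI", "will", "shape", "the"].
probs = {"future": 0.7, "world": 0.2, "past": 0.1}
loss = cross_entropy(probs, "future")
print(round(loss, 4))
```

Training lowers this loss across many sequences, which pushes probability toward the tokens that actually come next.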

Applications of GPT

GPT’s versatility lies in its ability to generate content and assist with creative or interactive tasks. Common applications include:

- Chatbots: Used in conversational AI systems for customer support and virtual assistants.

- Content Generation: Writing blogs, articles, and even poetry with minimal human intervention.

- Code Assistance: Tools like OpenAI Codex, based on GPT, can generate and debug programming code.

Its ability to mimic human-like text has also made GPT a popular tool for creating personalized content at scale.

GPT’s Edge Over BERT: BERT vs GPT architecture

While BERT is exceptional at understanding text, GPT’s strength lies in content creation. Its autoregressive architecture makes it ideal for applications requiring language generation, such as chatbot dialogues or creative writing.

Key Differences Between BERT and GPT

The differences between BERT (Bidirectional Encoder Representations from Transformers) and GPT (Generative Pre-trained Transformer) primarily arise from their underlying architectures, training methodologies, and specific use cases. Both build on the Transformer architecture, but BERT uses only the encoder stack while GPT uses only the decoder stack, which makes them suited to different tasks.

Architectural Contrasts: BERT’s Encoder vs GPT’s Decoder

BERT’s Bidirectional Encoder Architecture:

BERT utilizes a bidirectional encoder design, enabling it to simultaneously consider the context of words from both preceding and succeeding directions. This architecture allows it to understand complex sentence structures and subtle word relationships.

- For instance, in the sentence “The bear couldn’t bear the pain,” BERT uses its bidirectional processing to differentiate between the noun “bear” and the verb “bear.”

This precise contextual understanding makes BERT particularly effective for tasks such as question answering, sentiment analysis, and named entity recognition (NER).

GPT’s Autoregressive Decoder Architecture:

In contrast, GPT operates on a unidirectional decoder model, predicting the next word based only on preceding tokens. This autoregressive approach makes GPT highly proficient in generating coherent and contextually relevant text but limits its ability to interpret holistic sentence context.

- For example, given the prompt “Once upon a time,” GPT generates a logical continuation, crafting stories or completing dialogues fluently.

Comparing BERT’s encoder with GPT’s decoder, the former excels in analysis, while the latter shines in creative and generative tasks.
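The "Once upon a time" example boils down to a loop: predict a token, append it, repeat. The sketch below uses a tiny bigram table as a stand-in for the model. This is a deliberate simplification, since GPT conditions on the whole preceding sequence rather than just the last word, and the two-sentence corpus is invented.

```python
from collections import Counter, defaultdict

def train_bigram(corpus):
    """Count which word follows which in the training sentences."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.split()
        for previous, following in zip(words, words[1:]):
            counts[previous][following] += 1
    return counts

def generate(counts, prompt, max_new_tokens=5):
    """Greedy autoregressive decoding: always append the most frequent follower."""
    words = prompt.split()
    for _ in range(max_new_tokens):
        followers = counts.get(words[-1])
        if not followers:
            break  # nothing was ever seen after this word
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)

corpus = [
    "once upon a time there lived a king",
    "once upon a time there lived a wise king",
]
model = train_bigram(corpus)
story = generate(model, "once upon a time", max_new_tokens=2)
print(story)
```

Each new word depends only on what has already been written, never on what comes later. A real GPT model replaces the bigram table with a transformer decoder and usually samples from the predicted distribution instead of always taking the top choice.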

Training Objectives: Masked vs Causal Language Modeling

BERT’s Masked Language Model (MLM):

BERT is trained by randomly masking tokens within a sentence and predicting the masked words, a technique that compels the model to understand both the left and right context.

- Example: In the phrase “The [MASK] is blue,” BERT determines that the missing word is likely “sky” by considering the entire sentence’s meaning.

This method makes BERT an analytical powerhouse for applications requiring deep contextual comprehension.
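To see why the right-hand context matters for “The [MASK] is blue,” consider a toy counting model. It is not how BERT works internally (BERT learns these relationships with attention), and the corpus is invented, but it shows how candidates change once the words after the gap are taken into account.

```python
from collections import Counter

def candidates(corpus, pattern, use_right_context=True):
    """Count words that fill the [MASK] slot in sentences matching the pattern."""
    parts = pattern.lower().split()
    slot = parts.index("[mask]")
    counts = Counter()
    for sentence in corpus:
        words = sentence.lower().split()
        if len(words) <= slot:
            continue
        if words[:slot] != parts[:slot]:
            continue  # left context does not match
        if use_right_context and words[slot + 1:] != parts[slot + 1:]:
            continue  # right context does not match
        counts[words[slot]] += 1
    return counts

corpus = [
    "the sky is blue",
    "the sky is blue",
    "the ocean is blue",
    "the grass is green",
    "the grass is green",
    "the grass is wet",
]

both_sides = candidates(corpus, "The [MASK] is blue")
left_only = candidates(corpus, "The [MASK] is blue", use_right_context=False)
print(both_sides.most_common(1)[0][0])
print(left_only.most_common(1)[0][0])
```

With both sides visible the top candidate is "sky". With only the left context, the most frequent word after "the" wins, which here is "grass".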

GPT’s Causal Language Model (CLM):

GPT follows a sequential training strategy, predicting the next word in a left-to-right manner. Its focus is on fluency and naturalness, ideal for tasks like dialogue generation and creative writing.

- Example: For the prompt “AI will shape the future of,” GPT might generate “technology, healthcare, and communication,” emphasizing coherence and relevance.

This comparison highlights how the training objectives define each model’s strengths: BERT prioritizes understanding, while GPT focuses on generation.

Performance Benchmarks: BERT vs GPT in Action

The strengths of the two architectures are evident in their benchmark performance:

- BERT set state-of-the-art results on GLUE (General Language Understanding Evaluation) when it was released, excelling in text classification and semantic similarity analysis. Its bidirectional nature allowed it to outperform the original GPT on these comprehension-heavy challenges.

- GPT sets the bar for generative benchmarks, such as creating stories, dialogue systems, or even Python code, showcasing its decoder’s capacity for sequential fluency.

Applications in Real-World Scenarios

The encoder and decoder designs show their impact in distinct real-world settings:

- BERT: Used by Google Search for query interpretation, enabling more accurate and context-aware search results.

- GPT: Powers tools like ChatGPT, which facilitate dynamic conversations, email drafting, and creative writing tasks.

| BERT (Contextual Understanding) | GPT (Text Generation) |
|---|---|
| Enhancing search engine algorithms | Powering chatbots and virtual assistants |
| Identifying customer sentiment | Generating blog content or creative stories |
| Summarizing dense legal or medical texts | Assisting in software code autocompletion |

For example, Google Search uses BERT to interpret user queries better by grasping the intent behind phrases, whereas GPT-powered applications like ChatGPT can hold dynamic conversations or write emails.

Emerging Hybrid Models: The Future of BERT vs GPT

Encoder-decoder models such as T5 bridge the gap between the two designs by pairing a bidirectional encoder, as in BERT, with an autoregressive decoder, as in GPT. Large generative models such as GPT-4, available through ChatGPT, approach the same goal from the other side by handling many comprehension tasks through prompting. These approaches are paving the way for AI systems that blend contextual understanding with creative fluency, so they can tackle diverse challenges in the ever-evolving AI landscape.

OpenAI Embeddings vs BERT

In this section, we’ll compare OpenAI’s GPT-based embeddings and BERT embeddings, highlighting their differences, use cases, and advantages in various text similarity and classification tasks. This comparison is essential for understanding the distinct strengths of these AI models and when to choose one over the other.

Overview of OpenAI’s GPT-Based Embeddings

OpenAI’s GPT-based embeddings are designed for semantic search, clustering, and classification tasks by leveraging the generative pre-trained transformer architecture. Key attributes include:

- Rich Contextual Representation: These embeddings capture long-range dependencies, excelling in tasks where text meaning spans multiple sentences or paragraphs.

- Versatile Use Cases: From search engines to recommendation systems, GPT embeddings are highly adaptable.

For example, OpenAI’s embeddings can rank customer reviews by relevance to a query like, “Best laptops for students,” even when the reviews use vastly different terminologies.
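Ranking by relevance usually comes down to comparing embedding vectors with cosine similarity. In the sketch below the three-dimensional vectors are made up. In practice they would come from an embedding model and have hundreds or thousands of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def rank_by_similarity(query_vector, documents):
    """Return document texts ordered from most to least similar to the query."""
    scored = [
        (cosine_similarity(query_vector, vector), text)
        for text, vector in documents
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored]

# Hypothetical embeddings for the query "Best laptops for students" and three reviews.
query = [0.9, 0.1, 0.0]
reviews = [
    ("Heavy gaming rig with loud fans", [0.1, 0.9, 0.2]),
    ("Lightweight notebook, great for lectures", [0.8, 0.2, 0.1]),
    ("Affordable and lasts a full day of classes", [0.7, 0.1, 0.3]),
]

ranked = rank_by_similarity(query, reviews)
for text in ranked:
    print(text)
```

Neither of the top two reviews contains the words "laptop" or "student". The match comes entirely from how close the vectors are, which is the point of semantic search.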

Key Distinctions Between OpenAI Embeddings Vs BERT Embeddings

| Feature | OpenAI GPT-Based Embeddings | BERT Embeddings |
|---|---|---|
| Architecture | Focused on generation and long-range context | Optimized for bidirectional encoding |
| Strengths | Semantic understanding over lengthy contexts | Precision in shorter, context-rich tasks |
| Applications | Search ranking, recommendation systems | Sentiment analysis, question answering |
| Training Objective | Autoregressive (predicting next token) | Masked language modeling |
| Performance | Better in creative and generative tasks | Superior in precise context disambiguation |

Example:

- OpenAI embeddings can be the better fit when determining how a movie description aligns with user preferences across multiple dimensions, such as mood, genre, and cast.

- Conversely, BERT is ideal for detecting nuanced sentiments in product reviews like, “The phone’s camera is good, but the battery life is disappointing.”

When to Use OpenAI Embeddings vs BERT

- For Tasks Requiring Long-Range Context Understanding: OpenAI embeddings shine in applications like document similarity or knowledge graphs, where relationships span large text corpora.

- In Generative Contexts: They are better suited for dynamic search engines or chatbots where responses need to be fluid and adapt to multi-turn conversations.

- High-Level Abstraction: OpenAI embeddings excel in summarizing or classifying abstract ideas, such as clustering academic papers by themes like sustainability in AI.
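Clustering documents by theme can be sketched with a small k-means loop over their embeddings. The two-dimensional vectors and the choice of starting centroids below are assumptions for illustration. Real embeddings are much larger, and a library implementation would normally be used.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def kmeans(points, centroids, iterations=10):
    """Plain k-means with caller-supplied starting centroids."""
    centroids = [list(c) for c in centroids]
    assignment = []
    for _ in range(iterations):
        # Step 1: assign each point to its nearest centroid.
        assignment = [
            min(range(len(centroids)), key=lambda k: squared_distance(p, centroids[k]))
            for p in points
        ]
        # Step 2: move each centroid to the mean of its members.
        for k in range(len(centroids)):
            members = [p for p, a in zip(points, assignment) if a == k]
            if members:  # an empty cluster keeps its previous centroid
                centroids[k] = [sum(dim) / len(members) for dim in zip(*members)]
    return assignment, centroids

# Hypothetical embeddings for four paper abstracts.
paper_vectors = [[0.9, 0.1], [0.8, 0.2], [0.1, 0.9], [0.2, 0.8]]
assignment, centroids = kmeans(paper_vectors, [paper_vectors[0], paper_vectors[2]])
print(assignment)
```

Papers that land in the same cluster have nearby embeddings, which tends to mean they discuss related themes even when their wording differs.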

When to Use BERT Instead:

- In short, structured text analysis like tweets or product titles, BERT’s bidirectional attention ensures precise semantic alignment.
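BERT produces one vector per token, so short texts need to be reduced to a single vector before they can be compared. One common approach is mean pooling over the non-padding tokens. The sketch below assumes the token vectors and attention mask have already been produced by a model, and the numbers are made up.

```python
def mean_pool(token_vectors, attention_mask):
    """Average token vectors, skipping padding positions (mask value 0)."""
    kept = [vec for vec, keep in zip(token_vectors, attention_mask) if keep == 1]
    if not kept:
        raise ValueError("attention_mask selects no tokens")
    return [sum(dim) / len(kept) for dim in zip(*kept)]

# Two real tokens followed by one padding token (hypothetical values).
token_vectors = [[1.0, 2.0], [3.0, 4.0], [0.0, 0.0]]
attention_mask = [1, 1, 0]

sentence_vector = mean_pool(token_vectors, attention_mask)
print(sentence_vector)
```

Another common option is to use the vector of the special `[CLS]` token instead. Which works better depends on the model and the task.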

Applications in Real-World Scenarios

- Semantic Similarity Models: OpenAI embeddings are widely used in tools like semantic search engines for e-commerce, helping users find products even with vague queries.

- AI Embeddings in Chatbots: For chatbots requiring intuitive, context-aware responses, OpenAI embeddings are often a good fit for retrieving relevant context across multi-turn conversations.

Example:

An AI writing assistant built on a GPT model can turn a user’s input of “write a professional email to reschedule a meeting” into a polished draft, while BERT might excel in analyzing the tone of the user’s message.

Real-World Use Cases: Choosing the Right Model

When choosing between BERT and GPT for an application, it’s important to understand the strengths and limitations of each model within real-world industries. The agentic AI tools developed using BERT and GPT have transformed sectors like healthcare, education, and marketing by enabling more accurate, scalable, and efficient natural language processing.

Use Case Scenarios for BERT and GPT in Various Industries:

- Healthcare: In healthcare, BERT is often preferred for text classification tasks, such as analyzing electronic health records (EHRs) for disease detection or categorizing patient queries. The GPT model, on the other hand, excels in generating more human-like responses, making it ideal for chatbots and virtual assistants that interact with patients.

- Education: In the education sector, BERT is frequently used for question-answering systems and automated essay scoring, while GPT is applied for creating personalized learning experiences and generating summaries for educational content.

- Marketing: Marketers are increasingly using BERT for customer sentiment analysis to gauge public opinion about products, whereas GPT shines in text summarization and creative content generation, such as generating ad copy and social media posts.

Example Applications:

- Sentiment Analysis (BERT): BERT has demonstrated exceptional performance in sentiment analysis due to its bidirectional context understanding. This allows the model to capture nuanced meanings from text, making it ideal for evaluating customer feedback or social media comments.

- Text Summarization (GPT): GPT is widely used for text summarization as its generative capabilities allow it to understand complex paragraphs and create concise, readable summaries that retain critical information.

Performance Comparisons in Fine-Tuned Tasks:

Both models have their strengths, and specific, fine-tuned NLP tasks bring out their distinct characteristics:

- BERT excels in understanding and classifying text, making it ideal for tasks like sentiment analysis and question answering.

- GPT excels in tasks requiring creativity and coherence, such as content generation, making it a stronger candidate for applications like text summarization and dialogue generation.

When choosing between BERT and GPT, it’s crucial to consider the specific requirements of the task at hand. If you need precise classification or contextual understanding, BERT is the go-to model. For tasks requiring creative writing, GPT is more suitable. In scenarios that require both understanding and generation, hybrid systems leveraging both models can be effective.
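The rule of thumb above can be written down as a small lookup. The task names and category sets are hypothetical and only mirror the guidance in this article. A real project would also weigh cost, latency, and available training data.

```python
# Hypothetical task categories, mirroring the guidance in this article.
UNDERSTANDING_TASKS = {
    "classification",
    "sentiment_analysis",
    "question_answering",
    "named_entity_recognition",
}
GENERATION_TASKS = {
    "content_generation",
    "dialogue",
    "summarization",
    "creative_writing",
}

def recommend_model_family(tasks):
    """Suggest a model family for a set of task names."""
    tasks = set(tasks)
    unknown = tasks - UNDERSTANDING_TASKS - GENERATION_TASKS
    if unknown:
        raise ValueError(f"no rule for: {sorted(unknown)}")
    needs_understanding = bool(tasks & UNDERSTANDING_TASKS)
    needs_generation = bool(tasks & GENERATION_TASKS)
    if needs_understanding and needs_generation:
        return "hybrid"
    if needs_understanding:
        return "BERT-style encoder"
    if needs_generation:
        return "GPT-style decoder"
    raise ValueError("no tasks given")

print(recommend_model_family(["sentiment_analysis"]))
print(recommend_model_family(["dialogue", "question_answering"]))
```

A support pipeline that first classifies a ticket and then drafts a reply would come out as "hybrid": an encoder model for the classification step and a generative model for the reply.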

The Role of Agentic AI Frameworks

The growing complexity of AI models like BERT and GPT has led to the rise of agentic AI frameworks designed to govern AI applications and ensure that their use remains ethical and responsible. These frameworks provide guidelines for the development, deployment, and monitoring of AI systems to mitigate risks and ensure safety.

Agentic AI in the Context of BERT and GPT

Agentic AI refers to AI systems that are designed with a level of autonomy and decision-making capabilities. When applied to models like BERT and GPT, agentic AI frameworks ensure that these systems are used responsibly in environments like healthcare, legal, and financial industries. These models, when governed by strong frameworks, can help in delivering accurate predictions without infringing on privacy or bias.

How Frameworks Can Enhance Model Usage and Safety

AI frameworks provide a structured approach to AI model governance by setting boundaries and ensuring that AI models operate within predefined ethical guidelines. For instance, frameworks can help monitor and assess the outputs of GPT-based systems in real-time, preventing the generation of harmful or biased content. Similarly, BERT-based models can be used in high-risk industries with frameworks that enforce strict data privacy measures.
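As a very rough sketch of output monitoring, the function below checks a generated response against a policy list and redacts email addresses before the text is returned. The blocked terms, the regular expression, and the function name are all assumptions for illustration. Production guardrails are far more involved.

```python
import re

# Hypothetical policy: words that should never appear in a response.
BLOCKED_TERMS = {"password", "ssn"}
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")

def review_output(text):
    """Return (allowed, cleaned_text) for one generated response."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    if words & BLOCKED_TERMS:
        return False, ""  # block the whole response
    return True, EMAIL_PATTERN.sub("[REDACTED EMAIL]", text)

print(review_output("Contact me at jane@example.com"))
print(review_output("Your password is hunter2"))
```

Matching on whole words rather than substrings avoids false positives. A substring search for "ssn" would, for example, flag the harmless word "carelessness".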

The role of agentic AI frameworks is vital for advancing NLP technology by creating environments where AI models like BERT and GPT can be trusted to make decisions, assist in content generation, and perform complex analysis without compromising on transparency and accountability.

Examples of Agentic Systems and Their Role in Advancing NLP Technology

- Healthcare: In healthcare, agentic AI can help in automating the analysis of patient data, ensuring that AI models operate under strict ethical standards regarding patient confidentiality and consent.

- Finance: In finance, agentic AI frameworks can guide AI in making financial predictions while ensuring fairness, non-bias, and ethical compliance with financial regulations.

As BERT and GPT continue to evolve, integrating agentic AI frameworks into their governance will be essential for the future of responsible AI development. These frameworks play a crucial role in maintaining ethical standards while enhancing model performance and safety in real-world applications.

Conclusion

In this comparison of BERT vs GPT, we’ve examined the strengths, differences, and applications of these transformative AI language models. By understanding the difference between BERT and GPT, businesses and developers can make more informed decisions about which model to integrate into their AI applications based on specific use cases.

Recap of Key Differences Between BERT and GPT and Their Applications

To summarize:

- BERT excels in tasks requiring deep understanding of context and is highly effective in tasks like sentiment analysis, question answering, and text classification.

- GPT, on the other hand, is designed for generative tasks and shines in text generation, summarization, and creative content production. The unique autoregressive nature of GPT makes it ideal for generating coherent and human-like text over extended sequences.

Final Advice on Selecting a Model for Specific Needs

When deciding between BERT and GPT, it’s important to consider the specific task at hand. If your project requires contextual understanding and deep analysis of text, BERT is the best fit. For applications needing creative writing, content generation, or summarization, GPT is the preferred choice. Additionally, hybrid models incorporating both technologies could become more prevalent, leveraging BERT’s strengths in comprehension and GPT’s generative capabilities to perform more complex NLP tasks.

Explore the potential of modern NLP by integrating these cutting-edge models into your AI applications. As the world of transformer models evolves, staying up-to-date with the latest advancements in BERT vs GPT will empower you to unlock new possibilities and enhance your AI solutions.
