Introduction: AI Power Consumption Is Exploding Alongside the AI Boom

AI’s Exponential Growth and Its Energy Demands

The rapid advancement of artificial intelligence, especially large language models (LLMs) and real-time generative AI, is revolutionizing industries. However, this innovation comes at a steep energy cost. AI systems require immense computational power for both training and inference, and AI power consumption is rising sharply across industries as a result.

AI training alone is highly energy-intensive. For instance, training a large LLM such as GPT-3 is estimated to have consumed about as much electricity as 120 U.S. homes use in a year. Once deployed, AI models continue to draw power for inference, where a single query can use up to 10 times as much energy as a traditional web search. Image generation can be even more demanding, with a single generated image consuming roughly the energy needed to charge a smartphone.

AI Energy Consumption Forecast

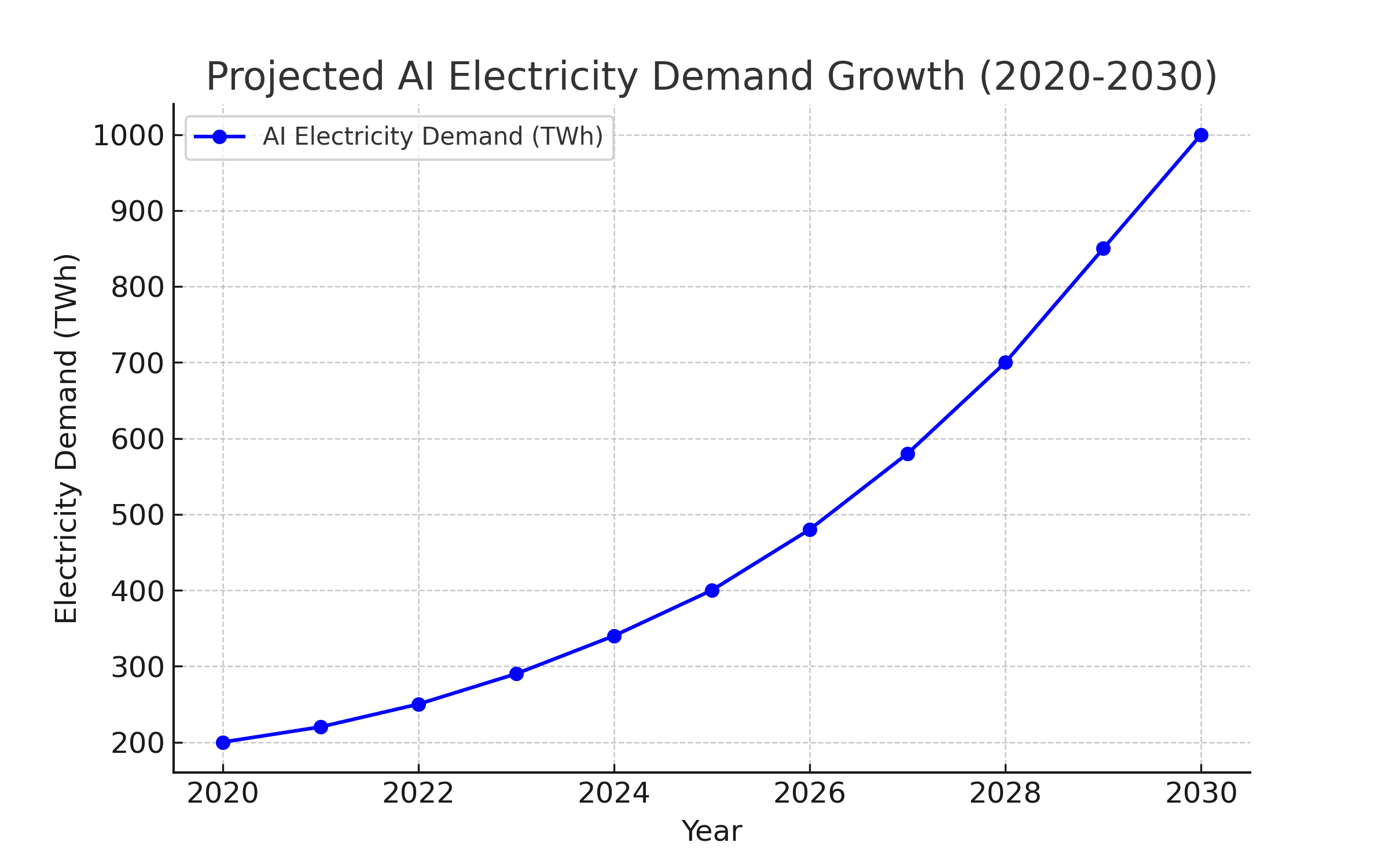

Current estimates suggest that AI power demand could reach 1,000 terawatt-hours (TWh) by 2026, double its consumption in 2022. This surge is driven by the increasing adoption of AI in businesses, automation, and consumer applications. Forecasts also indicate that data centers housing AI infrastructure will contribute significantly to this rise. In the U.S. alone, data centers consumed 176 TWh in 2023, and that figure could rise to between 325 and 580 TWh by 2028, potentially making up 6-12% of total U.S. electricity consumption.
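
To put the U.S. range in perspective, the implied yearly growth rate can be worked out from the figures quoted above. The sketch below is only an illustration: the function name is made up for this example, and it assumes smooth compound growth between 2023 and 2028, which real demand will not follow exactly.

```python
def implied_annual_growth(start_twh: float, end_twh: float, years: int) -> float:
    """Compound annual growth rate that takes start_twh to end_twh in `years` years."""
    if start_twh <= 0 or end_twh <= 0 or years <= 0:
        raise ValueError("inputs must be positive")
    return (end_twh / start_twh) ** (1 / years) - 1


# Figures quoted in the text: 176 TWh in 2023, 325 to 580 TWh by 2028.
YEARS = 2028 - 2023
low = implied_annual_growth(176, 325, YEARS)
high = implied_annual_growth(176, 580, YEARS)

print(f"Implied growth: {low:.1%} to {high:.1%} per year")
```

Under that assumption, the quoted range works out to roughly 13% to 27% growth per year.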

Why AI’s Electricity Demand Is a Pressing Issue

This surge in energy use has major implications:

- Higher Electricity Costs: As AI expands, electricity prices may rise due to increased demand.
- Environmental Impact: AI infrastructure relies on massive data centers, many of which still depend on fossil fuels, contributing to greenhouse gas emissions.
- Grid Strain: AI’s electricity demand continues to grow, potentially overloading electrical grids, especially in regions already facing energy shortages.

Efforts to improve AI’s energy efficiency are underway, with companies exploring alternative energy sources like solar, wind, and even nuclear power. However, without significant breakthroughs in energy-efficient AI design, the rapid growth of generative AI’s energy consumption could create sustainability challenges for the global power grid.

The Energy Cost of Training and Running AI Models

AI Training vs. Inference: A Huge Energy Divide

AI’s electricity demand primarily comes from two key processes: training and inference. While both are power-intensive, training large AI models is significantly more demanding, and it is a major contributor to the unprecedented growth in AI power consumption.

Training AI Models

This phase involves feeding vast datasets into deep learning models to optimize their accuracy. It requires thousands of GPUs (Graphics Processing Units) and TPUs (Tensor Processing Units), processors designed for high-performance computing. Training a single generative AI model like GPT-4 can consume as much electricity as hundreds of thousands of smartphone charges, adding significantly to generative AI’s energy consumption worldwide.

Inference

Once trained, AI models perform tasks such as generating text, analyzing images, or responding to voice commands. Inference runs on large server farms, where even a single query can consume up to 10 times the energy of a Google search, making AI’s electricity demand a growing concern for global infrastructure.

How Much Energy Does Generative AI Consume?

The power consumption of AI models is staggering:

- A single AI image-generation request can use about as much energy as charging a smartphone.
- AI-powered search engines could add billions in electricity costs annually if deployed at scale.
- Data centers housing AI models are expected to double their electricity demand by 2026, reaching over 1,000 terawatt-hours (TWh) globally.
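
To see how small per-query costs add up, the sketch below scales an energy-per-query figure to a yearly total. Only the 10x multiplier comes from the text. The 0.3 Wh per conventional search and the one billion queries per day are illustrative assumptions, not measurements from this article, and the function name is hypothetical.

```python
WH_PER_TWH = 1e12  # watt-hours in one terawatt-hour


def annual_inference_twh(wh_per_query: float, queries_per_day: float, days: int = 365) -> float:
    """Yearly energy in TWh for a given per-query energy and daily query volume."""
    return wh_per_query * queries_per_day * days / WH_PER_TWH


SEARCH_WH = 0.3                  # assumed energy of one conventional search (illustrative)
AI_MULTIPLIER = 10               # "10 times" figure quoted in the text
QUERIES_PER_DAY = 1_000_000_000  # hypothetical traffic level

baseline_twh = annual_inference_twh(SEARCH_WH, QUERIES_PER_DAY)
ai_twh = annual_inference_twh(SEARCH_WH * AI_MULTIPLIER, QUERIES_PER_DAY)

print(f"Conventional search: {baseline_twh:.3f} TWh/year")
print(f"AI-assisted search:  {ai_twh:.3f} TWh/year")
```

Changing the assumed inputs changes the totals proportionally, so the value of the sketch is the scaling relationship rather than the specific numbers.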

This rapid expansion is consistent with forecasts that AI-driven workloads will put increasing pressure on energy grids in the coming years.

The Role of GPUs and TPUs in AI’s Energy Demand

Modern AI models rely on specialized processors to handle complex computations:

- GPUs: Originally designed for graphics and gaming, GPUs are now essential for deep learning, but they draw hundreds of watts per chip, leading to massive heat generation and energy costs.
- TPUs: Developed by Google specifically for AI workloads, these custom chips are more energy-efficient but still contribute to high data center electricity demand.
- Other ASICs (Application-Specific Integrated Circuits): Further custom chips are being developed for ultra-efficient AI computations, but their adoption remains limited.

The Growing Energy Burden of AI Data Centers

AI-driven data centers are among the fastest-growing electricity consumers worldwide. Tech giants like Google, Microsoft, and OpenAI are scaling up AI infrastructure, leading to concerns over sustainability. The shift to AI-powered cloud computing could push AI-related electricity demand past 10% of total global electricity consumption within the next decade.

(Sources: Scientific American, Lawrence Berkeley National Laboratory, AI industry reports)

AI’s Growing Strain on the Global Electricity Grid

AI’s Energy Demand Is Rising Faster Than the Grid Can Handle

The rapid expansion of artificial intelligence is placing unprecedented pressure on global electricity grids. AI data centers require constant, high-power computing, leading to projections that AI’s power consumption could rival that of entire nations. As AI adoption grows across industries, experts predict a severe strain on power infrastructure, especially in high-tech regions such as the U.S., Europe, and China.

AI Energy Consumption Forecast: When Will It Peak?

Some analysts estimate that AI energy consumption will peak around 2029 at approximately 670 terawatt-hours (TWh) before efficiency improvements help slow its growth. Before that slowdown, however, the demand surge could cause significant grid stress.

- In the U.S. alone, AI-related electricity demand is projected to require an additional 14 gigawatts (GW) annually by 2030, more than the total generating capacity of some smaller nations.
- Globally, AI energy consumption could surpass 1,000 TWh by 2026, doubling from 2022 levels.
- AI’s total power footprint could become comparable to Germany’s annual electricity consumption by the mid-2020s.

The Electricity Footprint of AI vs. Countries

To understand the scale of AI’s power consumption, consider this:

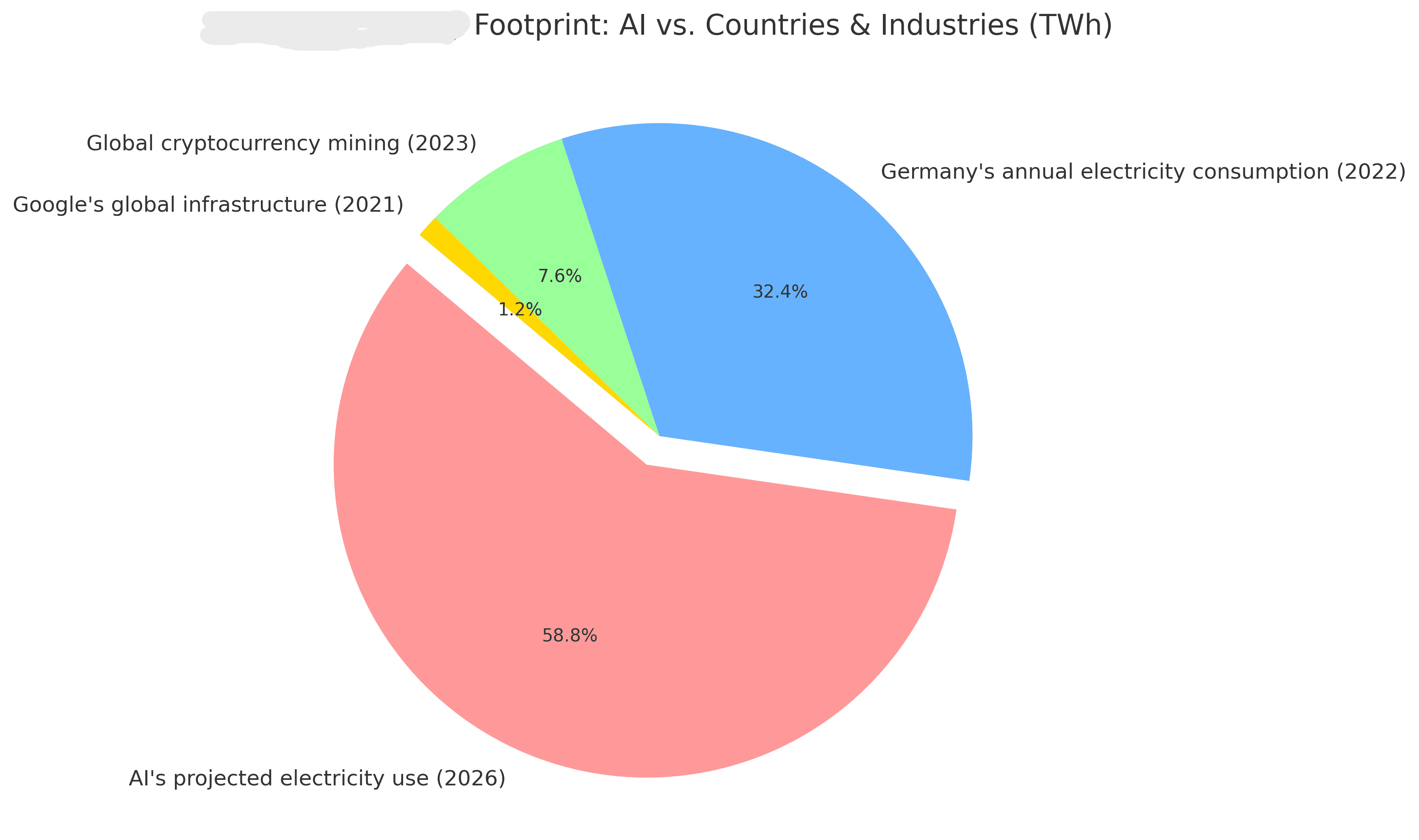

AI’s projected electricity use by 2026 (~1,000 TWh) would be:

- Equal to Germany’s total annual power consumption (~1,000 TWh in 2022).
- More than double the energy used by the entire global cryptocurrency mining industry (~400 TWh in 2023).
- Roughly 10 times the power demand of Google’s entire global infrastructure in 2021 (~100 TWh).

Grid Reliability Concerns: Can AI Push Power Systems to Their Limit?

As AI-driven applications expand into healthcare, finance, and autonomous vehicles, the power grid faces serious challenges in stability and sustainability:

- Risk of localized blackouts: AI-heavy regions like California, Texas, and Ireland are already struggling with energy bottlenecks.
- Rising costs for electricity consumers: Increased AI demand may drive up prices for businesses and households.
- Need for renewable energy integration: AI’s power footprint highlights the urgency of transitioning to solar, wind, and nuclear power to meet demand without excessive carbon emissions.

Will Efficiency Improvements Offset AI’s Energy Demand?

While AI chip efficiency is improving, it won’t fully offset rising demand in the short term. Next-generation hardware (such as liquid-cooled GPUs, photonic chips, and neuromorphic computing) may help slow the growth of generative AI’s energy consumption, but widespread implementation is still years away.

Without massive grid expansion and cleaner energy solutions, AI’s electricity demand could push power grids beyond their limits, raising concerns about the sustainability of continued AI growth.

Why Data Centers Are at the Heart of the AI Power Problem

AI’s Growing Reliance on Data Centers

AI-driven applications, from large language models (LLMs) to real-time generative AI, require immense computational resources. The vast majority of this computing occurs in high-performance data centers, which have become the backbone of the AI revolution. However, exploding AI power consumption in these facilities is pushing power grids to their limits and increasing AI’s carbon footprint.

How Much Energy Do AI Data Centers Consume?

- Data centers already account for 1-1.5% of global electricity consumption, a figure expected to double by 2030.
- AI-focused data centers can require multiple gigawatts (GW) of power, significantly more than traditional cloud computing facilities.
- The world is witnessing a surge in 5 GW-scale data centers, each of which could consume about as much electricity in a year as an entire country like New Zealand (~40 TWh annually).
- Some hyperscale AI data centers now exceed 500 MW per facility, dwarfing conventional tech infrastructure.
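
The link between a facility’s power rating (GW or MW) and its yearly energy use (TWh) is simple arithmetic: power multiplied by hours of operation. The sketch below assumes the facility runs at a constant fraction of its rated power all year; the function and parameter names are illustrative.

```python
HOURS_PER_YEAR = 8760  # 365 days x 24 hours


def annual_energy_twh(power_gw: float, utilization: float = 1.0) -> float:
    """Yearly energy in TWh for a facility drawing power_gw at the given utilization."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    gwh = power_gw * HOURS_PER_YEAR * utilization
    return gwh / 1000  # 1 TWh = 1,000 GWh


five_gw_site = annual_energy_twh(5)       # 5 GW campus at full load
hyperscale_site = annual_energy_twh(0.5)  # 500 MW facility at full load

print(f"5 GW site:   {five_gw_site:.1f} TWh/year")
print(f"500 MW site: {hyperscale_site:.2f} TWh/year")
```

At full load a 5 GW site comes to 43.8 TWh per year, which is in line with the ~40 TWh comparison above. Real facilities run below their rated power, so actual consumption would be lower.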

The Cooling Challenge: AI’s Hidden Power Drain

One of the biggest challenges in AI-driven data centers is cooling efficiency. As AI models grow in complexity, their computational demands generate excessive heat, requiring advanced cooling solutions to prevent system failures and inefficiencies.

- Traditional air cooling is reaching its limits, especially for AI workloads.
- Liquid cooling, using water or dielectric fluids, is becoming essential for AI-specific hardware (GPUs, TPUs).
- Data centers are increasingly moving to direct-to-chip cooling and immersion cooling to handle heat loads more efficiently.

AI’s Carbon Footprint: A Growing Concern

With forecasts predicting a sharp rise in AI energy consumption, sustainability is a major issue. AI’s reliance on fossil-fuel-powered grids is increasing its carbon footprint, making it a target for regulatory scrutiny and corporate sustainability efforts.

- AI companies like Google, Microsoft, and OpenAI are investing in renewable energy-powered data centers to offset emissions.
- Carbon-aware computing is emerging, in which AI workloads shift dynamically to regions with cleaner energy sources.
- AI-driven energy optimization is being explored to reduce data center electricity waste.
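
The carbon-aware idea can be sketched as a simple placement rule: among regions with enough spare capacity, run the job where the grid is cleanest. This is a minimal illustration, not how any particular provider schedules work. The region names, carbon intensities, and capacities below are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Region:
    name: str
    carbon_g_per_kwh: float   # grid carbon intensity (hypothetical values)
    free_capacity_kwh: float  # energy budget still available for new jobs


def pick_region(regions: List[Region], job_kwh: float) -> Optional[Region]:
    """Return the lowest-carbon region that can fit the job, or None if none can."""
    candidates = [r for r in regions if r.free_capacity_kwh >= job_kwh]
    if not candidates:
        return None
    return min(candidates, key=lambda r: r.carbon_g_per_kwh)


def job_emissions_kg(region: Region, job_kwh: float) -> float:
    """Estimated emissions in kg CO2 for running the job in the given region."""
    return job_kwh * region.carbon_g_per_kwh / 1000


REGIONS = [
    Region("hydro-north", carbon_g_per_kwh=30, free_capacity_kwh=400),
    Region("wind-coast", carbon_g_per_kwh=90, free_capacity_kwh=2000),
    Region("coal-central", carbon_g_per_kwh=700, free_capacity_kwh=5000),
]

chosen = pick_region(REGIONS, job_kwh=500)
if chosen is not None:
    print(chosen.name, job_emissions_kg(chosen, 500), "kg CO2")
```

A production scheduler would also weigh latency, data residency, and price, and would use live intensity data rather than fixed values.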

The Future of AI Data Centers: More Power, More Sustainability?

To sustain AI’s rapid growth while mitigating energy concerns, data center operators are focusing on:

- Deploying energy-efficient AI hardware (neuromorphic chips, photonic processors).
- Integrating AI-driven cooling and power management systems.
- Expanding the use of green hydrogen, solar, and wind power for AI infrastructure.
- Building modular and edge AI data centers to distribute workloads more efficiently.

As AI continues to revolutionize industries, balancing AI’s electricity demand with environmental responsibility will be a defining challenge for data centers in the coming decade.

Energy-Efficient AI: Can AI Become More Sustainable?

As AI energy consumption continues to surge, the demand for sustainable AI power solutions is becoming more urgent. While AI is a major driver of innovation, its energy inefficiency raises concerns about scalability, environmental impact, and grid stability. Can AI models and infrastructure evolve to consume less power while maintaining performance?

Optimizing AI Models for Lower Energy Consumption

One of the most promising approaches to reducing AI’s power footprint is through software-level optimizations. AI researchers are actively refining model architectures to minimize energy waste without sacrificing accuracy.

- Model pruning – Removing redundant weights, neurons, and layers from deep learning networks to reduce computation and power use.
- Quantization – Lowering the precision of AI computations (e.g., using 8-bit integers instead of 32-bit floating-point values), significantly cutting power consumption.
- Lightweight architectures – Designing smaller, optimized AI models that require fewer parameters and less computational power.
- Federated learning – Training models locally on edge devices and sharing only model updates, reducing reliance on centralized, energy-intensive training in cloud data centers.
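
Two of these techniques are easy to show on a toy list of weights. The sketch below uses symmetric 8-bit quantization and magnitude-based pruning, which are simple stand-ins chosen for illustration; production frameworks use more elaborate schemes, and pruning whole neurons or layers needs model-specific logic. All function names here are made up for the example.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric quantization: map floats to integers in [-127, 127] plus a scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float values from the 8-bit representation."""
    return [q * scale for q in quantized]


def prune_by_magnitude(weights: List[float], fraction: float) -> List[float]:
    """Zero out the given fraction of weights with the smallest absolute value."""
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be between 0 and 1")
    k = int(len(weights) * fraction)
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    drop = set(smallest)
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


weights = [0.02, -1.27, 0.5, 0.0, 0.9]  # toy values, not from a real model

q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
pruned = prune_by_magnitude(weights, fraction=0.4)

print("int8 values:", q)
print("max rounding error within half a step:", max_error <= scale / 2 + 1e-12)
print("pruned:", pruned)
```

Each quantized weight takes one byte instead of four, and pruned weights can be skipped entirely on hardware that supports sparse computation, which is where the energy savings come from.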

Advances in AI Hardware: Building Energy-Efficient AI Processors

Next-generation AI chips are being developed to improve processing efficiency, reducing generative AI energy consumption and reliance on traditional GPUs and TPUs, which are notorious for their high power consumption.

- Neuromorphic computing – Chips inspired by the human brain, such as Intel’s Loihi or IBM’s TrueNorth, consume far less power by mimicking biological synapses.
- Photonic AI chips – Optical processors that use light-based computation rather than electrical signals, significantly improving AI energy efficiency.
- Analog computing – Moving away from digital processing to analog AI chips, which consume much less energy for AI inference tasks.

Renewable Energy-Powered AI Data Centers

A key strategy for reducing AI’s carbon footprint is transitioning AI workloads to renewable energy-powered data centers. Companies like Google, Microsoft, and Amazon are investing in green AI computing by integrating:

- Solar-powered AI infrastructure – AI data centers equipped with solar farms for sustainable electricity generation.
- Wind-powered AI processing hubs – Hyperscale data centers in wind-rich regions to ensure clean, renewable power.
- Hydrogen energy storage – Using hydrogen fuel cells for backup power and reducing dependence on fossil fuel-based energy grids.

AI-Assisted Energy Optimization: Using AI to Improve Its Own Efficiency

Ironically, AI itself is being used to manage AI’s electricity demand through:

- AI-powered cooling systems – Machine learning models dynamically adjust cooling in data centers to minimize energy waste.
- Dynamic workload allocation – AI automatically shifts computing tasks to regions with lower electricity costs or cleaner energy sources.
- Predictive maintenance – AI-driven analytics help prevent energy losses in power-hungry data centers.

The Future of Sustainable AI Computing

The path to sustainable AI requires a multi-pronged approach—smarter AI models, efficient hardware, and renewable-powered data centers. By implementing these solutions, AI could transition from being an energy burden to an energy-conscious technology, balancing computational power with environmental responsibility.

The Future of AI and Global Energy Policies

Governments and Corporations Respond to AI’s Energy Demands

As AI power consumption explodes, governments and corporations are realizing the urgent need to manage its energy impact. Some key strategies include:

- AI energy reporting requirements – Mandating that data centers and AI companies track and disclose their electricity usage.
- AI sustainability incentives – Offering tax breaks and subsidies for AI companies that invest in renewable energy and energy-efficient computing.
- Grid modernization efforts – Upgrading national power infrastructures to support AI’s increasing electricity demand.

AI Regulations: Carbon Footprint Taxation and Energy Usage Limits

As AI’s electricity demand continues to rise, regulators are exploring carbon taxation and energy consumption limits to encourage sustainability. Potential regulations include:

- Carbon taxes on AI data centers that exceed a certain energy threshold.
- Power allocation restrictions to prevent AI from overloading grids in high-demand areas.
- Energy efficiency benchmarks for AI hardware manufacturers, ensuring lower power consumption per computation.

Future Predictions: AI Energy Demand Beyond 2030

Experts forecast that AI energy consumption will continue rising sharply until at least 2030. However, several breakthroughs could help reduce power demands:

- AI-driven optimization – Using AI itself to enhance energy efficiency within AI models.
- Breakthroughs in AI hardware – The rise of neuromorphic computing and optical chips could drastically cut energy use.
- Fusion energy & quantum computing – Theoretical solutions that could eventually power AI with minimal energy waste.

Conclusion: Balancing AI Growth with Energy Sustainability

AI’s Rapid Expansion Comes with an Energy Cost

AI’s impact on industries is undeniable, but its rising electricity consumption is a major concern. Forecasts suggest that, if left unchecked, AI’s energy consumption could soon rival that of entire nations. Balancing AI innovation with sustainability is no longer optional; it is an imperative.

The Path Forward: Energy-Efficient AI and Renewable Power

- Investing in energy-efficient AI models – Techniques like model pruning, quantization, and sparse computing must become industry standards.
- Scaling up renewable energy for AI – AI data centers must prioritize solar, wind, and green hydrogen to reduce their carbon footprint.
- AI-driven energy solutions – AI can optimize power grids and energy storage technologies for a sustainable future.

Call to Action: Driving Innovation in Green AI

Governments, tech leaders, and researchers must collaborate to develop sustainable AI power solutions by:

- Investing in R&D for energy-efficient AI chips and low-power architectures.
- Adopting clean energy policies that promote responsible AI development.
- Encouraging AI transparency – Companies should publicly report AI power usage to drive accountability.

The future of AI’s electricity demand depends on how well we manage its energy needs today. The next decade will determine whether AI becomes a driver of sustainability or a growing burden on power grids.
