Introduction: Early Computer Inventions

The journey of computers from rudimentary tools to indispensable companions has profoundly transformed human life. Today, computers power industries, drive innovation, and connect billions of people across the globe. Understanding their humble beginnings, and how early computer inventions laid the foundation for this transformation, reveals a story of ingenuity, perseverance, and vision.

In the early stages, inventors grappled with creating machines that could perform calculations faster, more accurately, and automatically. These machines weren’t just about numbers; they aimed to revolutionize thinking and problem-solving. Over time, computer science innovations such as programming languages and algorithmic logic turned these concepts into dynamic systems that could tackle complex challenges.

This blog explores the milestones in the invention of the computer and traces how these machines developed over time. By following the timeline of early computer inventions, we’ll uncover the pivotal moments that shaped today’s digital landscape and the individuals whose groundbreaking ideas continue to influence modern technology.

Precursors to Modern Computing

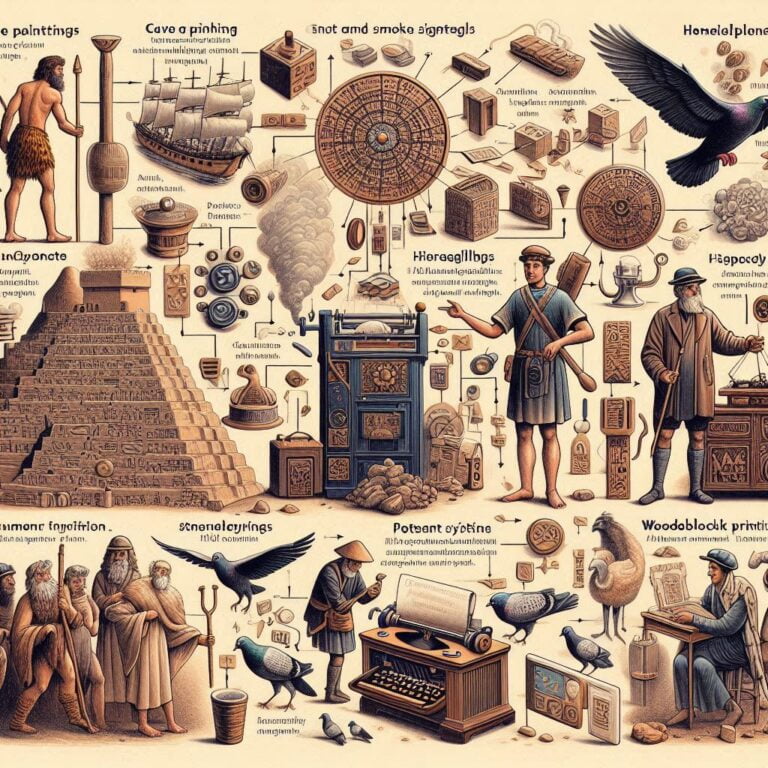

Before silicon chips and binary logic, humans relied on tools designed to simplify calculations. These tools, precursors to modern computing, illustrate humanity’s timeless quest for efficiency and accuracy.

Ancient Computational Tools

The abacus, one of the oldest known computational devices, is thought to have emerged in ancient Mesopotamia, possibly around 2300 BCE. Used for arithmetic tasks, it facilitated trade and administration. Variations like the Chinese suanpan and the Roman abacus adapted to different cultural needs, showcasing its versatility and importance in ancient societies.

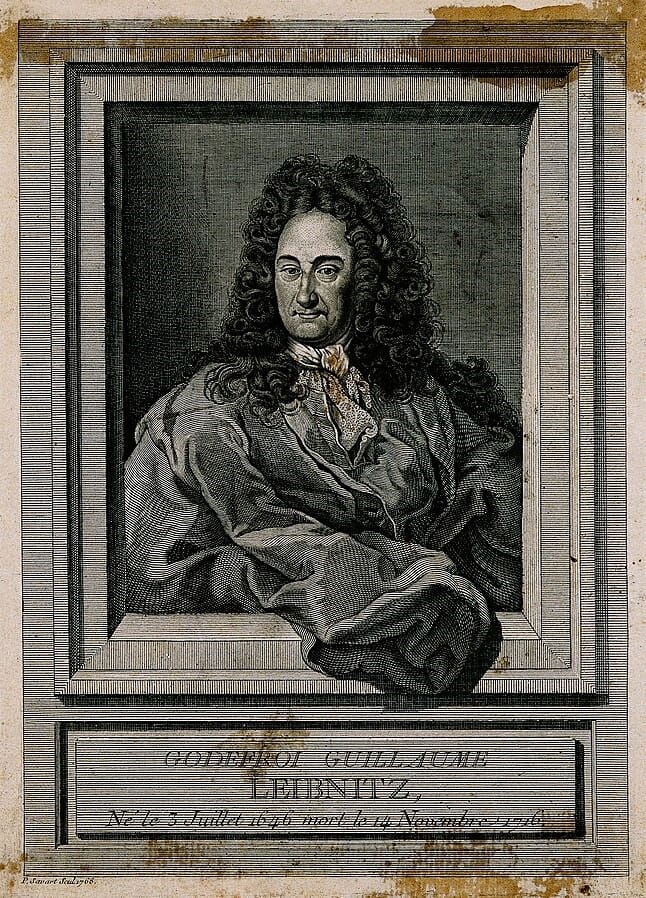

By the seventeenth century, inventors had begun developing mechanical calculators to reduce human error in computations. Blaise Pascal’s Pascaline (1642) was a pioneering device that could add and subtract using geared wheels with an automatic carry mechanism. Gottfried Wilhelm Leibniz extended these capabilities with his Step Reckoner, which could also multiply and divide. These innovations were vital stepping stones toward the invention of the computer, inspiring more advanced machines.
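At the heart of a Pascal-style calculator is the carry: when one digit wheel turns past 9, it advances the wheel to its left by one step. The short Python sketch below models that behaviour abstractly as a list of decimal wheels. It is an illustration under that assumption, not a simulation of Pascal’s actual gearing, and the function names are hypothetical.

```python
from __future__ import annotations


def from_int(n: int, num_wheels: int) -> list[int]:
    """Set the wheels to show n; wheels[0] is the units wheel."""
    return [(n // 10 ** i) % 10 for i in range(num_wheels)]


def to_int(wheels: list[int]) -> int:
    """Read the number currently shown on the wheels."""
    return sum(digit * 10 ** i for i, digit in enumerate(wheels))


def add_on_wheels(wheels: list[int], amount: int) -> list[int]:
    """Add a non-negative amount, propagating carries from wheel to wheel.

    A carry out of the last wheel is lost, so the register wraps around
    like any fixed-width machine.
    """
    result = wheels[:]
    carry = 0
    for i in range(len(result)):
        digit = amount % 10
        amount //= 10
        total = result[i] + digit + carry
        result[i] = total % 10  # a wheel can only show 0-9
        carry = total // 10     # passing 9 nudges the next wheel forward
    return result


wheels = from_int(1234, num_wheels=6)
print(to_int(add_on_wheels(wheels, 5678)))  # prints 6912
```

The sketch models addition only; the point is that a single local rule ("past 9, advance the neighbour") is enough to add numbers of any length the machine has wheels for.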

Charles Babbage’s Analytical Engine

The Analytical Engine, conceptualized by Charles Babbage in the 1830s, marked a turning point in computational history. It was the first design for a general-purpose, programmable computer, using punched cards, an idea borrowed from the Jacquard loom, to dictate operations. Its design separated the “mill” (processor) from the “store” (memory), an organization that makes it a direct conceptual ancestor of modern computers.

Although the Analytical Engine was never completed in Babbage’s lifetime, its significance went beyond the specific design. It hinted at the potential of machines to perform tasks beyond arithmetic, laying the groundwork for the computer inventions that followed.

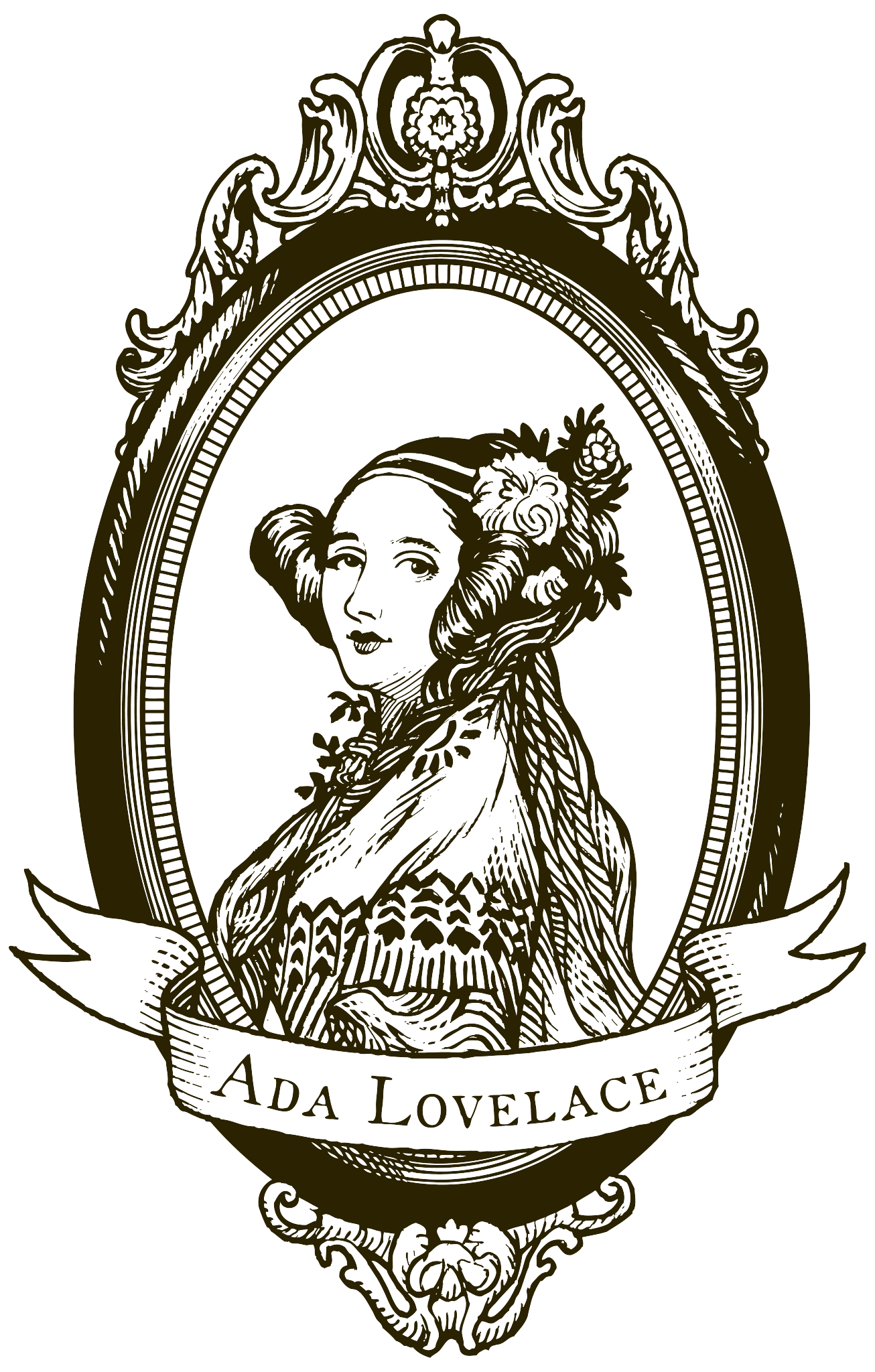

Ada Lovelace: The First Programmer

Collaborating with Babbage, Ada Lovelace envisioned how the Analytical Engine could transcend numerical computation. Her notes included a detailed algorithm for the machine, which is why she is often called the world’s first programmer. Lovelace also suggested that such a machine could manipulate symbols and even compose music, ideas central to modern computer science.
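Lovelace’s best-known example, in Note G of her 1843 notes, set out how the Analytical Engine could compute Bernoulli numbers. To give a sense of what that calculation involves, here is a minimal modern Python sketch. It uses a standard recurrence and exact fractions; it is not a transcription of Lovelace’s table of operations, and it follows the modern convention in which B1 = -1/2.

```python
from __future__ import annotations

from fractions import Fraction
from math import comb


def bernoulli_numbers(n: int) -> list[Fraction]:
    """Return B_0 .. B_n using the recurrence sum_{k=0}^{m} C(m+1, k) * B_k = 0 (m >= 1)."""
    b = [Fraction(0)] * (n + 1)
    b[0] = Fraction(1)
    for m in range(1, n + 1):
        # Everything in the recurrence except the B_m term is already known.
        acc = sum(comb(m + 1, k) * b[k] for k in range(m))
        b[m] = -acc / (m + 1)
    return b


for index, value in enumerate(bernoulli_numbers(8)):
    print(index, value)  # the last line printed is "8 -1/30"
```

Each new number depends on all the previous ones, which is exactly the kind of repeated, bookkept calculation a programmable engine was meant to take off human hands.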

Her foresight underscored the immense possibilities of computational machines, firmly establishing her as a pioneer of computing and a symbol of visionary thinking in science and technology.

Key Innovations in Early Computing (1940s-1960s)

The 1940s through the 1960s were a defining era in the history of early computer inventions, in which foundational technologies emerged and set the stage for today’s computing landscape. These innovations reflected the human drive to push boundaries in engineering, science, and problem-solving.

The Rise of Electromechanical Computers

Electromechanical computers bridged the gap between mechanical calculators and fully electronic computers. The Z3, completed by Konrad Zuse in 1941, is widely regarded as the world’s first working programmable, fully automatic digital computer. It operated using electromechanical relays and used binary floating-point arithmetic, introducing principles that underpin modern computing.

Another remarkable innovation of this time was Colossus, a fully electronic machine built with vacuum tubes and designed by Tommy Flowers and his team in 1943. This machine was created for a specific purpose: decrypting the Lorenz-encrypted messages used by German forces during World War II. Colossus was not only a technological breakthrough but also a testament to how computational power could serve strategic goals. Its success paved the way for future applications of computers in specialized fields.

These developments marked the beginning of computers being used for tasks beyond simple arithmetic, showcasing their potential for broader applications in science, engineering, and national security.

The ENIAC: A Game-Changer

The Electronic Numerical Integrator and Computer (ENIAC) was unveiled in 1946 and is celebrated as the first general-purpose, fully electronic computer. Unlike earlier electromechanical systems, ENIAC used 17,468 vacuum tubes to perform calculations at unprecedented speeds.

ENIAC could calculate an artillery trajectory in seconds, a task that previously took hours using manual methods. It also found applications in fields such as weather forecasting and atomic energy research. ENIAC’s history is also a story of collaboration: the U.S. Army funded the project, and a team led by J. Presper Eckert and John Mauchly built it at the University of Pennsylvania’s Moore School of Electrical Engineering, demonstrating how large-scale projects could drive technological advancement.
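The “Numerical Integrator” in ENIAC’s name refers to this kind of work: stepping the equations of motion forward in many small time increments instead of solving them in closed form. The Python sketch below illustrates that general idea for a shell with air resistance. The quadratic drag model, the drag coefficient, and the simple explicit Euler method are assumptions chosen for clarity; they are not a description of how ENIAC’s firing-table programs actually worked.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2


def trajectory_range(speed: float, angle_deg: float, drag_k: float = 0.0,
                     dt: float = 0.001) -> float:
    """Estimate how far a shell travels by stepping its motion forward in time.

    speed is the muzzle velocity (m/s) and angle_deg the elevation in degrees.
    drag_k is a hypothetical quadratic-drag coefficient (1/m); 0 means no air.
    Uses the explicit Euler method with a fixed time step dt (seconds).
    """
    theta = math.radians(angle_deg)
    x, y = 0.0, 0.0
    vx, vy = speed * math.cos(theta), speed * math.sin(theta)
    while True:
        v = math.hypot(vx, vy)
        ax = -drag_k * v * vx        # drag opposes the direction of motion
        ay = -G - drag_k * v * vy    # gravity plus the vertical part of drag
        x_next, y_next = x + vx * dt, y + vy * dt
        vx, vy = vx + ax * dt, vy + ay * dt
        if y_next < 0.0:
            # Linearly interpolate to the point where the path crosses y = 0.
            return x + (x_next - x) * y / (y - y_next)
        x, y = x_next, y_next


print(trajectory_range(100.0, 45.0))                # close to 100**2 / 9.81 with no drag
print(trajectory_range(100.0, 45.0, drag_k=0.001))  # noticeably shorter with drag
```

Without drag the result can be checked against the textbook formula; with drag there is no simple formula, which is why thousands of small steps, done quickly, were so valuable.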

ENIAC’s massive size and power requirements (it consumed 150 kilowatts of electricity) highlighted the need for further innovations in computing hardware. This realization spurred efforts to miniaturize components and improve efficiency, shaping the future trajectory of computer science innovations.

Binary Systems and the Birth of Transistor Technology

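The shift to binary systems in this period was pivotal, although not universal: ENIAC, for instance, still worked in decimal. By representing data as combinations of 0s and 1s, binary systems simplified hardware design, because each digit needs only two distinguishable states, which relays and vacuum tubes can reliably provide as “off” and “on.” This conceptual leap set the stage for digital computing as we know it.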
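To make the hardware argument concrete: once numbers are written in binary, addition reduces to a few logical operations (XOR, AND, OR) that two-state switches can implement. The Python sketch below models a ripple-carry adder built from such operations. The function names and the 8-bit width are illustrative assumptions, not a description of any particular historical machine.

```python
from __future__ import annotations


def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """Add three bits using only XOR, AND, and OR; return (sum_bit, carry_out)."""
    sum_bit = a ^ b ^ carry_in
    carry_out = (a & b) | (carry_in & (a ^ b))
    return sum_bit, carry_out


def add_binary(x: int, y: int, width: int = 8) -> int:
    """Add two non-negative integers bit by bit, as a ripple-carry adder would.

    The result wraps around modulo 2**width, like a fixed-width hardware register.
    """
    result, carry = 0, 0
    for i in range(width):
        bit, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= bit << i
    return result


print(format(add_binary(0b0101, 0b0011), "08b"))  # 5 + 3: prints 00001000
```

Each full adder is a tiny fixed circuit, and chaining one per bit gives an adder of any width, which is part of why binary designs scaled so well.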

In 1947, William Shockley, John Bardeen, and Walter Brattain at Bell Labs invented the transistor, marking another milestone. Over the following years, transistors replaced bulky and unreliable vacuum tubes, making computers smaller, faster, and more energy-efficient. This single innovation catalyzed a series of advancements that culminated in the development of integrated circuits and microprocessors.

These innovations during the 1940s–1960s highlight the rapid evolution of early computer inventions, driven by the need for faster, more versatile, and compact computing systems.

The Early Computer Timeline

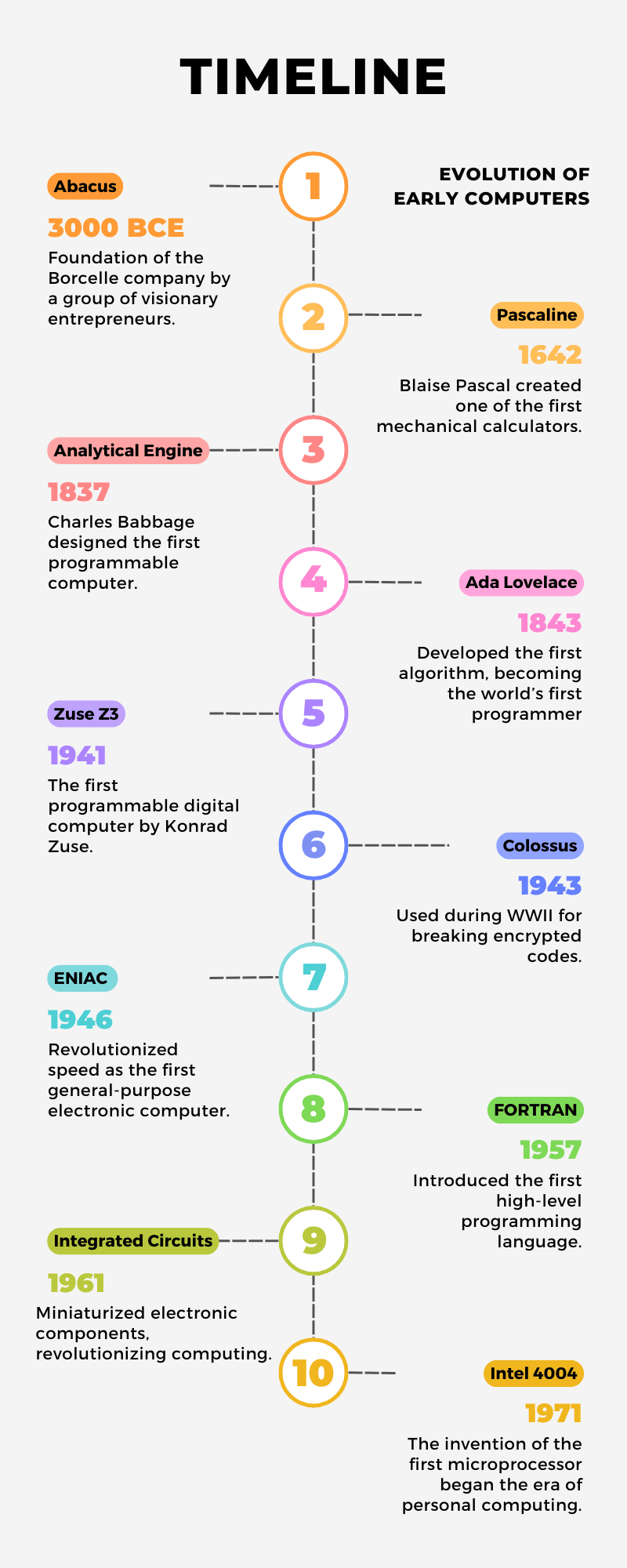

A timeline of early computer inventions offers a closer look at the significant milestones that shaped modern computing. Each step reflects a leap in human ingenuity and technological capability.

1941: Zuse Z3 – The First Programmable Computer

The Z3, created by Konrad Zuse, was revolutionary for its time. It read its program from punched tape and carried out long sequences of calculations automatically, without an operator intervening at each step. Unlike most earlier calculating machines, the Z3 used binary floating-point arithmetic, which let it work with both very large and very small numbers in complex mathematical problems.
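Floating-point arithmetic stores a number as a sign, an exponent, and a fixed number of significant binary digits (the mantissa), so very large and very small values fit in the same word. The Python sketch below shows the idea using the standard `math.frexp` and `math.ldexp` functions. The 14-bit mantissa is an illustrative choice for this example, not a claim about the Z3’s exact word format, and the function names are hypothetical.

```python
from __future__ import annotations

import math


def to_float_parts(value: float, mantissa_bits: int = 14) -> tuple[int, int, int]:
    """Split value into (sign, exponent, mantissa) so that
    value ~= (-1)**sign * mantissa * 2**(exponent - mantissa_bits).

    The mantissa is an integer in [2**(mantissa_bits - 1), 2**mantissa_bits),
    so any precision beyond mantissa_bits binary digits is rounded away.
    """
    if value == 0:
        return 0, 0, 0
    sign = 1 if value < 0 else 0
    # abs(value) == fraction * 2**exponent, with 0.5 <= fraction < 1
    fraction, exponent = math.frexp(abs(value))
    mantissa = round(fraction * 2 ** mantissa_bits)
    if mantissa == 2 ** mantissa_bits:  # rounding overflowed; renormalize
        mantissa //= 2
        exponent += 1
    return sign, exponent, mantissa


def from_float_parts(sign: int, exponent: int, mantissa: int,
                     mantissa_bits: int = 14) -> float:
    """Rebuild the (approximate) value from its sign, exponent, and mantissa."""
    magnitude = math.ldexp(mantissa, exponent - mantissa_bits)
    return -magnitude if sign else magnitude


print(to_float_parts(6.25))                     # prints (0, 3, 12800)
print(from_float_parts(*to_float_parts(0.1)))   # close to, but not exactly, 0.1
```

The trade-off is visible in the second line: a fixed number of mantissa bits buys an enormous range of magnitudes at the cost of a small, bounded relative rounding error.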

Though it was destroyed during World War II, Zuse’s Z3 remains a symbol of innovation. The reconstruction of the Z3 in the 1960s solidified its legacy as a cornerstone in the timeline of early computer inventions.

1943: Colossus – The Codebreaker

Developed by British engineer Tommy Flowers and his team, Colossus made a major contribution to the Allied war effort during World War II. Unlike the Z3, which could be programmed for a range of numerical calculations, Colossus was designed specifically to help break the Lorenz cipher.

The first Colossus used roughly 1,500 vacuum tubes, allowing it to process intercepted messages at high speed. Its success demonstrated the strategic value of computing technology and marked a significant leap in cryptanalysis. Because Colossus remained secret until the 1970s, however, its direct influence on later computer designs was limited, even though it showed what electronic computing could do for military and intelligence work.

1946: ENIAC – The Speed Revolution

The unveiling of ENIAC in 1946 represented a dramatic leap forward in computational power. With its ability to perform 5,000 additions or 357 multiplications per second, ENIAC was roughly a thousand times faster than the electromechanical machines that came before it.

ENIAC’s versatility allowed it to be programmed for different tasks, including ballistics calculations and scientific research. However, programming the machine was a labor-intensive process that required manually setting switches and rewiring plugboard cables for each new task. This limitation highlighted the need for easier ways to program computers, which began to arrive within a few years with stored-program machines and, later, high-level programming languages.

Key Takeaways from the Timeline

The period from 1941 to 1946 saw rapid advancements in computing technology. Each milestone—whether it was the creation of the Z3, the operational success of Colossus, or the versatility of ENIAC—represented a step toward the sophisticated computing systems we use today.

The timeline of early computer inventions not only underscores the ingenuity of early pioneers but also highlights the collaborative nature of technological progress. These inventions were not standalone accomplishments but integral chapters in a larger story of innovation, experimentation, and evolution.

The Dawn of Computer Science Innovations

The emergence of computer science innovations marked a transformative period in the history of technology, shaping the foundations of modern computing. Visionary pioneers established theories, languages, and frameworks that enabled computers to go beyond hardware capabilities and become engines of problem-solving and creativity.

Alan Turing and the Universal Machine

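Alan Turing, often referred to as the father of computer science, laid the groundwork for theoretical computing with the Turing machine, introduced in his 1936 paper “On Computable Numbers.” This abstract device, a read/write head moving along a tape according to a table of rules, captures what it means to compute by a step-by-step procedure. Turing also showed that a single “universal” machine could simulate any other Turing machine when given that machine’s description as input, the core idea behind general-purpose, programmable computers.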
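A Turing machine is simple enough to simulate in a few lines: a tape of symbols, a head position, a current state, and a table of rules. The Python sketch below is a minimal simulator under those assumptions. The example rule table (adding 1 to a binary number) is a hypothetical illustration, not one of Turing’s own examples.

```python
from typing import Dict, Tuple

# (state, symbol read) -> (symbol to write, head move: -1 left / +1 right, next state)
Rules = Dict[Tuple[str, str], Tuple[str, int, str]]


def run_turing_machine(rules: Rules, tape: str, state: str = "start",
                       halt: str = "halt", blank: str = "_",
                       max_steps: int = 10_000) -> str:
    """Simulate a one-tape Turing machine; return the non-blank tape contents."""
    cells = dict(enumerate(tape))  # sparse, unbounded tape: position -> symbol
    head = 0
    steps = 0
    while state != halt:
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        symbol = cells.get(head, blank)
        write, move, state = rules[(state, symbol)]
        cells[head] = write
        head += move
        steps += 1
    if not cells:
        return ""
    lo, hi = min(cells), max(cells)
    return "".join(cells.get(i, blank) for i in range(lo, hi + 1)).strip(blank)


# Hypothetical example: add 1 to a binary number, head starting on its leftmost digit.
INCREMENT: Rules = {
    ("start", "0"): ("0", +1, "start"),  # scan right to the end of the number
    ("start", "1"): ("1", +1, "start"),
    ("start", "_"): ("_", -1, "carry"),  # step back onto the last digit
    ("carry", "1"): ("0", -1, "carry"),  # 1 + carry = 0, carry moves left
    ("carry", "0"): ("1", -1, "halt"),   # 0 + carry = 1, done
    ("carry", "_"): ("1", -1, "halt"),   # ran off the left end: new leading 1
}

print(run_turing_machine(INCREMENT, "1011"))  # prints 1100
```

In a loose sense the simulator plays the role of Turing’s universal machine: it never changes, and the behaviour comes entirely from the rule table passed in as data.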

Turing’s work extended to practical applications during World War II with his contributions to breaking the Enigma code. His design for the British Bombe, which built on earlier Polish codebreaking work, was pivotal in deciphering German military communications, showcasing how computational principles could solve real-world problems. After the war, Turing designed the Automatic Computing Engine (ACE), and his ideas influenced the development of stored-program computers, a critical advancement in early computer inventions.

John von Neumann and the Architecture of Modern Computing

John von Neumann described the architecture that remains the basis of most computers today in his 1945 “First Draft of a Report on the EDVAC.” Known as the von Neumann architecture, it specifies that a computer’s data and program instructions are stored in the same memory. The stored-program idea, which also owed much to ENIAC designers J. Presper Eckert and John Mauchly, made computers more versatile and efficient, as it eliminated the need for manual rewiring for each new task.
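The stored-program idea is easiest to see in a toy machine where instructions and data are just numbers in one memory array, and a fetch-decode-execute loop reads instructions from that memory. The Python sketch below is hypothetical throughout: the opcode set, the `opcode * 100 + address` encoding, and the example program are invented for illustration and do not reproduce EDVAC or any real machine.

```python
from __future__ import annotations

# Hypothetical opcodes for an illustrative single-accumulator machine.
HALT, LOAD, ADD, SUB, STORE, JNZ = range(6)


def run(memory: list[int], max_steps: int = 10_000) -> list[int]:
    """Run a fetch-decode-execute loop over one memory holding code and data.

    Each instruction is encoded as opcode * 100 + address.
    Returns the final memory contents; the input list is not modified.
    """
    mem = memory[:]
    acc, pc = 0, 0
    for _ in range(max_steps):
        opcode, addr = divmod(mem[pc], 100)  # fetch and decode
        pc += 1
        if opcode == HALT:
            return mem
        elif opcode == LOAD:
            acc = mem[addr]
        elif opcode == ADD:
            acc += mem[addr]
        elif opcode == SUB:
            acc -= mem[addr]
        elif opcode == STORE:
            mem[addr] = acc
        elif opcode == JNZ:  # jump if the accumulator is not zero
            if acc != 0:
                pc = addr
        else:
            raise ValueError(f"unknown opcode {opcode}")
    raise RuntimeError("program did not halt within max_steps")


# Program (addresses 0-7) and data (addresses 20-22) share one memory.
# It adds n + (n - 1) + ... + 1 into the total at address 21.
program = [121, 220, 421,  # total = total + n
           120, 322, 420,  # n = n - 1
           500,            # if n != 0, jump back to address 0
           0]              # halt
memory = program + [0] * 12 + [10, 0, 1]  # n = 10, total = 0, constant 1

print(run(memory)[21])  # prints 55
```

Giving this machine a different task means writing different numbers into memory rather than rewiring anything; because instructions are ordinary data, a program could in principle even modify its own code.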

Von Neumann’s later work on self-reproducing automata and his comparisons of computers with the human brain also influenced early thinking about artificial intelligence and algorithmic problem-solving. His vision exemplifies the seamless integration of hardware and software that defines computer science innovations.

The Development of Early Programming Languages

As computers became more sophisticated, the need for efficient programming tools grew. Early languages like FORTRAN (1957) and COBOL (1959) were designed to make programming more accessible and to expand the range of applications for computers.

- FORTRAN (Formula Translation) was created for scientific and engineering computations. It enabled researchers to write programs that solved complex equations and simulations, revolutionizing fields like aerodynamics and physics.

- COBOL (Common Business-Oriented Language) catered to business applications, facilitating tasks like payroll and inventory management. Its English-like syntax was intended to make programs easier for non-specialists to read and write.

These early languages bridged the gap between humans and machines, transforming computers from specialized tools into multipurpose devices that could address a wide array of challenges.

The dawn of computer science innovations was characterized by groundbreaking theoretical and practical advancements. Visionaries like Turing and von Neumann not only redefined what computers could do but also laid the intellectual foundation for future technologies, from artificial intelligence to cloud computing.

Inventions That Shaped Modern Computing

The transition from early computing systems to modern technology was fueled by groundbreaking inventions that transformed the landscape of computing. These innovations, spanning hardware and software, introduced the capabilities that define today’s digital world.

Integrated Circuits and Miniaturization (1960s)

The integrated circuit, invented independently by Jack Kilby at Texas Instruments (1958) and Robert Noyce at Fairchild Semiconductor (1959), marked a seismic shift in computing technology. By combining multiple electronic components on a single piece of semiconductor (silicon, in Noyce’s design), integrated circuits revolutionized hardware design, making computers smaller, faster, and more reliable.

This innovation spurred the development of compact computing devices, enabling the transition from room-sized mainframes to smaller minicomputers and, eventually, desktop machines. Integrated circuits also laid the groundwork for the microprocessor, which would later define personal computing. The history of the integrated circuit is a testament to the power of miniaturization and its role in democratizing technology.

The Invention of the Microprocessor (1971)

The microprocessor, often referred to as the “brain” of the computer, was a pivotal invention in computing history. Intel’s 4004 microprocessor, introduced in 1971, integrated all the functions of a central processing unit (CPU) onto a single chip.

The significance of this invention is hard to overstate. It not only made computers more affordable and accessible but also enabled the development of portable devices, including laptops and smartphones. The microprocessor was a catalyst for the personal computing revolution, empowering individuals and businesses to leverage computational power in unprecedented ways.

The Shift from Mainframes to Personal Computers (1970s)

The 1970s witnessed the emergence of personal computers (PCs), marking a shift from centralized mainframes to decentralized computing. Companies like Apple, IBM, and Commodore developed user-friendly PCs that brought computing into homes and small businesses.

Key milestones of this era, which extended into the early 1980s, included:

- The release of the Altair 8800 in 1975, often considered the first commercially successful personal computer.

- The introduction of the Apple II in 1977, which offered color graphics and attracted a large software ecosystem, including the VisiCalc spreadsheet (1979).

- The launch of the IBM PC in 1981, which set industry standards and solidified the PC market.

This shift transformed computing from a specialized field to a ubiquitous part of daily life, fueling the rise of software applications, gaming, and early networking technologies.

The inventions of integrated circuits, microprocessors, and personal computers reshaped the computing landscape, making technology more accessible and impactful. These milestones not only addressed the limitations of early computing systems but also created new possibilities, from personal productivity to global connectivity.

Societal and Scientific Impact

The early inventions in computing and the innovations in computer science have left a profound legacy on society and science. These advancements provided the foundation for modern technologies that have reshaped industries, enhanced communication, and improved quality of life.

The Path to Artificial Intelligence (AI)

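The theoretical frameworks established by pioneers like Alan Turing and John von Neumann laid the groundwork for AI. Turing’s 1950 paper “Computing Machinery and Intelligence” asked whether machines could think and proposed the imitation game now known as the Turing test. Later innovations, such as machine learning algorithms and neural networks, pursued this vision of machines capable of learning and making decisions.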

Today, AI powers technologies such as:

- Virtual Assistants (e.g., Siri, Alexa) that perform everyday tasks.

- Predictive Analytics in medicine, improving diagnostics and treatments.

- Autonomous Vehicles, transforming transportation.

These applications build on early computer innovations, which made it possible for machines to perform tasks once thought to require human intelligence. The journey from early computers to AI underscores the transformative power of those first inventions.

Cloud Computing and Data Storage

Cloud computing, a staple of modern IT infrastructure, evolved from the mainframe systems of the 1960s. Early experiments in time-sharing, where multiple users could access a single machine, provided the conceptual basis for today’s cloud platforms.
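Time-sharing worked by giving each user’s job a short slice of processor time in turn, fast enough that every user seemed to have the machine to themselves. The Python sketch below shows that idea with simple round-robin scheduling. Real time-sharing systems used more elaborate schedulers, and the user names, work units, and time quantum here are hypothetical.

```python
from __future__ import annotations

from collections import deque


def round_robin(jobs: dict[str, int], quantum: int) -> list[tuple[str, int]]:
    """Simulate time-sharing: each job runs for up to `quantum` units in turn.

    jobs maps a (hypothetical) user name to the total work that user needs.
    Returns the schedule as a list of (user, units_run) time slices.
    """
    queue = deque(jobs.items())
    schedule = []
    while queue:
        user, remaining = queue.popleft()
        run_for = min(quantum, remaining)
        schedule.append((user, run_for))
        if remaining > run_for:
            # Unfinished jobs go to the back of the line for another turn.
            queue.append((user, remaining - run_for))
    return schedule


print(round_robin({"alice": 5, "bob": 2, "carol": 3}, quantum=2))
# prints [('alice', 2), ('bob', 2), ('carol', 2), ('alice', 2), ('carol', 1), ('alice', 1)]
```

Sharing one expensive machine among many users in this way is the conceptual ancestor of the pooled, on-demand resources that cloud platforms provide today.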

Modern cloud solutions have revolutionized:

- Business Operations, enabling remote work and global collaboration.

- Data Accessibility, with services like Google Drive and Dropbox.

- Scientific Research, facilitating the storage and analysis of vast datasets.

The shift from local storage to cloud computing highlights the enduring influence of computer science innovations in enabling new possibilities.

The Internet Revolution

The origins of the internet can be traced back to early networking experiments such as ARPANET, which began operating in 1969. By connecting computers across distances, researchers laid the groundwork for the internet, on which Tim Berners-Lee later built the World Wide Web, first proposed in 1989.

Key societal impacts include:

- Global Communication, fostering instant connectivity.

- E-commerce, transforming how people shop and conduct business.

- Knowledge Sharing, with platforms like Wikipedia and online courses democratizing education.

The internet has become an essential part of modern life, exemplifying the societal reach of early computing technologies.

The societal and scientific impacts of early computing are immeasurable. From AI and cloud computing to the internet revolution, these innovations have transformed industries, enhanced human productivity, and opened new frontiers in science and technology.

Conclusion

The journey through the history of early computer inventions and computer science innovations reveals a story of vision, perseverance, and transformation. These pioneering efforts have laid the foundation for the digital age, enabling groundbreaking advancements and reshaping human life.

Summary of Key Points

- Precursors to Modern Computing introduced foundational tools like the abacus and concepts like Babbage’s Analytical Engine.

- Key Innovations (1940s–1960s) showcased milestones like the ENIAC and the invention of transistors.

- The Early Computer Timeline provided a chronological perspective on transformative developments.

- Computer Science Innovations highlighted contributions from pioneers like Turing and von Neumann, as well as the emergence of programming languages.

- Inventions That Shaped Modern Computing demonstrated the significance of integrated circuits, microprocessors, and the rise of personal computers.

- Societal and Scientific Impact illustrated how these technologies revolutionized industries, inspired AI, and fueled the internet age.

Legacy of Early Computing Pioneers

The contributions of early computing pioneers have left an indelible mark on history. Their vision and ingenuity continue to inspire new generations of innovators, driving advancements in artificial intelligence, quantum computing, and beyond.

Explore related articles to delve deeper into the fascinating history and advancements in technology. From the rise of AI to the future of computing, there’s a wealth of knowledge waiting to be discovered.