🔍 The Mind-Blowing Power of Teaching AI the Unknown

Imagine showing a child a picture of a unicorn. Now imagine that, without ever seeing a dragon, the child could recognize one just because you told them it breathes fire and has wings. Sounds magical, right?

This is exactly what Zero-Shot Learning (ZSL) does in the world of artificial intelligence.

Unlike traditional AI models that rely on hundreds or thousands of labeled examples, Zero-Shot Learning allows machines to understand and categorize things they’ve never seen before. Using high-level descriptions, semantic embeddings, and language models, ZSL teaches AI how to make intelligent guesses about entirely new classes of data.

In this post, we’ll dive deep into what zero-shot learning is, break down how it works, why it’s transforming industries like computer vision and NLP, and what the future holds.

If you’ve ever wondered how AI can handle categories it has no examples of, this guide walks through zero-shot learning step by step.

🧠 What is Zero-Shot Learning? (And Why It’s Revolutionary)

At its core, zero-shot learning (ZSL) is a machine learning technique where a model can identify or classify data it has never seen before — without being explicitly trained on that specific category. If that sounds impossible, think of it like this:

You’ve never seen a “zebra-dolphin,” but if someone tells you it has fins like a dolphin and stripes like a zebra, you could probably imagine it. That’s how zero-shot learning works — the AI uses semantic descriptions rather than direct examples.

🧠 The Zero-Shot Learning Definition

In plain terms, zero-shot learning means a model recognizes a new class from a description or language-based prompt rather than from labeled examples of that class. Instead of feeding an AI thousands of labeled dog photos, we describe the attributes of a dog and let the model, which has already learned those attributes from other classes, infer what a dog likely looks like.
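The attribute idea can be made concrete with a toy sketch. Everything here is an illustrative assumption: the attribute names, the hand-written class descriptions, and the `observed` scores, which stand in for the output of an attribute detector trained only on seen classes. It is not a description of any particular ZSL system.

```python
# Toy attribute-based zero-shot classifier (all names and values are illustrative).
ATTRIBUTES = ["has_stripes", "has_fins", "lives_in_water", "has_hooves"]

# Hand-written class descriptions, one value per attribute above.
# "zebra_dolphin" is the unseen class: it has a description but no examples.
CLASS_DESCRIPTIONS = {
    "zebra": [1, 0, 0, 1],
    "dolphin": [0, 1, 1, 0],
    "zebra_dolphin": [1, 1, 1, 0],
}

def classify(predicted_attributes, descriptions):
    """Return the class whose description is closest to the predicted attributes."""
    def squared_distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(
        descriptions,
        key=lambda name: squared_distance(predicted_attributes, descriptions[name]),
    )

# Pretend an attribute detector produced these scores for a new image.
observed = [0.9, 0.8, 0.7, 0.1]
print(classify(observed, CLASS_DESCRIPTIONS))
```

The classifier never sees an example of the new class. It only needs the description, which is why the quality of that description matters so much.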

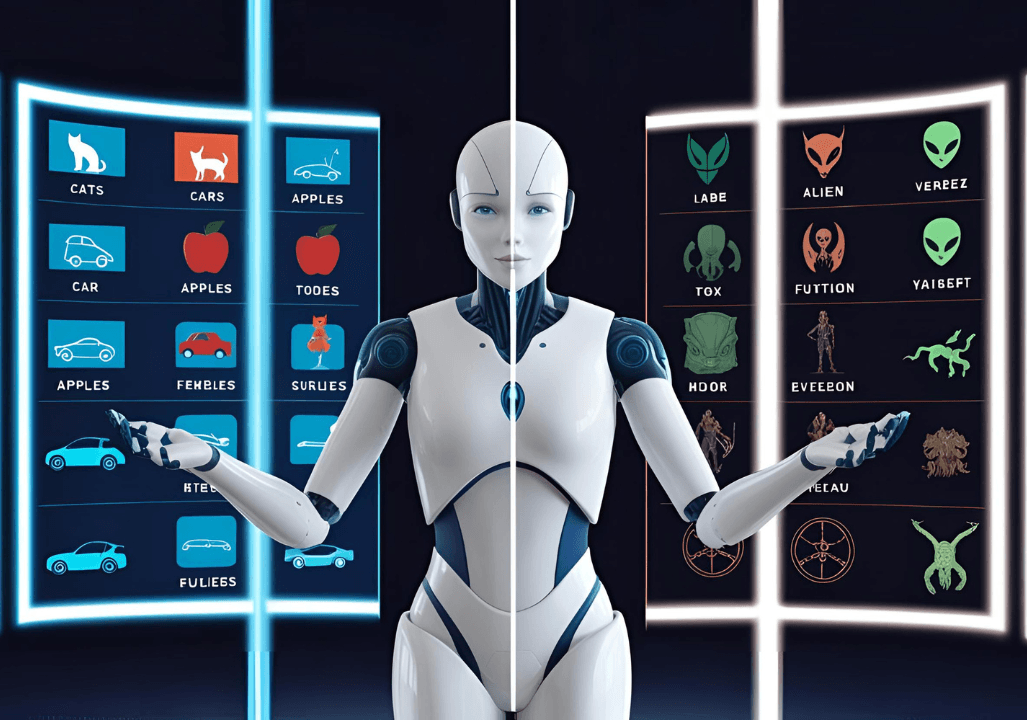

🔍 ZSL vs. Traditional Supervised Learning

In supervised learning, the AI must learn from labeled datasets — tons of examples with clear tags like “cat,” “car,” or “tree.” In contrast, zero-shot learning enables AI to generalize from previously learned information to recognize new, unseen categories.

This approach is revolutionary in fields like natural language processing (e.g., GPT-4) and computer vision (e.g., OpenAI’s CLIP model) where the model uses semantic embedding — mapping words, images, and concepts into a shared space for intelligent reasoning.

The real power of zero-shot learning lies in its ability to scale AI in data-scarce environments. In medicine, space exploration, or rare language analysis, ZSL eases the bottleneck of labeled data, enabling capable systems with far less task-specific training.

In short, zero-shot learning isn’t just a technical innovation — it’s a gateway to AI that learns like we do: through meaning, not memory.

📸 Zero-Shot Learning Examples: From Language to Vision

Zero-shot learning may sound futuristic, but it’s already embedded in the technologies we use every day — from smart assistants to image recognition tools and beyond. Below are several real-world zero-shot learning examples that illustrate how this concept is quietly transforming artificial intelligence.

🖼️ Visual AI: Image Classification ZSL in Action

Traditional image classifiers need thousands of labeled photos to recognize a cat or a tree. But with zero-shot learning, a model like OpenAI’s CLIP can identify completely new objects based on text prompts alone. For example:

You tell it: “A photo of a furry animal with a black nose and round ears sitting in a tree.”

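Shown a koala photo alongside a handful of candidate descriptions, the model can rank this description highest and label the animal correctly, even though it was never given a labeled “koala” training class.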

This ability to connect textual attributes with visual features enables image classification ZSL to thrive in scenarios where annotated images are rare or unavailable — such as identifying endangered species, classifying medical scans, or analyzing satellite imagery.
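The matching step behind this kind of image classification can be sketched in a few lines. The code below is a simplified illustration, not CLIP itself: the three-dimensional vectors are made up and stand in for the outputs of real image and text encoders, and the `temperature` value is an arbitrary assumption.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def zero_shot_scores(image_vec, prompt_vecs, temperature=0.1):
    """Softmax over cosine similarities between one image and each candidate prompt."""
    sims = {label: cosine(image_vec, vec) / temperature for label, vec in prompt_vecs.items()}
    peak = max(sims.values())  # subtract the max for numerical stability
    exps = {label: math.exp(s - peak) for label, s in sims.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}

# Made-up embeddings standing in for real encoder outputs.
prompt_vecs = {
    "a photo of a koala": [0.9, 0.1, 0.2],
    "a photo of a cat": [0.2, 0.9, 0.1],
    "a photo of a tree": [0.1, 0.2, 0.9],
}
image_vec = [0.8, 0.2, 0.3]

scores = zero_shot_scores(image_vec, prompt_vecs)
best = max(scores, key=scores.get)
print(best)
```

Adding a new class here means adding one more text prompt to `prompt_vecs`. No image of that class is needed at classification time.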

🧠 Zero-Shot NLP: Smarter Language Models

In the world of natural language processing (NLP), zero-shot NLP allows AI models like GPT-4 to perform tasks like:

- Sentiment analysis on niche topics (e.g., detecting sarcasm in posts about climate change)

- Content classification in technical fields it wasn’t trained on

- Topic detection in never-seen-before writing styles or formats

This is especially useful in industries like finance, healthcare, and law, where labeled datasets are expensive or sensitive.
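With a language model, zero-shot classification often comes down to how the request is phrased. The sketch below shows one possible way to build such a prompt and read back the answer. The function names and prompt wording are hypothetical, and the call to the model itself is deliberately left out because it depends on whichever provider or library is used.

```python
def build_zero_shot_prompt(text, labels):
    """Format a classification request that contains no labeled examples."""
    options = ", ".join(labels)
    return (
        f"Classify the text into exactly one of: {options}.\n"
        f"Text: {text}\n"
        "Label:"
    )

def parse_label(response, labels):
    """Map a free-form model reply back onto one of the allowed labels, or None."""
    cleaned = response.strip().lower()
    for label in labels:
        if cleaned.startswith(label.lower()):
            return label
    return None

labels = ["positive", "negative", "sarcastic"]
prompt = build_zero_shot_prompt("Oh great, another record heatwave.", labels)
print(prompt)

# A reply such as " Sarcastic.\n" would be normalized to "sarcastic".
print(parse_label(" Sarcastic.\n", labels))
```

Returning `None` for an unrecognized reply keeps unexpected model output from being silently counted as a valid label.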

One striking potential zero-shot learning example in NLP is the translation of ancient languages. A model like GPT-4, using zero-shot reasoning, may be able to infer patterns in dead or under-researched dialects by mapping similarities to modern language structures, even without direct training on them.

🎤 Voice Assistants: Zero-Shot Learning in Everyday Use

If you’ve asked your smart assistant a weird question like:

“Can I use lemon juice to charge a phone?”

… and still got a semi-useful answer, thank zero-shot learning. These systems often rely on zero-shot NLP to interpret new, contextually unfamiliar queries and respond accurately, despite no prior training on the exact phrasing or topic.

This capacity to generalize and infer meaning makes ZSL ideal for scaling AI to handle real-world conversations, not just pre-programmed responses.

⚖️ Zero-Shot Learning vs. Few-Shot Learning: Know the Difference

In the world of artificial intelligence, there’s a subtle but important distinction between zero-shot learning (ZSL) and few-shot learning (FSL). Both methods allow machines to make predictions or classifications with limited data, but they achieve this in different ways. Let’s dive deep into the difference between ZSL and FSL to clarify the key concepts and help you understand when and why you should use each.

What is Zero-Shot Learning (ZSL)?

In zero-shot learning, a machine is asked to recognize or classify a new class or concept without any prior examples. Instead, the model relies on semantic knowledge, which can be obtained from text descriptions, attributes, or related knowledge sources. Think of it as teaching AI through language or contextual information, rather than direct examples.

Example: Imagine asking an AI to classify a “tiger,” even though it has never seen a picture of one. It uses a description like: “A large wild animal with orange fur and black stripes.” Based on this description and its knowledge of similar animals, the AI can identify the tiger despite never having been shown a photo of it.

What is Few-Shot Learning (FSL)?

Unlike traditional methods, few-shot learning trains a model using only a limited set of labeled examples, often just a handful per category. Instead of thousands or millions of examples, only a few — typically ranging from 1 to 5 — are used. FSL is valuable in situations where data is scarce, but some examples can still be provided for training.

Example: Consider training an AI model to classify new slang terms in a language it’s unfamiliar with. With just 3–5 examples of how the slang is used in sentences, the model can quickly adapt to recognize and interpret new uses of that slang.

Table: Zero-Shot Learning vs Few-Shot Learning

| Feature | Zero-Shot Learning (ZSL) | Few-Shot Learning (FSL) |
|---|---|---|
| Training Data for New Class | 0 examples (relies on textual descriptions or attributes) | A small number of labeled examples (1-5 per class) |
| Learning Method | Uses semantic embeddings and contextual reasoning | Learns from a few labeled examples and prior knowledge |
| Real Example | AI identifying a "koala" from a textual description (no koala images) | AI understanding a new slang term after seeing it in 3 sentences |
| Analogies | Giving AI a description or general concept | Giving AI a few examples or samples |
| Use Case | Data is missing or difficult to obtain | You can afford a few examples but need a model that adapts quickly |
| Main Advantage | No need for labeled examples, just contextual understanding | Adaptation to new classes with minimal data |

Analogy: Teaching AI with Descriptions vs. Examples

To simplify, think of zero-shot learning as providing an AI with a detailed description of something it’s never encountered. For example, if you describe an elephant as “a large mammal with big ears, tusks, and a trunk,” the AI can recognize an elephant based on that description.

In few-shot learning, it’s like teaching a child something new by showing them several examples of it. If you showed a child a few pictures of a specific kind of bird, they could recognize that bird in the future. Similarly, the AI is shown a small number of labeled examples (like 3-5 images) to learn from.
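The description-versus-examples contrast can be shown with a nearest-prototype sketch. This is an illustration under stated assumptions, not a reference implementation: the two-dimensional vectors are invented, and in a real system the zero-shot prototypes would come from a text encoder while the few-shot examples would come from an image or sentence encoder.

```python
def mean_vector(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    count = len(vectors)
    return [sum(v[i] for v in vectors) / count for i in range(len(vectors[0]))]

def nearest(query, prototypes):
    """Return the class whose prototype is closest to the query vector."""
    def squared_distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(prototypes, key=lambda name: squared_distance(query, prototypes[name]))

# Zero-shot: each prototype is the (made-up) embedding of a class description.
zero_shot_prototypes = {
    "elephant": [0.9, 0.1],
    "sparrow": [0.1, 0.9],
}

# Few-shot: each prototype is the mean of a few labeled example embeddings.
few_shot_examples = {
    "elephant": [[0.8, 0.2], [1.0, 0.1], [0.9, 0.3]],
    "sparrow": [[0.2, 0.8], [0.1, 1.0], [0.3, 0.9]],
}
few_shot_prototypes = {name: mean_vector(vs) for name, vs in few_shot_examples.items()}

query = [0.7, 0.3]
print(nearest(query, zero_shot_prototypes), nearest(query, few_shot_prototypes))
```

The lookup is identical in both cases. The only difference is where the prototype comes from: a description for zero-shot, a handful of examples for few-shot.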

Transfer Learning: Where ZSL and FSL Meet

Both ZSL and FSL are forms of transfer learning, but in different ways. Transfer learning involves leveraging insights or patterns acquired from one task or domain to enhance performance in a different but related area. However, the level of “transfer” differs:

- Zero-shot learning is more abstract. The AI uses existing knowledge from semantics and language to transfer understanding to entirely new, unseen classes without specific examples.

- Few-shot learning requires some prior learning but makes minor adaptations using small sets of labeled data. It’s a transfer from a known domain to a new, but somewhat related, task or class.

When to Use Zero-Shot Learning vs. Few-Shot Learning

Each technique shines in different scenarios:

Zero-Shot Learning is ideal when:

- There’s no labeled data available for a new class or category.

- You need AI to generalize across completely new tasks that require reasoning based on semantic knowledge.

- Examples: Classifying rare species in wildlife conservation, identifying new diseases in medical diagnostics.

Few-Shot Learning is useful when:

- A few labeled examples exist but large datasets are still unattainable.

- You require AI systems that can rapidly adjust to new information without the need for time-consuming retraining processes.

- Examples: Adapting AI to recognize new product categories in e-commerce or detecting new slang words in a social media platform.

Choosing between zero-shot learning vs few-shot learning depends on the availability of data and the flexibility required. If data is scarce and you can provide descriptions or context, zero-shot learning is your go-to. If you can afford to provide a few examples, then few-shot learning can be your best bet for a quick, adaptive AI model.

🚀 Real-World Applications of Zero-Shot Learning

Zero-shot learning (ZSL) has emerged as a game-changer in the field of artificial intelligence, offering innovative solutions to problems that were once thought to be data-dependent. By using semantic knowledge rather than large datasets, ZSL enables AI models to identify, classify, and act on new information without the need for labeled examples. Here are some groundbreaking applications of zero-shot learning that are transforming industries and providing real-world utility.

📊 Data Labeling Automation

One of the most labor-intensive tasks in AI and machine learning is data labeling — the process of tagging data (e.g., images, text, or audio) so that models can learn from them. Traditional supervised learning demands a large amount of labeled data, which can be both expensive and time-intensive to generate.

Zero-shot learning eliminates the need for pre-labeled datasets by enabling models to automatically classify data based on semantic descriptions. For instance, AI can be trained to identify images of animals without needing prior examples of every single animal type. This automation helps accelerate AI development and reduce the costs associated with manual labeling.
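One way such automation could be wired up is to accept a zero-shot label only when the model is confident and to queue everything else for a person. The sketch below assumes a scoring function that returns label probabilities. `FAKE_SCORES` is a hard-coded stand-in for a real model, and the 0.8 threshold is an arbitrary illustrative choice.

```python
def auto_label(items, score_fn, threshold=0.8):
    """Split items into auto-accepted (item, label) pairs and a human-review queue."""
    accepted, review = [], []
    for item in items:
        scores = score_fn(item)
        label = max(scores, key=scores.get)
        if scores[label] >= threshold:
            accepted.append((item, label))
        else:
            review.append(item)
    return accepted, review

# Stand-in for a real zero-shot model: fixed label probabilities per item.
FAKE_SCORES = {
    "img_001": {"cat": 0.95, "dog": 0.05},
    "img_002": {"cat": 0.55, "dog": 0.45},
    "img_003": {"cat": 0.10, "dog": 0.90},
}

accepted, review = auto_label(list(FAKE_SCORES), FAKE_SCORES.get)
print(accepted)
print(review)
```

Raising the threshold sends more items to review and lowers the risk of wrong automatic labels, so the right value depends on how costly a labeling mistake is.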

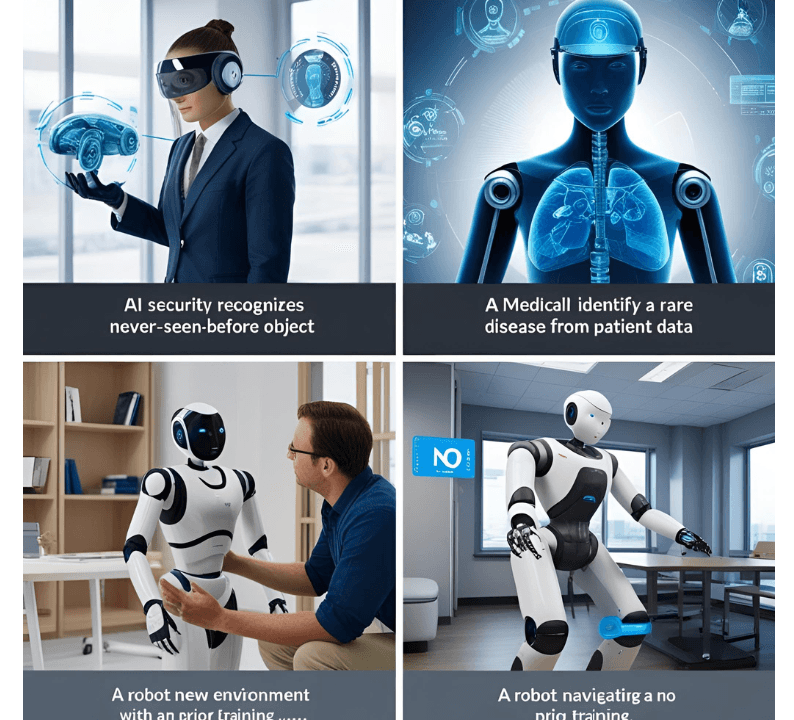

🧬 Medical Imaging & Rare Disease Detection

The medical field is one of the most promising areas for zero-shot learning, particularly when it comes to medical imaging and the diagnosis of rare diseases. AI models often struggle to identify diseases that lack sufficient training data. With ZSL, models can learn to detect diseases or abnormalities based on textual descriptions or medical research instead of requiring large datasets for every rare condition.

For example, ZSL models can identify patterns in X-rays or MRIs that might indicate a rare disease, even if no such case has been previously encountered. This is invaluable in early diagnosis and healthcare accessibility, especially in underfunded regions or where rare diseases are under-researched.

📷 Content Moderation in Unseen Categories (e.g., New Meme Trends)

The fast-paced nature of social media and digital content creation constantly introduces new trends, slang, and categories. Traditional content moderation systems rely on pre-labeled examples, making them ineffective against new, emerging trends (such as viral memes or images).

With zero-shot learning, AI can automatically moderate user-generated content by recognizing new trends or inappropriate content without explicit training on these new categories. This makes content moderation much more adaptable and scalable, especially for large social media platforms, where the volume and diversity of content are constantly evolving.

🗣️ Multilingual NLP without Specific Language Training

Natural language processing (NLP) is typically language-specific, with models needing separate training datasets for each language. Zero-shot learning can revolutionize multilingual NLP by enabling AI to understand and generate text in multiple languages without requiring extensive training on each individual language.

For example, a zero-shot learning model could translate a text from French to English, even if it has never seen French-to-English examples. This capability is game-changing for global businesses looking to scale across multiple regions and markets without the need for extensive language-specific models.

🧠 Zero-Shot Learning in Robotics: Interpreting Verbal Commands On-the-Fly

Zero-shot learning’s applications are also transforming the field of robotics, particularly when it comes to interpretation of verbal commands. Imagine a robot in a warehouse environment: traditionally, robots would need to be specifically trained on every possible command they might encounter. With ZSL, robots can understand and execute commands based on context and generalized descriptions.

For instance, a user could tell a robot to “pick up the red box from the table” even if the robot has never been trained on that specific phrase. The robot can interpret the request using contextual reasoning from its training data and carry out the task. This makes robots much more flexible and adaptable, capable of learning new tasks without requiring reprogramming or retraining for every new instruction.

Zero-shot learning holds a wide range of transformative and groundbreaking applications. From automating tedious tasks like data labeling to empowering robots to act on the fly, ZSL is pushing the boundaries of what AI can achieve. As we continue to develop more semantic models and generalized learning algorithms, the use cases for zero-shot learning will only expand, making AI more efficient, adaptable, and scalable than ever before. This approach not only accelerates the development of AI systems but also enhances their practical utility in real-world applications across industries.

🧠💡The Future of Zero-Shot Learning

Zero-shot learning (ZSL) isn’t just the next frontier of AI; it’s the key to a world where artificial intelligence can think, act, and learn without constraints. Exploring the future of zero-shot learning allows us to expand the limits of creativity, revealing an endless realm of potential. In this section, we’ll venture into the visionary potential of open-ended learning and how ZSL might change the way we interact with the world and beyond.

🧬 Zero-Shot Bioscience: AI Diagnosing Alien Biology on Other Planets

Imagine a future where space exploration brings humanity to distant planets, each with its own unique ecosystem and biology. Now, imagine an AI that can understand alien life forms—life that no human has ever encountered. Zero-shot learning could enable AI to identify and diagnose alien biology by drawing on descriptions or generalized concepts about life on Earth.

For example, an AI trained to recognize carbon-based life on Earth could use ZSL to analyze an alien organism made of entirely different molecular structures. By leveraging semantic understanding and biological theories, AI could classify and understand these new lifeforms, even in the absence of prior knowledge. This could revolutionize the field of astrobiology, allowing scientists to explore distant planets without waiting for lengthy training cycles on new organisms.

🤖 Autonomous ZSL Bots: Learning to Navigate Unknown Terrain with Just Text Prompts

In today’s robotics, most machines rely on large datasets to navigate specific environments. But what if we could program robots to navigate entirely unknown environments with just a simple textual prompt? Zero-shot learning opens the door for robots to understand the basic concepts of “walking on sand,” or “crossing a river,” based on descriptive input alone, without the need for pre-programmed data.

Imagine a robot exploring new planets or performing tasks in disaster recovery zones, learning to handle unknown terrain by processing instructions and adapting its actions in real time. As we move towards autonomous zero-shot bots, ZSL could provide a method for robots to explore, learn, and adapt to unpredictable environments simply by understanding the linguistic and conceptual cues we give them.

🧠 Zero-Shot Emotional Intelligence: AI Understanding New Human Expressions or Slang in Real-Time

Humans constantly evolve their ways of communicating, whether through emojis, slang, or cultural references. For AI to be truly effective in human-centric environments, it must adapt to these dynamic modes of communication. Zero-shot learning could allow AI to pick up on emotional cues and linguistic nuances, even when it has never encountered them before.

For instance, a ZSL-powered AI might easily detect new emotional expressions such as a smirk or a gesture that represents sarcasm, even if it has never seen that specific combination before. It could also learn the meaning of new slang terms or phrases, like “ghosting” or “flexing,” without explicit training. As AI systems evolve, zero-shot emotional intelligence will enable them to engage with humans in a more natural, empathetic manner, making human-AI interactions smoother and more effective.

🔮 Future Language Creators: AI that Invents and Understands Entirely New Languages on the Fly

What if AI could not only understand every existing language but also create and learn entirely new languages? Zero-shot learning paves the way for AI to develop and understand languages that have never been conceived by humans, simply by drawing on patterns and semantic relationships in its environment.

In the distant future, an AI could generate and translate entirely new languages as needed—languages designed for new human communities, alien species, or advanced technological systems. For example, a colony on Mars may require a new form of communication suited to its environment, and ZSL could enable AI to invent and understand this language on the fly. Similarly, as AI generalization advances, models may create languages for more effective communication between robots or with humans, all driven by semantic frameworks learned through zero-shot learning.

Zero-shot learning is not only revolutionizing how AI understands data—it’s creating new pathways for human-AI interaction, robot autonomy, and even intergalactic communication. From diagnosing alien biology to understanding real-time human emotions, the future of zero-shot learning is limitless, shaped by both scientific advances and our wildest imaginations. As we continue to explore open-ended learning, we may unlock capabilities in AI that far exceed current expectations.

⚠️ Limitations and Challenges of ZSL Today

While zero-shot learning (ZSL) presents remarkable advancements, it still faces significant limitations that hinder its broader application today. These challenges primarily stem from the inherent nature of AI generalization, which requires very high-quality semantic information to function effectively. Let’s explore the main roadblocks:

- Requires High-Quality Semantic Information:

For ZSL to function optimally, AI must be provided with highly accurate semantic descriptions of unseen classes or concepts. Poor-quality, missing, or misleading data can significantly undermine the effectiveness of zero-shot learning models. Without detailed, well-defined descriptions, the AI may misclassify or fail to identify new concepts.

- Struggles with Ambiguous or Abstract Classes:

ZSL models have difficulty with abstract or vague concepts. AI systems excel at identifying well-defined, clear-cut categories, but when they encounter abstract ideas, such as emotions or complex societal constructs, they can struggle. The lack of clear distinctions between similar classes makes it challenging for ZSL to maintain accuracy in dynamic, real-world settings.

- Domain Generalization Limits:

One of the core challenges of zero-shot AI is its struggle to transfer knowledge across domains effectively. For instance, a model trained to recognize objects in images may not easily apply that knowledge to text-based tasks or other modalities (e.g., speech). This domain generalization issue limits ZSL’s ability to seamlessly function across diverse fields.
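The ambiguity problem in particular is easy to see in the scores a zero-shot model produces. A simple, hedged way to surface it is to look at the gap between the two highest-scoring classes. The helper name and the example probabilities below are made up for illustration.

```python
def top_two_margin(scores):
    """Gap between the best and second-best class scores; small gaps signal ambiguity."""
    ranked = sorted(scores.values(), reverse=True)
    return ranked[0] - ranked[1]

# Illustrative probabilities: a clear-cut class versus overlapping abstract classes.
clear_case = {"koala": 0.90, "cat": 0.06, "tree": 0.04}
ambiguous_case = {"irony": 0.36, "sarcasm": 0.34, "sincerity": 0.30}

print(round(top_two_margin(clear_case), 2))
print(round(top_two_margin(ambiguous_case), 2))
```

A small margin does not prove the prediction is wrong, but it is a cheap signal that the class descriptions overlap and may need to be sharpened.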

Despite these limitations, zero-shot learning continues to evolve and show promise. As we address these challenges, the potential for AI systems that can generalize across different domains without direct training remains immense.

✅ Conclusion: The Gateway to General AI?

Zero-shot learning (ZSL) has showcased its transformative potential, from enhancing AI’s ability to recognize objects and understand language to pioneering new frontiers in robotics and bioscience. However, its true promise may lie in paving the way toward artificial general intelligence (AGI): AI that can learn and adapt to any new task without extensive retraining.

But what would it take to make a machine learn truly anything, with nothing? The answer lies in overcoming the challenges currently faced by ZSL and continuing to refine the underlying models. With more advancements in semantic understanding and cross-domain learning, ZSL might eventually unlock a new era of universal AI, capable of responding to the complexities of the world in ways we’ve never imagined. The journey is long, but we’re closer than ever.
