Explainable AI for Beginners

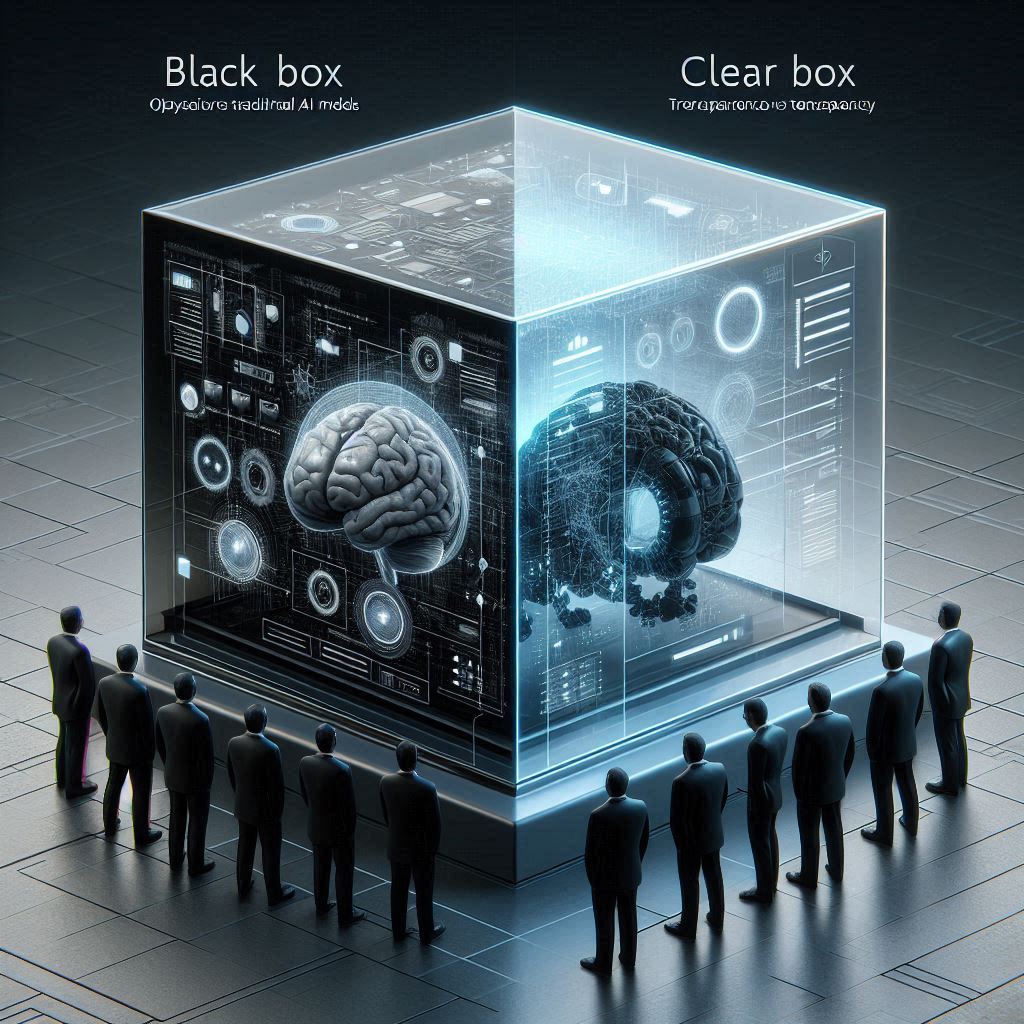

Imagine you have a super cool friend named AI who can do amazing things, like recommend movies you’d love or even write a funny poem. But sometimes, AI makes decisions that seem like a mystery. This is because some AI models work like a magic box – they take things in (data) and give things out (predictions), but it’s hard to see exactly what happens inside.

This is where XAI comes in! XAI stands for Explainable Artificial Intelligence, and it’s like giving AI a see-through window. With XAI, we can understand how AI makes its choices, just like understanding how your friend AI came up with that perfect movie pick.

Let’s explore Explainable AI for beginners: how it works, its benefits, its limitations, and its future, including techniques such as LIME.

Here’s why XAI is important:

Trust: If we don’t understand how AI works, it’s hard to trust its decisions. XAI helps us see what’s going on inside the magic box, building trust in AI for things like loan approvals or medical diagnoses.

Fairness: Sometimes, AI decisions can be unfair, like accidentally recommending only action movies to men and comedies to women. XAI helps us catch these biases and make AI fairer for everyone.

Better AI: By understanding how AI works, we can improve it! XAI helps us fix mistakes and make AI even smarter.

Now, let’s explore how XAI peeks inside the magic box and explains AI’s decisions. We’ll dive deeper into XAI in the next parts, but for now, you can imagine XAI as a tool that helps us see the steps AI takes to reach its conclusions.

Demystifying the Black Box: Why AI Decisions Can Be a Mystery

Remember our super cool friend AI from before? Well, sometimes AI acts like a magic box, especially when it comes to making decisions. Here’s why:

Imagine a Weather Wizard:

Think of a weather prediction model as a type of AI. It takes a bunch of information – like temperature, wind speed, and humidity (like data for AI) – and predicts if it will be sunny or rainy tomorrow (like an AI prediction).

The problem? The weather model is like a black box. It crunches all that data through complex calculations (like AI algorithms), but it’s hard to understand exactly how it arrives at its prediction. We just see the final answer (sunny or rainy) without knowing the steps the model took to get there.

This is the “black box” effect in AI. Many AI models are like this weather wizard – they take data in, process it, and give an answer, but the inner workings are a mystery. This can be a problem for a few reasons:

- Trust Issues: If we don’t understand how AI makes decisions, it’s hard to trust those decisions. Would you trust a weatherman who just said “rain” without explaining why?

- Fairness Check: Sometimes, black box AI can be biased without us realizing it. Imagine the weatherman always predicts rain for picnics but sunshine for soccer games! XAI helps us check for these biases and make AI fair for everyone.

The good news? Just like we can learn how weather models work with some effort, XAI (Explainable Artificial Intelligence) helps us see inside the magic box of AI and understand how it makes its decisions. In the next section, we’ll explore how XAI works to shed light on the mysterious world of AI!

Core Concepts of XAI: Lifting the Lid on the Magic Box

Now that we understand the “black box” issue with some AI models, let’s explore XAI (Explainable Artificial Intelligence) and how it helps us see inside. Here, we’ll encounter some new terms, but don’t worry, they’re all about understanding AI better!

XAI’s Three Keys: Interpretability, Explainability, and Transparency

Imagine you have a delicious cake recipe. Here’s how XAI relates to understanding recipes (and AI):

Interpretability: This is like having a recipe that’s super easy to follow, with clear steps and simple ingredients. An interpretable AI model is easy to understand because its decision-making process is clear and straightforward. (Think of a simple recipe with only a few ingredients.)

Explainability: This is like having a recipe with some complex steps, but the instructions explain why each step is important. An explainable AI model might be more intricate, but XAI tools can help explain its decisions even if the inner workings are complex. (Think of a recipe with more steps, where each step comes with an explanation.)

Transparency: This is like being able to see all the ingredients and steps involved in making the cake. A transparent AI model allows us to see not only the final decision but also the data it used and the process it followed to reach that decision.

Interpretable vs. Explainable AI: What’s the Difference?

Not all AI models are created equal. Here’s a quick breakdown:

Interpretable AI Models: These are the “easy-to-follow recipe” models. They’re often simpler and easier to understand because their decision-making process is clear from the start. For example, a basic spam filter that identifies spam emails based on keywords might be interpretable.

Explainable AI Models: These are like the “complex recipe with explanations” models. They might be more intricate, but XAI tools can help us understand their decisions even if the inner workings are difficult to grasp directly. For example, a complex image recognition AI might be explainable – XAI can show us which parts of the image the AI focuses on to identify an object.
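To make the “easy-to-follow recipe” idea concrete, here is a minimal Python sketch of a keyword-based spam filter. The keyword list, the threshold, and the function name `classify_email` are all made up for illustration, and real spam filters are more involved. The point is that an interpretable model’s answer comes with its own explanation: the exact keywords it matched.

```python
# Hypothetical keyword list and threshold, chosen only for this illustration.
SPAM_KEYWORDS = {"free", "winner", "prize", "urgent"}
SPAM_THRESHOLD = 2  # flag as spam when at least this many keywords appear


def classify_email(text):
    """Return (is_spam, matched_keywords) so the decision explains itself."""
    # Lowercase each word and strip common punctuation before matching.
    words = {word.strip(".,!?:;").lower() for word in text.split()}
    matched = sorted(words & SPAM_KEYWORDS)
    return len(matched) >= SPAM_THRESHOLD, matched


is_spam, reasons = classify_email("URGENT: you are a winner! Claim your free prize.")
print(is_spam, reasons)
```

Because the whole rule fits in a few lines, nobody needs an extra XAI tool to see why an email was flagged.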

In the real world, many AI models fall somewhere in between. XAI helps us explain even complex models, making AI decision-making more transparent. In the next section, we’ll dive into how XAI actually works its magic!

How Does XAI Work?

Now that we understand the need for XAI and the core concepts, let’s see how XAI actually works its magic! Here, we’ll explore some common XAI techniques that help us peek inside the AI’s magic box and understand its decisions.

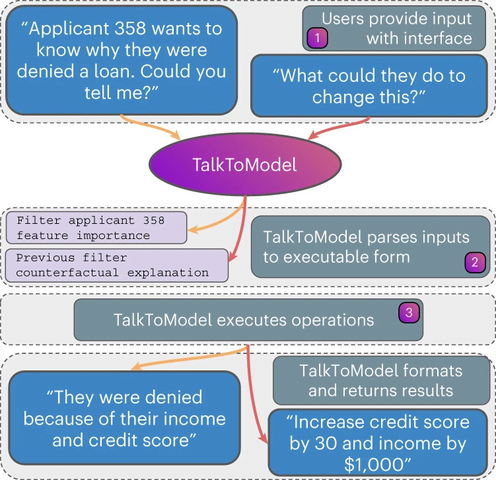

Feature Importance: Ranking the Ingredients

Imagine you’re baking a cake. XAI’s “Feature Importance” technique is like figuring out which ingredients have the biggest impact on the final cake. Here’s how it works:

- AI Models Use Many Ingredients (Data): Just like a cake recipe uses various ingredients, AI models use a lot of data points (features) to make decisions. These features can be anything from numbers to text.

- XAI Ranks the Impact: XAI tools analyze how much each data point (feature) affects the AI’s decision. They identify the features that have the biggest influence, like flour being more important than sprinkles for the cake’s structure.

Example: An AI loan approval system might use many factors to decide on a loan. XAI’s Feature Importance can reveal that income history and credit score are the most impactful features for loan approval, like flour and sugar being key for a cake.
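One common way to rank the ingredients is to shuffle one feature at a time and see how often the model changes its mind. The sketch below is a simplified take on that idea (often called permutation importance). Everything in it is an assumption for illustration: `loan_model` is a tiny stand-in for a real black box, the applicants are randomly generated, and `shoe_size` is included on purpose as a feature the model ignores.

```python
import random

FEATURES = ["credit_score", "income", "shoe_size"]


def loan_model(applicant):
    """Stand-in black box: approves when a weighted score clears a threshold."""
    credit_part = 0.7 * applicant["credit_score"] / 850
    income_part = 0.3 * min(applicant["income"] / 100_000, 1.0)
    return credit_part + income_part >= 0.6  # shoe_size is deliberately ignored


def permutation_importance(model, rows, feature, rng):
    """Share of predictions that flip when one feature's values are shuffled."""
    original = [model(row) for row in rows]
    shuffled = [row[feature] for row in rows]
    rng.shuffle(shuffled)
    flipped = sum(
        model({**row, feature: value}) != before
        for row, value, before in zip(rows, shuffled, original)
    )
    return flipped / len(rows)


rng = random.Random(0)  # fixed seed so the run is repeatable
applicants = [
    {
        "credit_score": rng.randint(300, 850),
        "income": rng.randint(20_000, 120_000),
        "shoe_size": rng.randint(36, 46),
    }
    for _ in range(200)
]

importances = {
    name: permutation_importance(loan_model, applicants, name, rng)
    for name in FEATURES
}
for name, score in sorted(importances.items(), key=lambda item: item[1], reverse=True):
    print(f"{name}: {score:.2f}")
```

Shuffling `shoe_size` never changes a decision, so it scores 0, while features the model actually relies on should score above 0. Full-featured versions of this technique usually measure the drop in accuracy against known correct answers rather than counting flipped predictions.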

Decision Trees: A Step-by-Step Recipe

Another XAI technique is called a “Decision Tree.” Imagine a flowchart where each step asks a yes-or-no question to reach a final decision. This is similar to how a decision tree visualizes an AI model’s thought process.

- Breaking Down the Process: A decision tree maps out the steps taken to reach a decision. Each branch of the tree represents a question asked about the data, and the answer determines which direction to go next. Simple models can be decision trees themselves; for a more complex model, a small tree can be trained to approximate its behavior.

- Simple and Clear Visualization: This decision tree is like a visual recipe, making it easy to understand the sequence of steps the AI follows to arrive at its conclusion.

For Instance: Imagine an AI spam filter. A decision tree might show the AI first checking for certain keywords, then looking at sender information, and finally deciding if it’s spam based on these steps.
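The spam-filter flowchart above can be sketched in a few lines of Python. The tree here is hand-written and hypothetical (the question names `contains_spam_keyword` and `sender_is_known` are invented for this example); in practice a tree would normally be learned from data. What matters is that the path through the tree doubles as the explanation.

```python
# Hypothetical hand-written tree; each inner node asks one yes/no question.
SPAM_TREE = {
    "question": "contains_spam_keyword",
    "yes": {
        "question": "sender_is_known",
        "yes": {"label": "not spam"},
        "no": {"label": "spam"},
    },
    "no": {"label": "not spam"},
}


def classify(node, email):
    """Walk the tree and return (label, list of questions answered on the way)."""
    path = []
    while "label" not in node:
        answer = "yes" if email[node["question"]] else "no"
        path.append(f"{node['question']}? {answer}")
        node = node[answer]
    return node["label"], path


email = {"contains_spam_keyword": True, "sender_is_known": False}
label, path = classify(SPAM_TREE, email)
print(label)
for step in path:
    print(" ", step)
```

Reading the printed steps from top to bottom gives the same story a person would tell: the email contained a spam keyword, the sender was unknown, so it was marked as spam.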

LIME for Explainable AI: Explaining Individual Decisions

XAI also has tools for explaining specific decisions made by an AI model. LIME (Local Interpretable Model-Agnostic Explanations) is like having a sous chef who creates a simplified version of your complex cake recipe to explain a specific cake you baked.

- Understanding Single Predictions: Sometimes, we might not just want to know how the AI works in general, but why it made a specific decision for one instance. LIME helps with this.

- Creating a Simpler Model: For a particular prediction, LIME makes many small changes to the input, watches how the AI’s prediction shifts, and fits a simpler, easier-to-understand model that mimics the original AI’s behavior around that specific case. This helps explain why the AI made that particular decision.

Imagine This: An AI recommends a movie you might like. LIME can explain why by creating a simpler model that considers your past viewing history and highlights the genres or actors that influenced the recommendation.
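Here is a rough, LIME-style sketch of the movie example in plain Python. It is not the actual LIME library, and several things are assumptions made for illustration: `recommender_score` is a stand-in black box, the three genre features are invented, every on/off combination is tried instead of random sampling (there are only eight), and closeness to the original viewer is weighted with a simple `exp(-distance)` kernel. The surrogate is a weighted linear model whose coefficients serve as the explanation.

```python
import itertools
import math

FEATURES = ["watched_action", "watched_comedy", "watched_documentary"]


def recommender_score(x):
    """Stand-in black box: score for recommending an action-comedy movie."""
    action, comedy, _documentary = x  # documentary history is ignored
    return 0.2 + 0.5 * action + 0.2 * comedy + 0.1 * action * comedy


def solve_linear_system(a, b):
    """Solve a @ coeffs = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    coeffs = [0.0] * n
    for r in reversed(range(n)):
        known = sum(m[r][c] * coeffs[c] for c in range(r + 1, n))
        coeffs[r] = (m[r][n] - known) / m[r][r]
    return coeffs


def explain_locally(black_box, instance):
    """Fit a weighted linear surrogate around one instance of 0/1 features."""
    rows, targets, weights = [], [], []
    for perturbed in itertools.product([0, 1], repeat=len(instance)):
        # Distance = how many features differ from the instance being explained.
        distance = sum(p != v for p, v in zip(perturbed, instance))
        rows.append([1.0, *perturbed])  # leading 1.0 is the intercept column
        targets.append(black_box(perturbed))
        weights.append(math.exp(-distance))  # closer samples count more
    size = len(instance) + 1
    # Weighted least squares via the normal equations (X^T W X) c = X^T W y.
    xtwx = [
        [sum(w * r[i] * r[j] for r, w in zip(rows, weights)) for j in range(size)]
        for i in range(size)
    ]
    xtwy = [
        sum(w * r[i] * y for r, y, w in zip(rows, targets, weights))
        for i in range(size)
    ]
    _intercept, *coefficients = solve_linear_system(xtwx, xtwy)
    return dict(zip(FEATURES, coefficients))


explanation = explain_locally(recommender_score, instance=(1, 1, 1))
for name, weight in sorted(explanation.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: {weight:+.3f}")
```

For this viewer, the surrogate gives the largest weight to action history, a smaller weight to comedy history, and essentially zero to documentaries, which the stand-in model never looks at. That ranking is the “why” behind this one recommendation.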

By using these techniques and more, XAI helps us understand the inner workings of AI models and builds trust in their decision-making processes. In the next section, we’ll explore the benefits and limitations of XAI.

Benefits of Explainable AI: Shining a Light on the Advantages

We’ve explored how XAI works to peek inside the magic box of AI models. But why is XAI so important? Here are some key benefits of Explainable AI:

Building Trust in AI:

Imagine using a self-driving car. If you don’t understand how it makes decisions, would you feel safe? XAI helps by:

- Transparency: By explaining how AI models arrive at their decisions, XAI fosters trust in their capabilities. We can see the data they use, the steps they take, and the reasoning behind their outputs.

- Understanding the “Why”: Knowing why an AI model made a decision is crucial. XAI helps us understand the logic behind its choices, making them more trustworthy and easier to accept.

Improved Fairness:

Remember the weatherman who always predicted rain for picnics? Bias can creep into AI models too. XAI helps by:

- Identifying Bias: XAI tools can detect hidden biases in AI models. For example, they might reveal if a loan approval system is unintentionally biased against a certain demographic.

- Creating Fairer AI: By identifying biases, we can adjust AI models to make fairer and more equitable decisions for everyone.
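A very simple first check for this kind of bias is to compare how often each group gets approved. The sketch below uses a handful of invented loan decisions (the group names and outcomes are hypothetical) and is only a starting point: a gap between groups is a signal to investigate further, not proof of unfairness on its own.

```python
from collections import defaultdict

# Hypothetical (group, was_approved) records, invented for this illustration.
decisions = [
    ("group_a", True), ("group_a", True), ("group_a", True), ("group_a", False),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]


def approval_rates(records):
    """Return the share of approved applications for each group."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in records:
        totals[group] += 1
        approved[group] += int(was_approved)
    return {group: approved[group] / totals[group] for group in totals}


rates = approval_rates(decisions)
gap = max(rates.values()) - min(rates.values())
print(rates, gap)
```

Once a gap like this shows up, techniques such as feature importance can help trace which inputs are driving the difference.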

Better Model Debugging:

Imagine baking a cake that always comes out lopsided. You’d want to know which step is going wrong. XAI does the same for AI models:

- Finding Errors: XAI can help identify weaknesses or errors within an AI model. By explaining how the model arrives at its decision, XAI can pinpoint where things might be going wrong.

- Improving AI Performance: Once we understand where the model struggles, we can fix the errors and make it more accurate and reliable.

Increased Human-AI Collaboration:

Imagine a chef and their sous chef working together. XAI fosters a similar collaboration between humans and AI:

- Understanding the “How”: By explaining how AI models work, XAI allows humans to understand their strengths and weaknesses. This knowledge helps us leverage AI’s capabilities while making informed decisions ourselves.

- Better Teamwork: With XAI, humans and AI can work together more effectively. Humans can guide AI models and use their explanations to make better overall decisions.

XAI is a powerful tool that unlocks the potential of AI by building trust, improving fairness, and fostering better human-AI collaboration. In the next section, we’ll explore some of the limitations of XAI to get a well-rounded picture.

Limitations of Explainable AI: The Magic Box Isn’t Fully Transparent Yet

While XAI offers incredible benefits, it’s important to remember that it’s still a developing field. Below are some limitations of XAI to consider:

Not All Magic Boxes Can Be Explained:

Imagine a super complex cake recipe with exotic ingredients and intricate steps. Similarly, some AI models are incredibly complex, making them difficult to explain with current XAI techniques.

- Challenges with Complexity: Highly complex AI models might be challenging for XAI to fully explain. The inner workings of these models can be intricate and not easily broken down into clear steps.

Understanding the Explanation:

Imagine someone explaining a complex recipe using scientific jargon. Just because something is explained doesn’t mean everyone can understand it! XAI faces the same challenge:

- Clarity for Non-Experts: XAI explanations might involve technical terms or concepts that are difficult for people without a technical background to grasp. This can limit the usefulness of XAI for everyday users.

The Quest for Perfect Explanations:

There’s no one-size-fits-all explanation. Just like some people prefer simple cake recipes while others enjoy the challenge of complex ones, the ideal XAI explanation varies from person to person.

- Tailoring Explanations: Developing explanations that are both accurate and understandable for different audiences can be challenging. XAI is constantly evolving to address these limitations, but it’s important to be aware that perfect transparency for every AI model isn’t always achievable.

However, even with these limitations, XAI is a powerful tool that is constantly improving. In the next section, we’ll explore the exciting future of XAI!

The Future of XAI: Unveiling More of the Magic

XAI is a field brimming with potential, and researchers are constantly working to overcome its limitations and make explanations even better. Here’s a glimpse into the exciting future of XAI:

More Robust Explanations: Researchers are developing new XAI techniques that aim to explain even very complex AI models. Imagine having a sous chef who can explain not just a simple cake recipe, but even the most intricate pastries!

Focus on Accessibility: The future of XAI is all about making explanations clear and understandable for everyone. This includes developing visualizations, using simpler language, and tailoring explanations to different audiences.

Human-Centered XAI: The ultimate goal is to create XAI that is not just informative, but also helpful and actionable for humans. Imagine explanations that don’t just tell you what the AI did, but also suggest ways to improve it or tailor its decisions for specific situations.

As XAI continues to evolve, we can expect AI models to become more transparent, trustworthy, and easier to collaborate with. This will open doors to even wider adoption of AI in various fields, with benefits for everyone.

Conclusion: Demystifying the Magic – The Power of XAI

AI is becoming an increasingly important part of our lives, but sometimes it can feel like a black box. XAI (Explainable Artificial Intelligence) is here to change that! By offering explanations for AI decisions, XAI helps us understand how AI works, building trust and making AI fairer and more reliable.

Here’s a quick recap of what we learned about XAI:

- XAI lifts the lid on AI models: It sheds light on the decision-making process of AI, helping us understand why AI makes the choices it does.

- XAI uses various techniques: Feature Importance, Decision Trees, and LIME are just a few tools XAI uses to explain AI’s inner workings.

- XAI benefits everyone: It fosters trust in AI, helps identify and remove biases, and allows us to improve AI models for better performance.

- XAI is still evolving: While there are limitations, like explaining complex models or ensuring explanations are universally clear, XAI is constantly improving.

The future of XAI is bright! As XAI continues to develop, AI will become more transparent, trustworthy, and collaborative. This opens doors to exciting advancements in various fields, benefiting everyone.

Want to learn more? The world of XAI is vast and fascinating. If you’re curious to explore further, there are many resources available online and in libraries.

What are your thoughts on XAI? Do you have any questions about how AI works or how XAI can be used? Let me know in the comments below! I’m always happy to discuss and learn more about this exciting field.