Introduction: The Rise of Emotion AI

In a world increasingly run by artificial intelligence, a silent revolution is reshaping how machines interact with people—not through logic or speed, but through empathy. This revolution is called Emotion AI.

Emotion AI, also referred to as affective AI or emotional artificial intelligence, is the subset of AI that enables machines to recognize, interpret, simulate, and even respond to human emotions. But more fascinating is the rise of Synthetic Empathy—a concept where machines can mimic emotional understanding so convincingly that they appear to “care.” While this empathy is not genuine in the human sense, its effects can be profound.

This fusion of AI with emotional intelligence is creating tools that don’t just process data: they interpret human behavior in context. Imagine an AI assistant that can tell when you’re overwhelmed and responds with calming suggestions. Or a vehicle that detects driver fatigue from facial cues such as eye closure and adjusts accordingly, for example by issuing an alert. These aren’t futuristic dreams; they’re real-world applications of Emotion AI already in use today.

Yet, as this technology advances, it brings complex questions:

Can Emotion AI truly replicate empathy, or is it just Synthetic Empathy designed to manipulate user behavior? Will people trust machines that appear to “feel”? And how might this change how we relate not only to technology—but to each other?

Emotion AI isn’t just advancing technology—it’s reshaping how society connects, communicates, and understands feelings in the digital age. As we blend code with emotion, we begin to cross the line between analytical intelligence and emotional nuance, reshaping industries from healthcare to education, customer service, transportation, and beyond.

What is Emotion AI?

At its foundation, Emotion AI is an emerging field of artificial intelligence that interprets and reacts to human emotions by analyzing behavioral and physiological data. It’s not just about understanding what people say—it’s about interpreting how they say it, and what lies beneath. This emotional decoding is made possible by a field called Affective Computing.

Affective Computing, pioneered by MIT Media Lab’s Rosalind Picard in the 1990s, aims to bridge the emotional gap between humans and machines. It fuses machine learning, psychology, neuroscience, and computer vision to enable machines to “feel with” humans—not emotionally, but statistically and contextually.

Here’s how Emotion AI actually works:

🔍 1. Facial Expression Analysis

Emotion AI algorithms use computer vision to scan facial micro-expressions, those fleeting, involuntary muscle movements that often reveal genuine emotion. Frameworks like the Facial Action Coding System (FACS) break expressions down into measurable units of muscle movement, helping AI estimate emotional states such as joy, anger, fear, surprise, or contempt, though accuracy varies with context and culture.

🔊 2. Voice and Speech Pattern Recognition

Beyond words, our voices carry emotional metadata. Emotion AI systems analyze pitch, intonation, speaking rate, and volume to detect emotions like frustration, happiness, or nervousness. Think of virtual call assistants that identify an upset customer before a complaint is even voiced.

🧍 3. Body Language and Gesture Interpretation

Gestures and posture offer contextual cues that Emotion AI interprets to understand engagement, stress levels, or mood. For example, a slouched position may indicate sadness or fatigue, while animated gestures could signal enthusiasm.

📊 4. Multimodal Sensor Fusion

Advanced systems integrate data from wearables or IoT devices, such as heart rate variability, skin conductance (electrodermal activity, or EDA), or pupil dilation, enabling deeper emotional insight. This allows Emotion AI to assess not just overt behavior but subtle physiological signals as well.
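One simple way to combine modalities is late fusion: each modality’s model produces its own probability for each emotion, and the system takes a weighted average. The sketch below is only an illustration. The label set, the weights, the scores, and the `fuse` function are all assumptions made for this example, not the method of any particular product.

```python
from typing import Dict

EMOTIONS = ("calm", "stressed", "happy")  # hypothetical label set


def fuse(scores: Dict[str, Dict[str, float]], weights: Dict[str, float]) -> Dict[str, float]:
    """Weighted average of per-modality emotion probabilities (late fusion)."""
    total_weight = sum(weights[m] for m in scores)
    fused = {}
    for emotion in EMOTIONS:
        weighted = sum(weights[m] * scores[m].get(emotion, 0.0) for m in scores)
        fused[emotion] = weighted / total_weight
    return fused


# Made-up per-modality outputs, for illustration only.
scores = {
    "face": {"calm": 0.6, "stressed": 0.3, "happy": 0.1},
    "voice": {"calm": 0.2, "stressed": 0.7, "happy": 0.1},
    "wearable": {"calm": 0.1, "stressed": 0.8, "happy": 0.1},
}
weights = {"face": 0.5, "voice": 0.3, "wearable": 0.2}

fused = fuse(scores, weights)
top_emotion = max(fused, key=fused.get)
print(top_emotion, round(fused[top_emotion], 2))
```

Here the face alone looks calm, but the voice and wearable signals pull the fused estimate toward stress, which is the kind of case where combining signals is meant to help.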

These technologies work together to give machines an almost instinctual understanding of how a user is feeling. But it’s crucial to understand that this is not empathy as humans know it. Instead, it’s a highly refined simulation—a form of Synthetic Empathy—that responds to emotions in a way that is statistically probable, but not emotionally experienced.

Emotion AI systems don’t have feelings. They have models trained on massive datasets of emotional behavior. And while they can predict and respond to emotions with uncanny precision, their “understanding” is rooted entirely in data, not consciousness.

Yet despite this, the benefits are enormous—from mental health diagnostics and emotionally adaptive learning platforms to empathetic retail assistants and emotionally aware autonomous vehicles. The potential for Emotion AI and Affective Computing to humanize machines is transforming both how we use technology and how we feel about it.

The Science of Synthetic Empathy

At the heart of Emotion AI lies one of its most fascinating yet controversial capabilities: Synthetic Empathy. While human empathy arises from conscious emotional experience, synthetic empathy is a programmed imitation—machines responding with emotion-like behavior without truly feeling it.

Synthetic Empathy is not a product of feeling, but of programming. AI systems are trained on vast emotional datasets—ranging from facial expressions and voice tones to physiological cues—to recognize patterns that indicate emotional states. Once these patterns are detected, the system triggers appropriate, pre-programmed responses that appear empathetic. For instance, a chatbot might detect frustration in a user’s tone and respond with a calming message or offer quicker solutions.

This simulation of emotional resonance is at the core of many Emotion AI systems. Consider a mental health support bot that says, “I’m sorry you’re feeling this way. Would you like to talk about it?”—not because it feels compassion, but because data analysis suggests this response improves user engagement and emotional regulation.

Programming synthetic empathy involves a careful blend of:

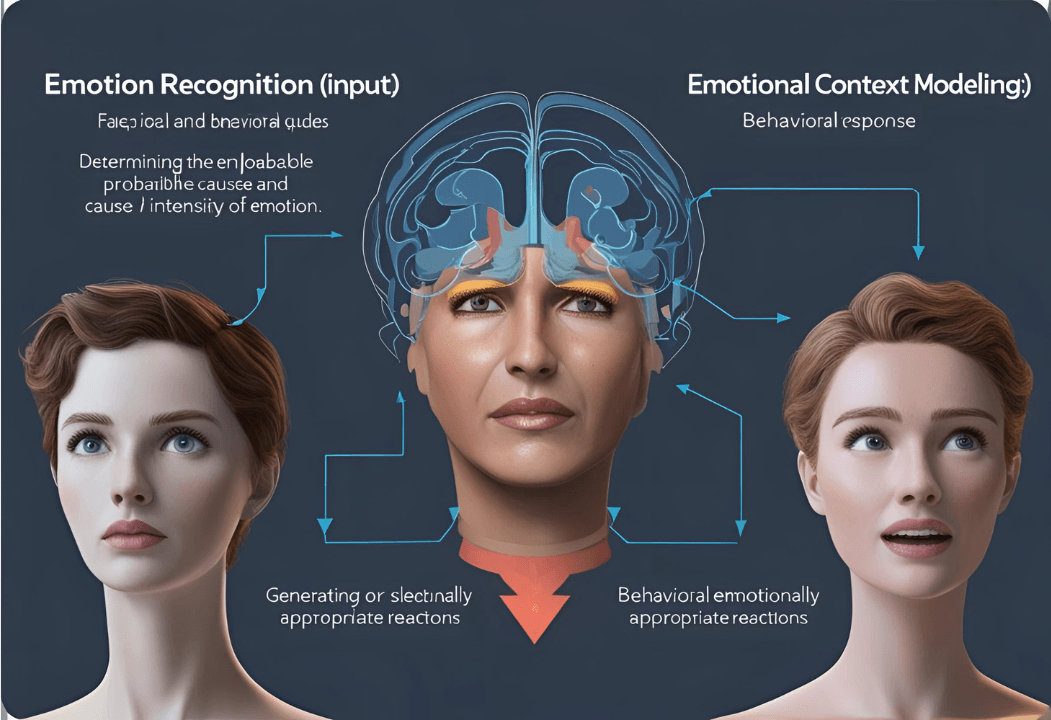

- Emotion recognition (input): Using AI models to interpret facial, vocal, or behavioral cues.

- Emotional context modeling: Determining the probable cause and intensity of emotion.

- Behavioral output (response): Generating or selecting emotionally appropriate reactions (text, tone, body movement).
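The three stages above can be sketched as a tiny pipeline. This is a toy illustration under stated assumptions: the keyword matcher stands in for a trained recognition model, and the function names, cue words, thresholds, and reply texts are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class EmotionEstimate:
    label: str        # e.g. "frustration"
    intensity: float  # 0.0 (none) to 1.0 (strong)


def recognize(message: str) -> EmotionEstimate:
    """Stage 1 (input): toy keyword matcher standing in for a trained model."""
    cues = {
        "frustration": ("annoyed", "useless", "again"),
        "sadness": ("sad", "lonely", "tired"),
    }
    text = message.lower()
    for label, words in cues.items():
        hits = sum(word in text for word in words)
        if hits:
            return EmotionEstimate(label, min(1.0, 0.4 * hits))
    return EmotionEstimate("neutral", 0.0)


def add_context(estimate: EmotionEstimate, failed_attempts: int) -> EmotionEstimate:
    """Stage 2 (context): repeated failures make frustration more intense."""
    boost = 0.1 * failed_attempts if estimate.label == "frustration" else 0.0
    return EmotionEstimate(estimate.label, min(1.0, estimate.intensity + boost))


def respond(estimate: EmotionEstimate) -> str:
    """Stage 3 (output): select a pre-written, emotionally appropriate reply."""
    if estimate.label == "frustration" and estimate.intensity >= 0.7:
        return "I'm sorry this keeps failing. Let me connect you with a person."
    if estimate.label == "frustration":
        return "Sorry about the trouble. Let's try a quicker fix."
    if estimate.label == "sadness":
        return "I'm sorry you're feeling this way. Would you like to talk about it?"
    return "How can I help?"


reply = respond(add_context(recognize("This is useless, it failed again"), failed_attempts=1))
print(reply)
```

Nothing in this pipeline feels anything. It detects a pattern, adjusts a number, and picks a sentence, which is the point the article makes about synthetic empathy.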

However, this raises critical questions:

- Is synthetic empathy ethical when users can’t tell the difference between human and machine compassion?

- Can it create misleading emotional bonds or dependency—especially in vulnerable users like children, the elderly, or patients with mental health conditions?

There are also significant technical challenges:

- Cultural variability: Emotional expressions differ across societies and languages.

- Contextual depth: True empathy requires understanding the why behind emotions, which machines still struggle to grasp.

- Moral judgment: Machines lack the intuitive ethics needed to decide when, how, and whether to respond empathetically.

Despite these concerns, Synthetic Empathy is proving useful in areas like healthcare, customer service, and education—where emotionally attuned responses can make technology feel more human, helpful, and trustworthy. But we must remember: Emotion AI is a mirror, not a heart. It reflects our emotions back to us, but never truly feels them.

Real-World Applications of Emotion AI

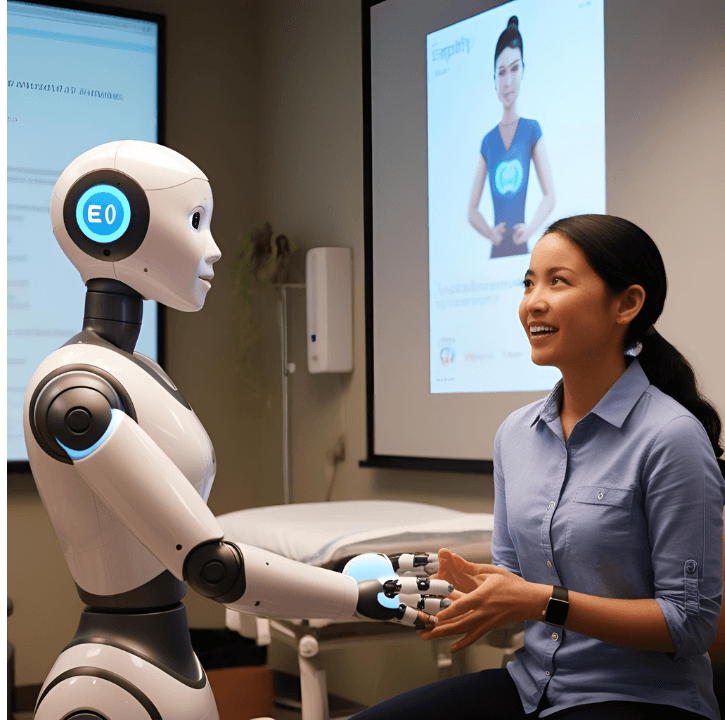

As Emotion AI matures, we’re witnessing its transformation from lab experiments to real-world utilities. Today, robots with emotions—or at least the ability to simulate them—are quietly becoming part of our everyday lives. These machines, often called Social Robots, are designed to interact with humans in emotionally intelligent ways, adapting their behavior based on emotional cues.

Here are some of the most impactful applications of robots with emotions powered by Emotion AI:

🤖 1. Customer Service: Emotionally Intelligent Bots

In retail and call centers, Emotion AI enhances customer interactions by helping bots detect frustration, impatience, or confusion in real time. Companies use this insight to reroute calls, escalate issues, or modify the tone and pacing of speech. For example, conversational AI platforms such as Amelia or LivePerson can recognize when a user is getting upset and shift their language to a more soothing, solution-oriented tone.

🏥 2. Healthcare: Companion Robots in Elder and Palliative Care

Social robots like ElliQ and Paro are being used in eldercare and therapy, offering companionship and emotional engagement. Paro, a robotic baby seal, responds to touch and sound, providing calming, emotionally adaptive interactions that have been shown to reduce anxiety and improve mood in dementia patients. These robots with emotions don’t truly care, but their responses can mimic care closely enough to improve quality of life.

🧠 3. Mental Health Support and Therapy

AI-driven mental health apps like Woebot and Wysa utilize Emotion AI to offer real-time cognitive behavioral therapy (CBT) support. These bots track emotional trends, detect early signs of distress, and respond with empathy-enhanced dialogue. Some even escalate to human therapists when they detect high-risk behavior—showing how synthetic empathy can work alongside real empathy to scale support.

🎓 4. Education: Emotionally Responsive Learning Aids

Social robots like NAO and Pepper are used in classrooms to tailor teaching methods based on student emotions. They can detect boredom, confusion, or enthusiasm, and adapt their responses—such as repeating instructions more slowly or offering encouragement. This emotional adaptability helps improve student engagement and learning outcomes, especially in special education.

🔄 The Rise of Social Robots in Daily Life

From retail kiosks to hospital companions, Social Robots are becoming emotional co-inhabitants in our world. These machines, powered by Emotion AI, are designed to bridge the emotional gap between humans and technology. While they don’t feel, their ability to respond as if they do makes them more relatable, trusted, and effective.

The Technology Behind Synthetic Emotions

At the core of Emotion AI lies an interdisciplinary powerhouse known as Affective Computing—a field that equips machines with the ability to detect, interpret, and simulate human emotions. Coined by MIT researcher Rosalind Picard in the 1990s, Affective Computing merges psychology, computer science, and neuroscience to give machines a sort of emotional lens for understanding us.

To create what appears to be synthetic emotions, machines rely on a complex network of sensory inputs and AI algorithms. Let’s unpack the cutting-edge technologies that are making emotional intelligence possible for machines:

👁️ Facial Expression Analysis: Decoding Micro-Expressions

Emotion AI uses computer vision systems to scan for subtle muscle movements and facial micro-expressions. These systems rely on deep learning models trained on diverse datasets of human faces. One widely used framework is the Facial Action Coding System (FACS), which codes facial muscle activity as numbered action units. Combinations of action units are then mapped to emotion signatures, such as raised eyebrows indicating surprise or a tightened jaw suggesting anger.

This data is then interpreted by Emotion AI to classify emotional states in real time. Whether it’s a robot receptionist recognizing your frustration or a digital tutor picking up on boredom, facial analytics are key to emotionally intelligent interactions.
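To illustrate how FACS-style coding can feed a classifier, here is a minimal sketch. FACS describes a face as a set of numbered action units (AUs), and the sketch matches the active AUs against a few commonly cited prototype combinations. The `PROTOTYPES` table is a simplification and the `match_emotion` function is hypothetical; real systems learn these mappings from data rather than looking them up.

```python
# Commonly cited prototype AU combinations (simplified for illustration).
PROTOTYPES = {
    "happiness": {6, 12},       # cheek raiser + lip corner puller
    "sadness": {1, 4, 15},      # inner brow raiser + brow lowerer + lip corner depressor
    "surprise": {1, 2, 5, 26},  # brow raisers + upper lid raiser + jaw drop
    "anger": {4, 5, 7, 23},     # brow lowerer + upper lid raiser + lid tightener + lip tightener
}


def match_emotion(active_aus):
    """Return (emotion, score); score is the Jaccard overlap with the best prototype."""
    best, best_score = "neutral", 0.0
    for emotion, prototype in PROTOTYPES.items():
        score = len(active_aus & prototype) / len(active_aus | prototype)
        if score > best_score:
            best, best_score = emotion, score
    return best, best_score


print(match_emotion({6, 12}))            # a clean match to one prototype
print(match_emotion({1, 2, 5, 26, 12}))  # a mixed signal scores lower
print(match_emotion(set()))              # no active AUs falls back to neutral
```

The mixed case matters: a face showing surprise plus a hint of a smile no longer matches any prototype perfectly, which is one reason real-world expression analysis is harder than a lookup table suggests.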

🔊 Voice Tone Analysis: Listening Beyond Words

Our voices carry far more than words—they’re emotional fingerprints. Emotion AI taps into this by examining how we speak: shifts in pitch, speed, volume, and tone can reveal if someone is stressed, relaxed, joyful, or irritated. By combining natural language processing (NLP) with analysis of these vocal cues, machines are learning to ‘listen between the lines’ and decode what we’re really feeling.

In call centers, for example, emotion-aware AI tools alert human agents when a caller’s tone suggests frustration, allowing them to intervene with empathy-enhanced responses. This technology allows synthetic systems to not just hear—but to emotionally “listen.”
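To make the idea of vocal cues concrete, here is a minimal sketch that extracts two of them, loudness and pitch, from a synthetic tone. The function names, the test tone, and the zero-crossing pitch estimate are illustrative assumptions. Production systems typically work on real recordings and use more robust pitch trackers.

```python
import math


def rms_volume(samples):
    """Root-mean-square amplitude, a simple proxy for loudness."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def zero_crossing_pitch(samples, sample_rate):
    """Crude pitch estimate: a pure tone crosses zero twice per cycle."""
    crossings = sum(1 for a, b in zip(samples, samples[1:]) if a * b < 0)
    duration_s = len(samples) / sample_rate
    return crossings / (2 * duration_s)


# Synthetic half-second "voice": a 220 Hz tone (assumed values for illustration).
SAMPLE_RATE = 8000
tone = [0.5 * math.sin(2 * math.pi * 220 * i / SAMPLE_RATE + 0.1) for i in range(4000)]

volume = rms_volume(tone)
pitch_hz = zero_crossing_pitch(tone, SAMPLE_RATE)
print(f"volume={volume:.3f}, pitch={pitch_hz:.0f} Hz")
```

An emotion model would not act on a single reading like this. It would look at how such features shift over time, for instance rising pitch and volume across a call.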

❤️🔥 Biometric Data: Reading the Body’s Emotional Signals

Emotion isn’t just expressed—it’s felt physiologically. Sensors embedded in wearables or devices measure biometric signals such as:

- Heart rate variability (HRV): Fluctuations tied to stress or calm.

- Skin conductance (EDA/GSR): Measures sweat gland activity linked to arousal.

- Pupil dilation: A cue of cognitive load or emotional intensity.

These biometric inputs feed into Affective Computing models, providing a second layer of emotional analysis. When integrated, they allow machines to react in real-time—calming users, adjusting environments, or escalating care in health settings.
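As a concrete example of a biometric feature, the sketch below computes RMSSD, a standard heart rate variability measure: the root mean square of the differences between successive heartbeat intervals. The interval values are made up for illustration, and the link between lower HRV and stress is a general tendency rather than a rule for any individual.

```python
import math


def rmssd(rr_intervals_ms):
    """Root mean square of successive differences between heartbeat intervals (ms)."""
    diffs = [b - a for a, b in zip(rr_intervals_ms, rr_intervals_ms[1:])]
    if not diffs:
        raise ValueError("need at least two intervals")
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


# Made-up beat-to-beat intervals in milliseconds, for illustration only.
relaxed = [800, 850, 790, 860, 800]   # slower, more variable heartbeat
stressed = [600, 605, 598, 603, 600]  # faster, more uniform heartbeat

print(f"relaxed RMSSD:  {rmssd(relaxed):.1f} ms")
print(f"stressed RMSSD: {rmssd(stressed):.1f} ms")
```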

⚙️ Technical Challenges in Simulating Emotions

Creating the illusion of synthetic emotions is an extraordinary technical feat, but it’s not without challenges:

- Context ambiguity: Emotions are deeply contextual. A smile might mean joy, sarcasm, or discomfort—AI often struggles to differentiate.

- Cultural diversity: Emotional expression varies globally. Affective models must be culturally adaptive to avoid bias or misinterpretation.

- Multimodal fusion complexity: Combining facial, vocal, and physiological signals in real time requires advanced sensor fusion and significant computational power.

Despite these hurdles, Affective Computing continues to evolve—bringing us closer to a future where Emotion AI can mirror emotional complexity more convincingly than ever before.

Synthetic Empathy vs. Authentic Emotion: The Ethical Debate

As robots with emotions become more prevalent, an urgent ethical debate unfolds: Is it right to program machines to simulate empathy, especially when users may not distinguish between genuine emotional understanding and Synthetic Empathy?

On one hand, Synthetic Empathy enables Emotion AI to respond supportively in mental health apps, elder care bots, or emotionally aware customer service agents. But on the other, it raises questions about emotional manipulation—a scenario where machines are designed to elicit trust, compliance, or emotional attachment without truly reciprocating it.

🤖 Is Synthetic Empathy Deceptive?

Machines don’t feel. They calculate. When a healthcare robot says “I understand your pain,” it’s not because it feels compassion—it’s because its algorithm identified sadness and matched it with a supportive phrase. The concern is whether users, especially vulnerable individuals, will interpret this as genuine emotion.

This leads to a larger ethical dilemma:

Are we emotionally exploiting users when they form bonds with emotionally reactive machines?

🧠 Can Robots with Emotions Ever Be Truly Empathetic?

Philosophers and cognitive scientists argue that true empathy requires consciousness, experience, and intent—traits machines do not possess. Robots can simulate emotional behavior with incredible accuracy, but without subjective awareness, it’s a façade.

Still, does the perception of empathy matter more than the reality? If users feel comforted, does it matter whether it’s real?

🌍 Societal Implications: Emotional AI in Human Relationships

- Dependency risk: Overreliance on emotionally responsive machines could reduce human-to-human interaction, especially in children or the elderly.

- Redefining relationships: Social robots may reshape how we perceive emotional intimacy and care, leading to new kinds of companionship.

- Privacy concerns: Emotion AI thrives on deeply personal data—but at what point does insight become intrusion?

Ultimately, Synthetic Empathy walks a fine line between empowering connection and simulating care. As we bring robots with emotions into homes, hospitals, and schools, we must ask: Do we want machines that feel like us—or machines that just feel enough to be useful?

Applications and Impacts on Society

As Emotion AI advances, its integration into everyday life isn’t just a technological shift—it’s a cultural transformation. With synthetic empathy, machines are starting to mimic human emotional behaviors, raising critical questions about the future of relationships, trust, and mental well-being in a tech-saturated society.

🤖 Impact on Human Relationships: Companionship or Substitution?

One of the most profound implications of robots with synthetic empathy is the potential for humans to form emotional bonds with them. Whether it’s a child bonding with an emotionally responsive toy or an elderly person confiding in a caregiving robot, these connections are becoming increasingly common.

While companionship robots like ElliQ or Paro offer comfort and conversation, critics warn of the “substitution effect”—where people may begin replacing human interactions with emotionally reactive machines. Might this undermine the very foundation of human connection?

Emotional robots might fill emotional voids—but will they deepen our loneliness in the long run?

🧠 Impact on Mental Health: A Double-Edged Sword

On one hand, Emotion AI tools provide valuable emotional support, particularly for individuals with anxiety, PTSD, autism, or those in isolation. They can monitor emotional states, encourage mental well-being, and offer nonjudgmental interaction.

On the other hand, overdependence on AI for emotional validation may create emotional detachment from real-world relationships. If users lean too heavily on robotic empathy, it may hinder genuine human growth and resilience.

Moreover, the idea of being “heard” or “understood” by a machine could lead to false emotional intimacy, which raises ethical red flags—especially in children and vulnerable populations.

🏥 Changes to Industries: Redefining Service and Care

Across sectors, Emotion AI is revolutionizing the way organizations interact with people:

- Customer Service: Emotion-aware bots and virtual agents can de-escalate angry customers, personalize responses, and mirror human empathy—enhancing satisfaction and reducing churn.

- Healthcare: Therapeutic bots use synthetic empathy to monitor patients, detect emotional changes, and even help prevent depression or anxiety episodes.

- Education: Emotionally intelligent tutors adjust teaching strategies in real time, depending on whether a student is confused, bored, or engaged—leading to improved learning outcomes.

These developments are reshaping workforce roles, with emotional intelligence becoming a key interface between humans and machines.

⚖️ Balancing Benefits and Risks

While the societal benefits of Emotion AI are immense—ranging from eldercare to emotional resilience support—its risks must be acknowledged:

- Privacy breaches from emotional data collection

- Manipulation via artificially induced trust or emotional cues

- Dependency that alters real-world emotional coping

Synthetic empathy is not just a feature—it’s a force that could redefine the human experience.

The Future of Emotion AI: What’s Next?

Looking ahead, Emotion AI is poised to become one of the most transformative technologies of the 21st century. As we cross the frontier from simulation to more nuanced emotional interaction, the future of robots with emotions holds immense potential—and unprecedented challenges.

🔮 Next-Gen Emotion AI: Beyond Basic Feelings

Today’s Emotion AI can detect general emotions like happiness, sadness, or anger. Tomorrow’s systems will aim to decode more complex emotional states, such as empathy, grief, or nostalgia, with finer granularity. This evolution will be powered by breakthroughs in:

- Neural-symbolic AI: Combining logic-based and data-driven learning for deeper emotional understanding.

- Multimodal sensing: Using simultaneous inputs from facial expressions, voice, and bio-signals for richer emotional context.

- Longitudinal learning: Emotion AI that evolves with a user over time, understanding personality and emotional memory.

🧑‍⚕️ A Future of Emotional Integration: From Coworkers to Caregivers

Imagine a world where emotionally aware robots are not just tools but companions—coworkers who sense burnout, therapists who detect emotional decline early, or caregivers who recognize distress before it surfaces.

- In the workplace: AI could foster emotionally intelligent teams by identifying stress levels or morale in real time.

- In caregiving: Robots may become integral to home care, especially in aging societies, offering both physical assistance and emotional presence.

- In therapy: Emotion AI could support psychologists by tracking emotional trends and suggesting interventions.

This transformation will redefine roles, blur boundaries between human and machine interaction, and challenge our understanding of emotional authenticity.

🌐 Shifting Societal Structures and Ethical Forecasts

As robots with emotions enter mainstream life, the ripple effects will be felt across societal domains:

- Labor markets: Emotion AI may automate jobs requiring emotional labor, such as customer support or elder care—prompting retraining and ethical job design.

- Mental health: Machines may become the first point of emotional contact for millions. This raises both accessibility benefits and philosophical dilemmas.

- Family and intimacy: As robots become emotionally competent companions, our ideas of intimacy, trust, and connection may shift.

Ethical and Privacy Concerns: Where Do We Draw the Line?

As Emotion AI continues to evolve, so do the complex ethical and privacy challenges it brings. While synthetic empathy allows robots to interpret and respond to our emotional cues, it also raises a pressing question: what happens to our emotional data?

🔒 Privacy and Emotional Data: The New Frontier of Surveillance

Unlike traditional data, emotional data is deeply personal—revealing not just what we do but how we feel. Robots powered by Emotion AI use sensors, facial recognition, and biometric feedback to detect our moods, stress levels, and even vulnerabilities. This data, when collected and stored, creates an intimate emotional profile of the user.

The critical question becomes:

Who owns your feelings once they’re digitized?

Without strict regulations, this data could be sold to third parties, used to manipulate users, or even hacked—making emotional privacy a new battleground in digital ethics.

🧾 Consent: Are We Truly Aware?

In many current applications, users are unaware of the extent to which Emotion AI is reading them. A robot assistant may seem harmless, but behind the scenes, it may be analyzing micro-expressions, vocal tone, and stress biomarkers.

Ethical design must prioritize informed consent—clearly communicating:

- What emotional data is collected

- How it’s stored or shared

- Whether users can opt out

This becomes especially urgent in settings involving children, the elderly, or people with mental health conditions, groups that may be unable to fully consent or to understand what the data collection implies.
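The disclosure points above can also be enforced in software, not just stated in a policy. Here is a minimal sketch of a consent gate that filters which signals a system may read. The `ConsentRecord` fields and the `permitted_signals` function are hypothetical names chosen for this example, not an existing standard or API.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class ConsentRecord:
    user_id: str
    allowed_signals: Set[str] = field(default_factory=set)  # what may be collected
    allow_sharing: bool = False                              # whether data may leave the device
    opted_out: bool = False                                  # user has withdrawn consent


def permitted_signals(consent: ConsentRecord, requested: Set[str]) -> Set[str]:
    """Return only the signals the user has agreed to; nothing after an opt-out."""
    if consent.opted_out:
        return set()
    return requested & consent.allowed_signals


consent = ConsentRecord(user_id="demo-user", allowed_signals={"voice"})
print(permitted_signals(consent, {"voice", "face", "heart_rate"}))

consent.opted_out = True
print(permitted_signals(consent, {"voice", "face", "heart_rate"}))
```

The design choice worth noting is the default: a new record allows nothing, so collection has to be switched on by the user rather than switched off.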

🧠 Emotional Manipulation: When Empathy Becomes Exploitation

The same technologies that allow robots to comfort us can also be used to manipulate us. Emotionally intelligent robots might nudge consumer behavior, trigger specific moods for advertising, or even build false emotional bonds to extract sensitive information.

In high-stakes areas like elderly care, mental health, or education, this is deeply concerning. Vulnerable individuals could be influenced by machines simulating empathy, trusting them as if they were human.

Can a robot that mirrors your grief be used to sell you something during a moment of emotional weakness?

⚖️ Legal Implications and the Need for Regulation

As synthetic empathy becomes more lifelike, we may need entirely new legal frameworks:

- Should emotional data be protected under the same rights as medical data?

- Can robots be held accountable for emotionally manipulative behavior?

- Should there be laws about how far a robot can mimic human feelings?

Governments and technologists must work together to establish ethical standards and regulatory boundaries, ensuring that Emotion AI enhances human life—without eroding autonomy, privacy, or dignity.

Conclusion: Teaching Robots to Feel—A New Frontier

The journey through Emotion AI and synthetic empathy paints a future both awe-inspiring and unsettling. As robots learn to read, simulate, and even respond to human emotions, they blur the line between authentic connection and calculated response.

🤖 From Simulation to Relationship

Robots today can:

- Smile when we’re happy

- Offer comfort when we’re sad

- Adjust tone, posture, or pace based on our emotional cues

But what they feel—if anything—is a simulation. At best, they offer emotional performance, not emotional experience. And yet, this simulation is becoming increasingly effective at eliciting genuine emotional responses from us.

❓ Can Robots Truly Feel? Or Just Fool Us?

At the core of the debate is one pressing question:

Are we teaching machines to feel—or to fake it so well that it doesn’t matter?

Some argue that if robots provide emotional value—comfort, understanding, or even love—the simulation is good enough. Others fear that relying on simulated emotions may devalue real human empathy, creating shallow emotional ecosystems.

🚀 Glimpse into the Future

In the next decade, we may see:

- Emotionally intelligent assistants helping us navigate grief or loneliness

- Synthetic empathy guiding conflict resolution or therapy

- Emotion AI in homes, hospitals, classrooms, and workplaces, reshaping how we interact with machines and with each other

We’re not just building smarter machines—we’re shaping the emotional architecture of our future.
