Introduction: What Is Embodied AI and Why It Matters Today

Embodied AI is a branch of artificial intelligence that couples reasoning with physical action, allowing machines not only to process data but also to move, touch, and interact with the real world. Unlike traditional AI, which runs on servers and behind screens, embodied artificial intelligence lives inside physical agents such as robots, drones, or smart assistants equipped with sensors and actuators.

This emerging field is quickly gaining attention across industries such as robotics, autonomous vehicles, healthcare, and home automation. Why? Because acting intelligently in the real world requires more than computation; it demands physical awareness. Embodied AI systems learn by doing, sensing, and adapting, much as humans and animals do.

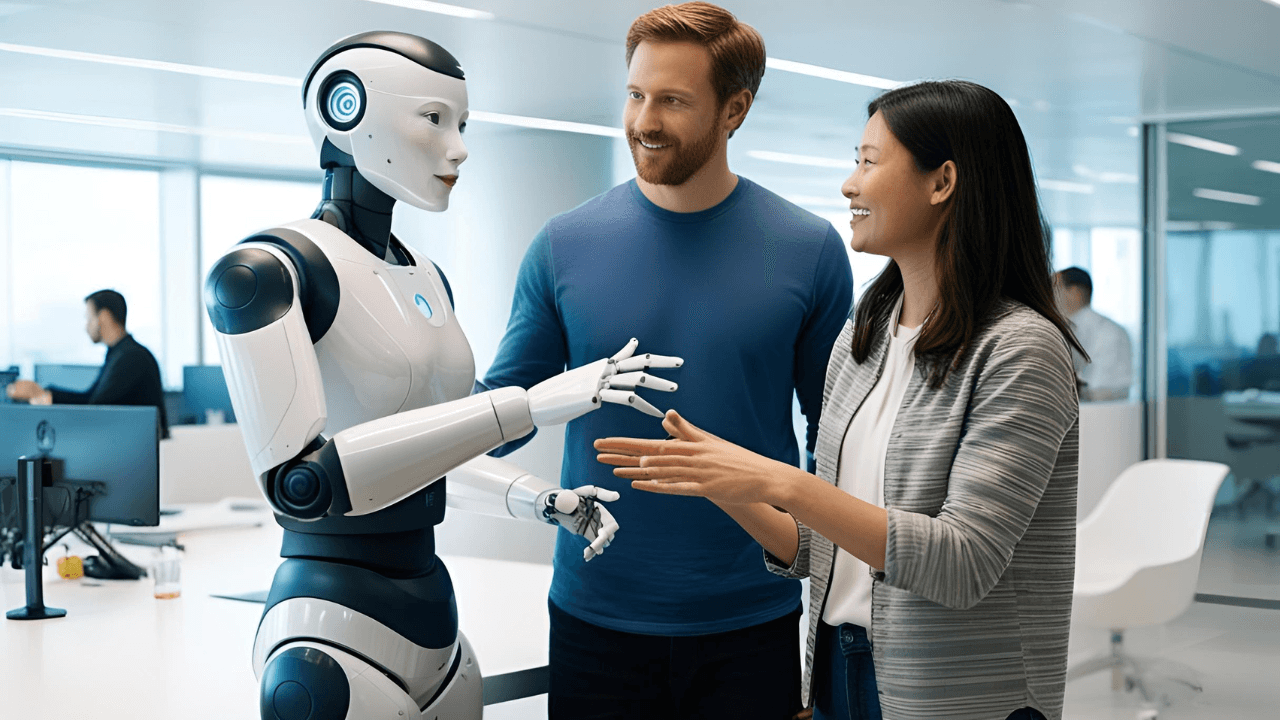

Imagine an AI assistant that doesn’t just set calendar reminders but walks across your house to hand you a water bottle, reads your facial expressions, and adjusts its behavior accordingly. This blend of perception, cognition, and movement is what makes embodied AI so groundbreaking — and potentially transformative for the future of human-machine collaboration.

From Code to Body: The Evolution of Embodied Artificial Intelligence

Early AI lived in labs and server rooms, solving problems first through symbolic reasoning and later through statistical analysis of large datasets. This “disembodied” AI became good at language processing, game-playing, and prediction, but it struggled with the real-world messiness of physics, touch, and context.

Embodied intelligence flips this paradigm. Rooted in fields like robotics and neuroscience, it emphasizes learning from physical experience. Just as toddlers grasp object permanence by handling toys, embodied AI systems learn spatial awareness, causality, and intent through trial and error in the real world.

The evolution accelerated with milestones like the DARPA Robotics Challenge and innovations in sensor fusion, computer vision, and reinforcement learning. Unlike traditional AI that operates in abstract digital space, embodied AI thrives in dynamic, uncertain environments — like warehouses, homes, or disaster zones — where real-time interaction and feedback are critical.

This shift from virtual brains to embodied agents is not just technological but philosophical: it reflects the idea that intelligence isn’t complete without a body. As we move toward more capable autonomous systems, embodied artificial intelligence is laying the groundwork for machines that can perceive, think, and act — not just compute.

Core Technologies Powering Embodied AI

Embodied AI systems are built on a powerful convergence of technologies that give machines the ability to perceive, learn, and act within the physical world.

The core driving force behind embodied AI is machine learning, especially deep learning and reinforcement learning. Reinforcement learning lets robots learn through interaction: they try different actions, receive feedback in the form of rewards, and improve over time. Instead of hand-coding every behavior, engineers let these systems discover effective strategies (called policies) for complex tasks largely on their own.
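To make the reward-driven loop concrete, here is a minimal sketch of tabular Q-learning, one of the simplest reinforcement learning algorithms. It is a toy and not how production robots are trained. The five-cell track, the reward values, and the hyperparameters (`alpha`, `gamma`, `epsilon`) are all illustrative assumptions. The agent is never told which way to go. It only receives a reward on reaching the goal and a small penalty for every other step.

```python
import random

# Hypothetical toy task: a robot on a 1-D track of 5 cells must reach cell 4.
N_STATES = 5
GOAL = 4
ACTIONS = (-1, +1)  # index 0 = step left, index 1 = step right

def step(state, action):
    """Apply an action and return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)  # walls at both ends
    if next_state == GOAL:
        return next_state, 1.0, True
    return next_state, -0.01, False  # small cost for every extra move

def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action_index]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: usually exploit the best-known action, sometimes explore.
            if rng.random() < epsilon:
                a = rng.randrange(2)
            else:
                a = max(range(2), key=lambda i: q[state][i])
            next_state, reward, done = step(state, ACTIONS[a])
            # Q-learning update: move toward reward + discounted best future value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q

q_table = train()
policy = ["left" if q[0] > q[1] else "right" for q in q_table[:GOAL]]
print(policy)
```

After training, the greedy policy should point right in every non-goal cell. Real robots face continuous states and actions, so they typically replace the table with a neural network (deep reinforcement learning), but the update follows the same idea.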

Sensor fusion plays an equally crucial role. Embodied AI systems integrate data from multiple sensors, such as LIDAR (Light Detection and Ranging), cameras, tactile sensors, and inertial measurement units (IMUs), to build a real-time understanding of their surroundings. Because each sensor is noisy in its own way, these streams are combined, often with probabilistic methods such as Kalman filters, into a coherent, 3D-aware view of the world that supports accurate movement and decision-making.
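Here is a minimal sketch of the idea. It assumes two independent sensors whose errors are roughly Gaussian. Each reading is weighted by the inverse of its variance, so the more trustworthy sensor counts for more. This is the measurement-update step of a Kalman filter for a stationary quantity. The readings and variances below are made-up values for illustration.

```python
from dataclasses import dataclass

@dataclass
class Estimate:
    value: float     # e.g. distance to an obstacle, in metres
    variance: float  # sensor uncertainty (sigma squared)

def fuse(estimates):
    """Inverse-variance weighted fusion of independent Gaussian estimates."""
    weights = [1.0 / e.variance for e in estimates]
    total = sum(weights)
    value = sum(w * e.value for w, e in zip(weights, estimates)) / total
    return Estimate(value=value, variance=1.0 / total)

# Hypothetical readings: a precise lidar and a noisier camera depth estimate.
lidar = Estimate(2.00, 0.01)
camera = Estimate(2.30, 0.09)
fused = fuse([lidar, camera])
print(f"{fused.value:.3f} m, variance {fused.variance:.4f}")
```

With these numbers, the fused distance is 2.03 m with a variance of 0.009. That result sits closer to the lidar reading and is more certain than either sensor alone. Real systems extend this with motion models, full 3D state, and cross-sensor calibration.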

Imagine a robot learning to play fetch. It uses its vision sensors to locate a ball, its tactile sensors to grasp it gently, and reinforcement learning algorithms to understand what earns it a “good job.” Over time, this robot improves its fetch game — just like a puppy would — through trial, error, and environmental feedback. That’s the real power of embodied AI systems: machines that not only think but physically learn.

As advances continue in edge computing, neuromorphic chips, and energy-efficient hardware, embodied AI is transitioning from lab experiments to deployable solutions — paving the way for robots that seamlessly navigate our homes, hospitals, and cities.

Embodied AI Robots in Action: Real-World Examples

Embodied AI is no longer science fiction — it’s already operating in the real world through highly advanced robots designed to perceive and interact with complex environments.

Take Boston Dynamics’ robots, such as Spot the quadruped and Atlas the humanoid. These machines combine computer vision, proprioception, and advanced motion control (much of it model-based, with reinforcement learning playing a growing role) to navigate rough terrain, open doors, carry payloads, and even perform choreographed dance routines.

Another example is Tesla’s Optimus humanoid robot, which is being developed to take on repetitive tasks in factories and, potentially, homes. It relies on cameras and motion sensors paired with neural networks that build on the same vision-based approach Tesla uses for its driver-assistance software, allowing it to interpret its surroundings and perform physical labor.

At Carnegie Mellon University’s REAL Lab (Robotics, Embodiment and Learning), researchers are building soft robots that learn dexterous tasks like folding laundry or preparing food, showing that embodied AI isn’t limited to rigid machines.

Use Cases:

- In logistics, embodied AI robots autonomously move packages in Amazon warehouses.

- In elderly care, robots assist with lifting, fetching, and emotional interaction.

- In disaster response, drones and robots equipped with embodied AI navigate rubble, assess risk zones, and locate survivors.

A comparison between humanoid robots and soft robotics highlights a spectrum: humanoids excel in structured environments and mimic human motion, while soft robots adapt to delicate, uncertain tasks — like handling biological tissue or exploring tight spaces.

These embodied AI examples are redefining what machines can do in physical space — from performing labor to acting as intuitive assistants in our everyday lives.

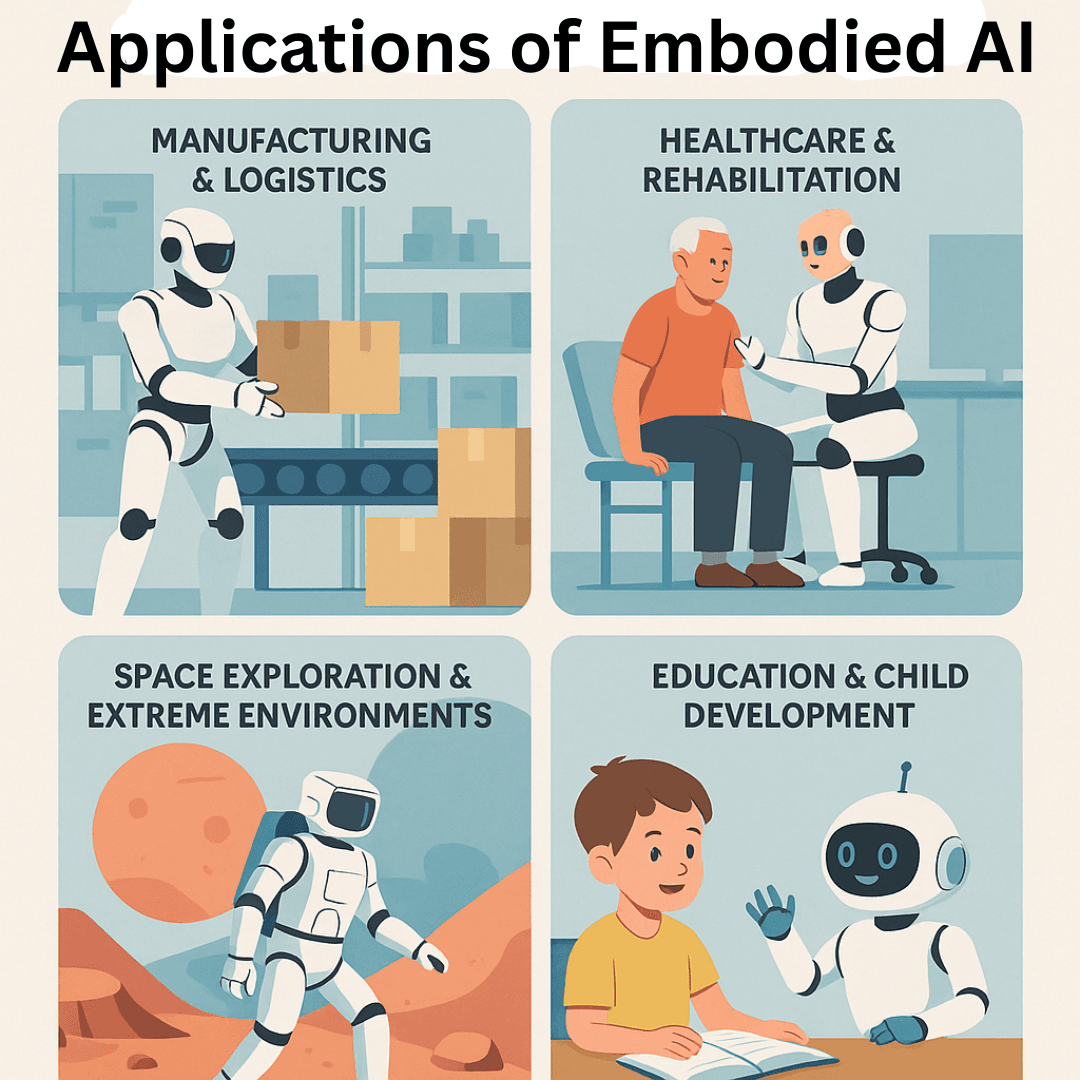

Applications of Embodied AI That Will Transform Our Lives

Embodied AI isn’t just a robotics trend—it’s a transformative force set to revolutionize key areas of human life.

🔧 Manufacturing & Logistics

In warehouses and factories, embodied AI applications are already improving efficiency. Robots powered by AI and sensor fusion can sort packages, load trucks, and even navigate complex supply chain environments autonomously. Unlike traditional automation, these machines adapt to dynamic layouts and unexpected events—such as a fallen item or a human stepping in their path.

🏥 Healthcare & Rehabilitation

One of the most impactful embodied AI applications is in healthcare and rehabilitation. Robots with real-time sensing and learning abilities are assisting patients with mobility exercises, helping elderly individuals with daily tasks, and even monitoring vital signs. For stroke survivors or patients recovering from surgery, embodied AI-powered physical therapy robots offer consistent support with personalized adjustments.

👨‍🚀 Space Exploration & Extreme Environments

Embodied AI is a game-changer for exploring hostile or inaccessible environments. NASA and other space agencies are developing autonomous robots that can perform tasks on the Moon and Mars. Similar systems are being built for deep-sea exploration, where sending humans is costly or dangerous.

🧠 Education & Child Development

Social robots are emerging in education, particularly for children and individuals with autism. These embodied agents use expressive gestures, voice interaction, and adaptive behavior to teach, engage, and even model emotional intelligence. Their physical presence makes learning more immersive and relatable than screen-based AI tutors.

Across sectors, embodied AI applications are turning science fiction into science fact—offering real-world benefits with the potential to reshape how we work, heal, explore, and learn.

Challenges of Embodied AI: Why It’s Hard to Build Robots That Think and Move

While the promise of embodied AI is exciting, its path to widespread adoption is filled with complex challenges—both technical and ethical.

🛠 Physical Constraints

Building robots that operate smoothly in the real world is tough. Embodied AI systems are limited by battery life, mobility mechanics, and sensor reliability. Real-time processing of multimodal data such as vision, sound, and touch demands hardware that is both powerful and energy-efficient. Even today’s best robots can struggle with seemingly simple tasks, such as reliably grasping a fragile object or handling an unfamiliar staircase.

⚖️ Ethical and Social Concerns

Embodied AI raises tough questions. Will AI-powered robots replace human jobs? How do we ensure privacy when social robots are constantly observing and learning? In healthcare or education, how should an AI respond in morally gray scenarios? When AI moves from virtual spaces into the real world, issues like bias, autonomy, and accountability become even more pressing and complex.

🚲 The “Bicycle Problem” of AI

There’s a reason we don’t learn to ride a bike by reading about it. Embodied AI faces the same challenge: learning through physical experience is far slower—but often richer—than data-driven training alone. Teaching a robot to pour a glass of water requires not just code, but physical trial-and-error, sensory feedback, and context awareness. This makes progress slow, but also grounded and adaptable.

Despite the hurdles, these embodied AI limitations are being tackled head-on by researchers and engineers worldwide. Overcoming them is the key to unlocking machines that can truly integrate into—and enhance—our physical world.

The Future of Embodied AI: From Smart Toys to Full-Scale Robot Companions

The future of embodied AI is not only promising—it’s already in motion. As breakthroughs in hardware, AI models, and human-machine interaction converge, we’re moving closer to a world where intelligent machines will seamlessly integrate into our daily lives.

🤖 Interfacing with Large Language Models

Imagine a humanoid robot that doesn’t just move and see, but also speaks, reasons, and adapts. By connecting large language models, such as the GPT models behind ChatGPT, to physical agents, researchers are giving robots conversational intelligence. This enables them to follow complex verbal instructions, carry on social dialogue, and respond to unpredictable situations with context-aware behavior.
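One common pattern is to let the language model propose a plan in a structured format. The robot then checks each step against the skills it actually supports before moving. The sketch below assumes a JSON plan and a small, hypothetical skill library (`navigate_to`, `pick`, `place`, `say`). The model call itself is replaced by a hard-coded response, so no real LLM API is involved.

```python
import json

# Hypothetical skill library: the only actions this robot knows how to execute.
SKILLS = {"navigate_to", "pick", "place", "say"}

def validate_plan(llm_output: str):
    """Parse a JSON plan and reject any step the robot has no skill for."""
    plan = json.loads(llm_output)
    for i, step in enumerate(plan):
        if step.get("skill") not in SKILLS:
            raise ValueError(f"step {i}: unknown skill {step.get('skill')!r}")
    return plan

# Hard-coded stand-in for a model's reply to "Bring me the water bottle from the kitchen."
response = """[
  {"skill": "navigate_to", "target": "kitchen"},
  {"skill": "pick", "object": "water bottle"},
  {"skill": "navigate_to", "target": "user"},
  {"skill": "place", "object": "water bottle", "target": "user"}
]"""

for step in validate_plan(response):
    # Print each skill with its arguments; a real robot would dispatch to a controller here.
    print(step["skill"], {k: v for k, v in step.items() if k != "skill"})
```

Placing a validation layer between the model and the motors means an unexpected or hallucinated step is rejected rather than executed.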

✋ Advances in Haptics and Emotion Detection

Touch is becoming the next frontier. Haptic sensors allow embodied robots to “feel” textures, pressure, and resistance, making delicate tasks such as caregiving or collaborative manufacturing possible. Meanwhile, emotion detection based on facial expressions and voice analysis is being explored as a way for robots to respond empathetically in social settings such as eldercare or child education.
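As a rough illustration of how touch feedback enables gentle handling, the sketch below closes a loop between a simulated fingertip pressure sensor and the gripper force. It assumes a toy linear sensor model and made-up values for the target pressure, gain, and force limit. Real tactile control also has to deal with slip, noise, and nonlinear contact.

```python
def simulated_pressure(force, stiffness=0.8):
    """Toy fingertip sensor (assumption): contact pressure grows linearly with grip force."""
    return stiffness * force

def grip(target_pressure, max_force=5.0, gain=0.5, steps=30):
    """Proportional feedback loop: adjust grip force until sensed pressure matches the target."""
    force = 0.0
    for _ in range(steps):
        error = target_pressure - simulated_pressure(force)
        # Proportional correction, clamped so the gripper never squeezes harder than max_force.
        force = min(max(force + gain * error, 0.0), max_force)
    return force, simulated_pressure(force)

force, pressure = grip(target_pressure=2.0)
print(f"force={force:.2f}, pressure={pressure:.2f}")
```

With a target pressure of 2.0, the loop settles at a force of about 2.5. If an unreachable target is requested, the clamp holds the force at `max_force` instead of crushing the object.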

🌐 Real-World Interaction at Human Scale

In the near future, embodied AI could power smart toys that grow with your child, robot co-workers trained on the job, and AI pets that adapt to your lifestyle. By 2035, it’s conceivable we’ll see embodied AI helping students with homework, assisting doctors in surgery, and offering companionship to the elderly, all with increasing autonomy and emotional intelligence.

As embodied AI matures, we won’t just interact with machines—we’ll coexist with them.

Conclusion: Why Embodied AI Is the Next Leap in Human-Tech Evolution

From static code to sensory-rich agents, embodied AI represents the next evolutionary leap in artificial intelligence. It’s no longer just about thinking machines—it’s about machines that move, perceive, and react in the real world.

This shift isn’t just technological—it’s philosophical. The line between software and hardware is blurring, and with it, our understanding of intelligence is expanding. The future of embodied AI points toward a world where robots don’t just serve as tools but as adaptive, responsive partners in every facet of life—from homes and schools to hospitals and space stations.

Final thought: Embodied AI could turn robots from tools into true collaborators—blending mind and motion to enhance the human experience.
