Introduction: Talking to Animals, a Sci-Fi Dream Becoming Real

Imagine holding a conversation with a dolphin — and it responds.

It may sound like fantasy, but modern science is moving this dream closer to reality. Thanks to major breakthroughs in artificial intelligence (AI) for animal communication, researchers are beginning to decode the vocal signals of animals, with dolphins at the center of this fascinating frontier.

Dolphins have long intrigued scientists for their advanced cognition, emotional intelligence, and structured vocal patterns. Their use of whistles, echolocation clicks, and even signature “names” suggests a language more complex than we once believed. However, interpreting this sophisticated communication has proven nearly impossible using traditional methods.

Now, AI is changing the game for research on dolphins and other cetaceans. Projects like Project CETI (Cetacean Translation Initiative) are using advanced AI models to analyze massive datasets of sperm whale vocalizations. The goal? To crack the code of interspecies communication with AI tools that detect vocal patterns, associate them with specific behaviors, and eventually generate human-understandable translations.

In addition, tech giants like Google have partnered with marine researchers to build AI models, such as DolphinGemma, that focus specifically on dolphin vocalizations. These efforts mark a major leap forward for AI-assisted dolphin research, allowing scientists to process and interpret underwater sounds at unprecedented scale and speed.

As AI for animal communication continues to evolve, we’re no longer just observing the animal kingdom; we’re beginning to try talking back. And the responses may soon surprise us.

Decoding Dolphin Language: A Puzzle for AI

Despite decades of marine research, decoding dolphin language remains one of science’s most captivating challenges. Dolphins don’t just make random noises: their vocalizations are complex, structured, and vary with social context, location, and even individual identity. Understanding this “language” requires more than sharp ears; it demands the analytical muscle of AI built for interspecies communication.

At the forefront of this effort is research that uses machine learning to identify patterns in massive sound libraries. AI models are trained on thousands of dolphin clicks, whistles, and burst pulses, and the patterns they find are correlated with behaviors like hunting, mating, or social bonding. Unlike human analysts, AI can process and compare hours of audio across different pods, locations, and times, revealing patterns we would likely miss.
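The core idea of correlating sounds with behaviors can be illustrated with a simple co-occurrence count. This is a minimal sketch built on two assumptions. First, each recorded call has already been labeled with a call type. Second, each call is paired with a behavior observed at the same moment. The call-type names and observations are hypothetical, and real studies use far larger datasets and proper statistical tests.

```python
from collections import Counter, defaultdict

def behavior_given_call(observations):
    """Estimate P(behavior | call type) from (call_type, behavior) pairs."""
    counts = defaultdict(Counter)
    for call_type, behavior in observations:
        counts[call_type][behavior] += 1
    table = {}
    for call_type, behaviors in counts.items():
        total = sum(behaviors.values())
        table[call_type] = {b: n / total for b, n in behaviors.items()}
    return table

# Hypothetical annotated observations: (call type, behavior seen at the same time)
observations = [
    ("burst_pulse", "foraging"),
    ("burst_pulse", "foraging"),
    ("burst_pulse", "socializing"),
    ("whistle_A", "socializing"),
    ("whistle_A", "socializing"),
    ("click_train", "foraging"),
]

table = behavior_given_call(observations)
print(table["burst_pulse"])  # foraging ≈ 0.67, socializing ≈ 0.33
```

A call type that is strongly skewed toward one behavior becomes a candidate for closer study. The skew alone does not establish what the call means.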

One example is the Dolphin Communication Project (DCP), which applies AI to tag, categorize, and interpret dolphin exchanges in real-world settings. Techniques from natural language processing (NLP), the field that analyzes human speech and text, are being adapted to look for syntax-like structures in dolphin vocalizations.
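One of the simplest NLP techniques that carries over is the n-gram model, which measures how often one unit follows another. Below is a minimal bigram sketch. It assumes recordings have already been segmented into discrete, labeled vocal units; the labels W1, W2, and BP are hypothetical. Strong, consistent transition patterns would hint at sequential structure, though they would not by themselves prove syntax.

```python
from collections import Counter, defaultdict

def bigram_probabilities(sequences):
    """Estimate P(next unit | current unit) from sequences of call-type tokens."""
    transitions = defaultdict(Counter)
    for seq in sequences:
        # Count each adjacent pair (current unit, next unit)
        for current, nxt in zip(seq, seq[1:]):
            transitions[current][nxt] += 1
    probs = {}
    for current, nexts in transitions.items():
        total = sum(nexts.values())
        probs[current] = {nxt: n / total for nxt, n in nexts.items()}
    return probs

# Hypothetical sequences of vocal units, already segmented and labeled
sequences = [
    ["W1", "W2", "BP"],
    ["W1", "W2", "W2"],
    ["W1", "BP"],
]

probs = bigram_probabilities(sequences)
print(probs["W1"])  # W2 follows W1 in 2 of 3 cases, BP in 1 of 3
```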

Another major project, Project CETI (Cetacean Translation Initiative), is using deep learning and unsupervised models to try to decode the layered signals of sperm whales. These models are similar to those used to translate between human languages without paired example translations. Although the project focuses on a different species, its tools and findings are directly influencing dolphin communication research.

These advances suggest that AI may one day allow us not only to interpret what dolphins are saying but also to respond. It’s a scientific moonshot, but every click and whistle analyzed brings us closer to understanding the minds of one of Earth’s most intelligent non-human species.

How AI is Revolutionizing Animal Behavior Research

For centuries, understanding animal behavior relied on patient observation, handwritten notes, and hours of footage — but today, AI in animal behavior research is transforming the field. By automating pattern recognition and interpreting complex signals, artificial intelligence is giving scientists an entirely new lens through which to understand how animals think, feel, and interact.

At the core of this revolution is machine learning — algorithms that learn from enormous volumes of data, including audio recordings, video footage, movement tracking, and environmental context. What once took researchers years of manual coding, playback analysis, and guesswork can now be done in hours or days using intelligent systems.

🐘 Beyond Dolphins: A Global Look at AI-Powered Animal Studies

While dolphin research often gets the spotlight, AI is enabling groundbreaking work across the animal kingdom:

- Elephants: In Kenya, researchers are using AI to analyze seismic signals — low-frequency vibrations elephants use to communicate through the ground. AI is helping to distinguish between calls related to mating, danger, or reunion, offering insights into elephant emotions and decision-making.

- Parrots: At Harvard and MIT, researchers are applying deep learning to analyze parrot mimicry and social calls. These models are revealing surprising linguistic complexity in how parrots form relationships and recognize individual “voices.” Before these AI tools existed, such insights were nearly impossible to quantify.

- Prairie Dogs: Cognitive ethologist Dr. Con Slobodchikoff used AI-enhanced acoustic analysis to decode the “language” of prairie dogs. His research found that they use different vocalizations not only for different predators, but also for color, size, and speed — forming what he calls a “highly descriptive language system.”

📊 Automating the Invisible

One of the most exciting elements of AI in animal behavior research is its ability to detect patterns invisible to the human eye or ear. For example:

- Facial recognition AI is now used to identify individual chimpanzees, helping researchers track behavior across time without tagging or interfering with the animals.

- Computer vision systems trained on camera trap footage can classify hundreds of species automatically, enabling conservationists to monitor migration, breeding, or stress behaviors in the wild.
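Systems that identify individual animals from photos commonly map each image to a numeric embedding. They then compare that embedding against a gallery of known individuals. The sketch below shows only this matching step and assumes a trained model has already produced the embeddings. The three-dimensional vectors, names, and 0.9 similarity threshold are hypothetical; real embeddings typically have hundreds of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two embedding vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))

def identify(query, gallery, threshold=0.9):
    """Return the best-matching known individual, or None if nothing is similar enough."""
    best_name, best_score = None, threshold
    for name, embedding in gallery.items():
        score = cosine_similarity(query, embedding)
        if score >= best_score:
            best_name, best_score = name, score
    return best_name

# Hypothetical gallery of embeddings for previously catalogued individuals
gallery = {
    "individual_A": [0.9, 0.1, 0.0],
    "individual_B": [0.0, 0.8, 0.6],
}

print(identify([0.85, 0.15, 0.05], gallery))  # matches individual_A
print(identify([0.5, 0.5, 0.7], gallery))     # None: possibly a new individual
```

Returning None below the threshold lets researchers flag potential newcomers instead of forcing every face onto a known individual.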

In all of these cases, AI tools not only speed up analysis but also reveal previously hidden emotional, cognitive, and social dimensions of animal life.

AI is no longer just a tool for observing animals — it’s helping us understand their minds. As datasets grow and models become more refined, the gap between our world and theirs continues to narrow, bringing us closer to something truly extraordinary: a shared, meaningful connection between species.

The Technology Behind Interspecies Communication

Bridging the communication gap between humans and animals isn’t just about listening; it’s about listening intelligently. AI for interspecies communication relies on a combination of hardware and software to collect, process, and analyze the sounds and signals animals produce. From deep-sea vocalizations to land-based movement patterns, the technology supporting this field is as advanced as it is diverse.

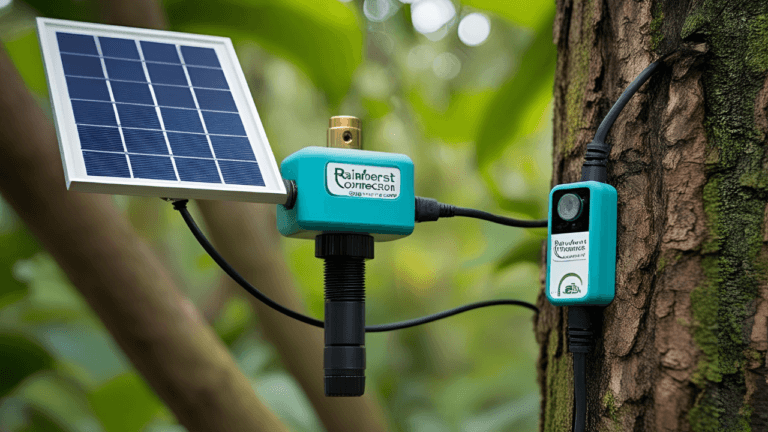

🔧 The Tools: From Ocean Floors to Forest Canopies

To give AI enough material to decode animal signals, researchers use a variety of tools designed to gather large volumes of real-world data:

- Acoustic sensors: High-sensitivity underwater microphones, known as hydrophones, record dolphin, whale, and fish vocalizations across different environments and seasons.

- Underwater drones: These autonomous devices can follow pods of dolphins or whales, collecting real-time acoustic and behavioral data even in deep ocean environments.

- Wearable trackers: Lightweight sensors placed on animals record their movements, body posture, and environmental conditions. This contextual data is crucial for understanding what a particular sound or signal might mean.

- Neural networks: On the back end, convolutional and recurrent neural networks process sound waveforms (or spectrograms derived from them), classify signals, and detect patterns. All of these steps are key to decoding animal signals.
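Before a convolutional network sees a recording, the waveform is often converted into a spectrogram, a time-frequency picture of the sound. The sketch below computes one with a windowed short-time Fourier transform in plain Python. The frame size, hop, sample rate, and synthetic 12 kHz tone are illustrative assumptions. Production pipelines would use an optimized FFT library rather than this naive O(N²) DFT.

```python
import cmath
import math

def hann(n):
    """Hann window of length n, used to reduce spectral leakage at frame edges."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]

def magnitude_spectrum(frame):
    """Naive DFT magnitudes for bins 0..N/2 (fine for a demo, far too slow for real data)."""
    n = len(frame)
    return [
        abs(sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
        for k in range(n // 2 + 1)
    ]

def spectrogram(signal, frame_size=64, hop=32):
    """Split the signal into overlapping windowed frames and take each frame's spectrum."""
    window = hann(frame_size)
    frames = []
    for start in range(0, len(signal) - frame_size + 1, hop):
        frame = [s * w for s, w in zip(signal[start:start + frame_size], window)]
        frames.append(magnitude_spectrum(frame))
    return frames

# Synthetic tone standing in for a whistle: 12 kHz sampled at an assumed 96 kHz
sample_rate, freq = 96_000, 12_000
signal = [math.sin(2 * math.pi * freq * t / sample_rate) for t in range(1024)]

spec = spectrogram(signal)
bin_hz = sample_rate / 64  # frequency resolution of a 64-sample frame
peak_bin = max(range(len(spec[0])), key=lambda k: spec[0][k])
print(len(spec), peak_bin * bin_hz)  # number of frames, peak frequency in Hz
```

Each row of `spec` is one time slice. Stacked together, the rows form the image-like input that a convolutional network can classify.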

🧠 No Rosetta Stone: Training AI Without Labels

Unlike human languages, dolphin clicks and elephant rumbles come with no dictionary, grammar guide, or Rosetta Stone. That’s why AI for interspecies communication relies heavily on unsupervised learning, a type of machine learning in which the algorithm finds structure in data without labeled examples.

For example, audio tokenizers such as Google’s SoundStream convert recordings into sequences of discrete tokens. Clustering methods can then group similar vocalizations, identifying which sounds occur together or in response to specific actions. This pattern recognition lets AI propose hypotheses about meaning even when no human label is available, an essential step toward building animal translation tools.
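Tokenizers like SoundStream are learned neural models. A much simpler, swapped-in technique, k-means clustering, shows the underlying idea of letting structure emerge from unlabeled data. This sketch assumes each vocalization has already been reduced to a small feature vector; here the features are hypothetical duration and peak-frequency values. It is not how SoundStream or any specific project works.

```python
import math

def kmeans(points, k, iterations=20):
    """Plain k-means: assign points to the nearest centroid, then move centroids to cluster means."""
    # Simple deterministic initialization: the first k points (k-means++ is more robust)
    centroids = [tuple(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: nearest centroid by Euclidean distance
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its members
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return labels, centroids

# Hypothetical features per vocalization: (duration in s, peak frequency in kHz)
points = [
    (0.20, 8.0), (0.25, 8.5), (0.22, 7.8),    # short, lower-pitched calls
    (1.00, 15.0), (1.10, 14.5), (0.95, 15.5), # long, higher-pitched calls
]

labels, centroids = kmeans(points, k=2)
print(labels)  # [0, 0, 0, 1, 1, 1]: two candidate call types
```

With real data, features would first be scaled to comparable ranges so that no single feature, such as frequency here, dominates the distance.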

⚠️ The Challenges: Nature Isn’t Always Clear

Despite its promise, using AI to decode animal language faces serious hurdles:

- Lack of labeled data: We simply don’t have enough verified “animal vocabulary” to train large-scale models with high accuracy.

- Signal noise: Especially in underwater settings, AI must learn to filter out background noise from boats, waves, and other marine life.

- Dialects and variability: Dolphins in the Caribbean don’t sound the same as those in the Pacific. Even within a single species, vocalizations can vary by group or geography, so models must account for regional “dialects.”
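A common first defense against background noise is to estimate the noise floor and keep only segments that rise clearly above it. The sketch below is a minimal energy detector built on simplifying assumptions. Most frames are assumed to be background. The 10 dB threshold and the frame size are arbitrary illustrative values, and the "call" is a synthetic tone. Real underwater pipelines add band-pass filtering and far more robust detectors, since boat noise can be loud and persistent.

```python
import math
import random
import statistics

def frame_energies(signal, frame_size):
    """Mean squared amplitude of consecutive, non-overlapping frames."""
    return [
        sum(s * s for s in signal[i:i + frame_size]) / frame_size
        for i in range(0, len(signal) - frame_size + 1, frame_size)
    ]

def detect_events(signal, frame_size=100, threshold_db=10.0):
    """Flag frames whose energy exceeds the estimated noise floor by more than threshold_db."""
    energies = frame_energies(signal, frame_size)
    # Assumption: most frames are background noise, so the median approximates the floor
    noise_floor = max(statistics.median(energies), 1e-12)
    return [10 * math.log10(max(e, 1e-12) / noise_floor) > threshold_db for e in energies]

# Synthetic data: 20 frames of Gaussian background noise (seeded for reproducibility)
rng = random.Random(0)
signal = [rng.gauss(0.0, 0.05) for _ in range(2000)]
for t in range(500, 700):  # frames 5 and 6 get a much louder tonal "call"
    signal[t] += math.sin(2 * math.pi * t / 20)

flags = detect_events(signal)
detected = [i for i, is_event in enumerate(flags) if is_event]
print(detected)  # [5, 6]
```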

Even so, rapid advances in recording technology and analytics are pushing these limits. As tools become more sophisticated and models more capable, AI-based decoding of animal language is moving from a theoretical concept toward practical application. That shift paves the way for future real-time animal translation and groundbreaking interspecies communication.

Real-World Case Studies: From Dolphins to Dogs

While the idea of speaking to animals still feels like science fiction, several real-world experiments have already begun to narrow the interspecies communication gap. These projects offer a glimpse of tangible progress in decoding animal language with AI, not just in labs but in oceans, living rooms, and aviaries around the world.

🐋 CETI: Cracking the Code of Sperm Whales

One of the most ambitious efforts in AI-driven animal communication research is the Cetacean Translation Initiative (CETI). This global, interdisciplinary project is using AI and high-performance computing to analyze thousands of hours of sperm whale vocalizations. It focuses specifically on codas, which are short, patterned sequences of clicks. Codas differ in rhythm, repetition, and structure, and AI systems are well equipped to detect and analyze such patterns.

Using unsupervised learning and deep neural networks, CETI is attempting to group similar codas, associate them with behaviors (e.g., feeding, socializing, migrating), and eventually generate responses. While translation is still far off, the project represents a major step toward genuine AI-mediated communication with animals.
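Grouping similar codas starts with describing them numerically. One way to capture rhythm independently of speed is to take the intervals between clicks and divide them by the coda's total duration. The sketch below illustrates that idea under stated assumptions. The click times are hypothetical, and the 0.05 tolerance and the "same click count" rule are illustrative choices, not CETI's actual method.

```python
def rhythm(click_times):
    """Inter-click intervals divided by total coda duration (tempo-independent rhythm)."""
    icis = [b - a for a, b in zip(click_times, click_times[1:])]
    total = sum(icis)
    return [i / total for i in icis]

def same_rhythm(coda_a, coda_b, tolerance=0.05):
    """Treat two codas as the same rhythm type if they have the same number of clicks
    and every normalized interval differs by less than `tolerance`."""
    ra, rb = rhythm(coda_a), rhythm(coda_b)
    return len(ra) == len(rb) and all(abs(x - y) < tolerance for x, y in zip(ra, rb))

# Hypothetical click times in seconds
regular_fast = [0.0, 0.2, 0.4, 0.6, 0.8]  # five evenly spaced clicks
regular_slow = [0.0, 0.3, 0.6, 0.9, 1.2]  # same rhythm at a slower tempo
long_pause = [0.0, 0.1, 0.2, 0.3, 0.9]    # final interval much longer

print(same_rhythm(regular_fast, regular_slow))  # True
print(same_rhythm(regular_fast, long_pause))    # False
```

Separating rhythm from tempo means a fast and a slow version of the same pattern land in one group. Tempo can then be studied as a separate feature.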

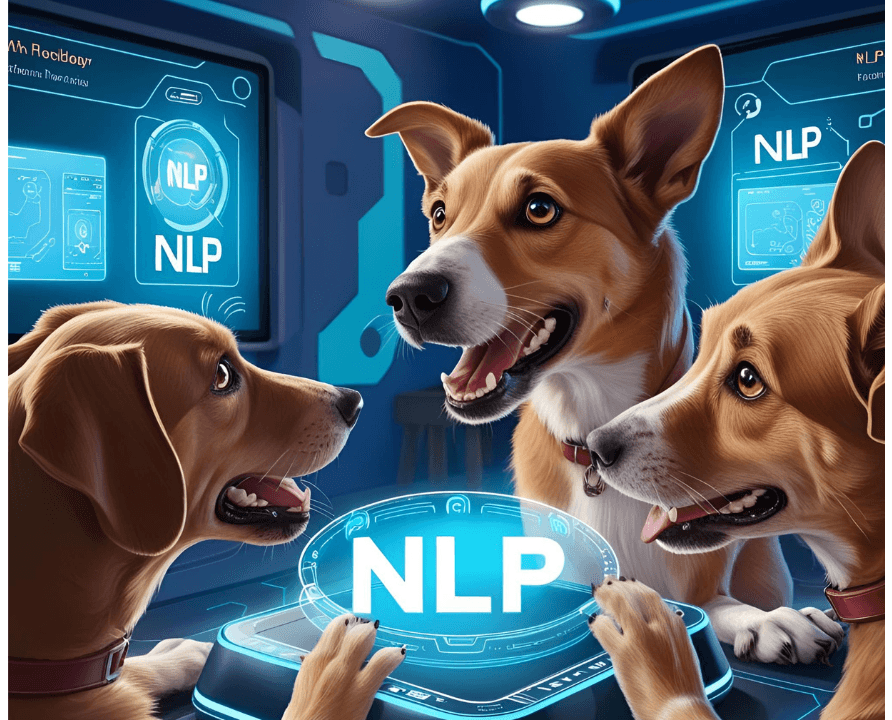

🐶 Dogs with Speech Buttons and NLP Models

In homes and research settings, domestic dogs are taking part in a different kind of communication experiment. The approach was popularized by speech-language pathologist Christina Hunger and is now being studied by researchers. Dogs are trained to press soundboard-style buttons that “speak” words like “outside,” “play,” or “mad.” Button presses can be logged and analyzed with computational models, including natural language processing (NLP) techniques, to detect sequences, emotions, and intent.

While it’s debated whether dogs understand grammar or just context, AI analysis of button patterns has revealed consistent usage. That consistency suggests early signs of symbolic communication, a core question in AI-assisted research on animal language.

🐦 AI-Assisted Bird Interfaces

In a more experimental space, researchers are working with birds, such as zebra finches and crows, to create AI-powered robotic interfaces. These setups involve sensors and actuators that respond to certain bird vocalizations, gestures, or positions. In some cases, the birds learn to interact with these tools in a call-and-response format, allowing rudimentary communication between species.
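At their simplest, these call-and-response setups follow a detect-then-act loop. Below is a minimal sketch of the control logic. It assumes an upstream classifier already emits labels for recognized vocalizations or positions. The labels, actions, and two-second refractory period are all hypothetical. The refractory period is one plausible design choice that keeps the device from responding to every repeated call.

```python
class CallResponseController:
    """Map recognized call or position labels to device actions, with a refractory period."""

    def __init__(self, responses, refractory_s=2.0):
        self.responses = responses        # hypothetical mapping: label -> action name
        self.refractory_s = refractory_s  # minimum seconds between two responses
        self.last_response_time = None

    def on_detection(self, label, timestamp):
        """Return the action to trigger for this detection, or None to stay silent."""
        action = self.responses.get(label)
        if action is None:
            return None  # unrecognized signal: do nothing
        if (self.last_response_time is not None
                and timestamp - self.last_response_time < self.refractory_s):
            return None  # too soon after the previous response
        self.last_response_time = timestamp
        return action

controller = CallResponseController(
    {"contact_call": "play_recorded_reply", "perch_hop": "dispense_seed"}
)
events = [("contact_call", 0.0), ("contact_call", 1.0), ("song", 2.5), ("perch_hop", 3.5)]
results = [controller.on_detection(label, t) for label, t in events]
print(results)
```

In this run, the second contact call arrives within the refractory window, and "song" has no mapped action. Only the first and last events therefore trigger a response.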

While still in early development, these interfaces are helping scientists probe the limits of AI-assisted animal communication, particularly in small-brained species that learn quickly.

🧠 What We’ve Learned

These experiments highlight both the potential and the limits of AI for decoding animal language:

- ✅ What works: AI excels at identifying structure, repetition, and associations in large, noisy datasets — all crucial to understanding animal signals.

- ❌ What doesn’t (yet): True two-way communication remains elusive. Meaning is difficult to prove without context, and not all species “talk” the way we expect.

- 💡 What it teaches us: Communication is more than words. Through behavior, tone, and timing, animals convey intent — and AI is helping us pick up the signals we’ve ignored for too long.

These early case studies show that AI-based animal communication is more than a dream: it’s an emerging science. With each breakthrough, we’re getting closer to a shared language between species.

Ethical and Philosophical Implications

As breakthroughs in interspecies communication AI accelerate, we face profound ethical and philosophical questions that science alone cannot answer. Understanding animal minds might be one of the most revolutionary steps in human history — but with great understanding comes great responsibility. What do we do when the animals finally “speak”?

🧠 If Animals Could Speak — Would We Listen?

Imagine dolphins telling us they’re stressed by underwater noise pollution, or elephants expressing grief over habitat destruction. As AI in animal behavior research gives us tools to interpret their emotional and cognitive worlds, we may be confronted with uncomfortable truths. What if we hear what animals are “saying” — and don’t like it?

Interspecies communication AI doesn’t just decode sound — it could decode suffering, joy, resistance, or even requests. Are we prepared to act on what we learn? Or will we dismiss it when it becomes inconvenient?

🔐 Who Owns the Voice of Nature?

Another complex issue is data ownership. As researchers collect thousands of hours of animal sounds, signals, and movements using AI in animal behavior research, questions arise:

- Who owns this information — the scientists, the governments, or the animals themselves?

- Can this data be commercialized?

- Should animals have data rights?

In a world increasingly shaped by digital privacy laws, it’s not far-fetched to imagine ethical frameworks that treat animal data with similar respect. This is especially true if AI eventually enables individualized recognition of animals (e.g., “this dolphin said this”). At that point, the concept of animal autonomy becomes less abstract and more actionable.

🕹️ From Understanding to Manipulation?

With the power to decode comes the potential to control. Could AI be used not just to understand animals, but to manipulate them? For example, could researchers use playback of specific dolphin calls to influence pod behavior? Could livestock be conditioned via AI feedback systems to behave in certain ways?

Interspecies communication AI could shift the balance from coexistence to control. This raises a deep philosophical dilemma: are we building bridges of empathy, or designing tools of exploitation?

⚖️ Empathy, Utility, or Exploitation?

The line between using AI for understanding and using it for human benefit can blur quickly. Some questions we must confront:

- Are we listening to animals to protect them — or to better use them?

- Can AI deepen interspecies empathy instead of commodifying animal behavior?

- Is it possible to build a future where AI in animal behavior research respects animal agency, rather than reducing them to data points?

If interspecies communication AI continues to evolve, we’ll need not just engineers and scientists, but ethicists, philosophers, and perhaps even legal advocates for animals at the table.

Understanding animals could redefine what it means to be human — and what it means to share this planet. As we move forward, the challenge won’t just be technical — it’ll be moral.

The Future: AI as a Translator Between Worlds

Imagine a world where your smartphone can translate the chirps of a parrot or the clicks of a dolphin into plain English. While it may sound like science fiction, advances in AI for animal communication are making this a plausible future.

Scientists are developing tools that could one day function as universal animal translators, capable of interpreting species-specific sounds, gestures, and emotional states. As AI gets better at decoding animal signals, we’re moving closer to real-time interfaces that not only recognize signals but also interpret their meaning and emotional nuance.

🌍 Real-Time Dolphin Translators and More

Inspired by initiatives like CETI, researchers are envisioning real-time dolphin translators — wearable or mobile devices that could allow humans to “converse” with dolphins during observation or therapy sessions. These tools would leverage acoustic sensors, neural networks, and behavioral models to convert marine vocalizations into structured messages.

But dolphins aren’t the only species on the radar. AI-based animal communication research is also expanding into experiments with elephants, dogs, apes, and even bees. Using unsupervised learning, researchers are applying AI to animal “languages” without predefined dictionaries, somewhat like how children learn language through exposure and context.

🤖 AI-Based Empathy and “Wild Social Networks”

Looking ahead, AI that decodes animal language could do more than translate; it might teach us empathy. Emerging tools may simulate animal experiences for human understanding. What does a bat hear, or what does a whale feel when sonar disrupts its path?

We may even see the rise of “wild social networks” — cloud-based systems where AI monitors, shares, and interprets animal communication across regions. This would allow conservationists to track migration, emotional stress, mating calls, and more — and respond with tailored, respectful interventions.

🌱 A Deeper Connection with Nature

Ultimately, the future of AI in animal communication is not about control but about connection. As AI helps us understand animals not as resources but as fellow communicators, we’ll begin to see nature not just as a backdrop but as an active dialogue.

By using AI to decode animal language, we are laying the groundwork for a new kind of relationship, one built not on dominance but on listening.

Conclusion: A New Era of Connection with the Wild

The idea that we could one day hold meaningful conversations with animals is no longer pure fantasy; it’s becoming a scientific frontier. Through AI-driven research on animal communication, we are witnessing the dawn of a new era. In it, humans may finally understand the rich, layered, and intelligent worlds of the creatures around us.

Interspecies communication AI is doing more than decoding sounds — it’s unlocking the possibility of mutual understanding, empathy, and co-existence. With every dataset processed and every pattern discovered, we’re peeling back the layers of silence that have separated us from the rest of the animal kingdom.

This journey isn’t just about discovery — it’s about rediscovery. Rediscovering our place in nature. Rediscovering the value of every voice, no matter how small, strange, or wild.

“The more we understand their world, the more human ours becomes.”

As AI for animal communication matures, we are not just translating animal languages; we are rewriting our own story as caretakers, collaborators, and conscious members of Earth’s living community.

Curious about the wider impact of AI beyond animal communication? Dive into these thought-provoking reads:

- Exploring the Impact of Generative AI in Creative Industries: How artificial intelligence is revolutionizing film, design, music, and beyond.

- AI Power Consumption Exploding: As AI grows smarter, it’s demanding more energy. What does that mean for our planet?

- AI and Human Thinking: Discover how AI isn’t just copying human thought; it’s changing how we think.

- Is AI Making Us Dumber? A critical look at whether our reliance on technology is quietly eroding human intelligence.

- Dystopian Technology: How Science Fiction Became Reality. From AI-driven courts to surveillance cities, science fiction predictions are becoming today’s headlines.

- Harnessing AI in Predicting Natural Disasters: See how machine learning is being used to forecast and prevent real-world catastrophes.